Semantic Embedding Augmentation of USDA’s Food Nutrient Imputation test#

INTRODUCTION#

The USDA National Nutrient Database for Standard Reference provides comprehensive nutrient content information for approximately 7,800 foods and 150 nutrients. While this dataset could theoretically contain around 1.17 million food-nutrient pairings, only 31% (~360,000) are direct measurements. The remaining data is either missing (45%) or estimated through USDA imputation methods (24%).

This research aims to develop machine learning models to predict missing nutrient values in the USDA database. Rather than addressing all nutrients simultaneously, we will focus on a single nutrient (selected through exploratory data analysis) to demonstrate the potential of our approach. The models will leverage existing nutrient measurements and food name description embeddings as predictive features.

The primary objective is twofold: first, to accurately predict the selected nutrient’s content in foods based on other available data, and second, to compare our model’s predictions against USDA’s current imputation methods. We will train our models exclusively on measured data, holding out 20% of foods for testing, to enable direct comparison with USDA estimates and evaluate the model’s generalization capabilities.
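The split can be sketched as follows. This assumes a wide table with one row per food and an iron `source_type` column in which `'1'` marks a measured value, like the food_rows table built later. Because each row is one food, sampling 20% of the food_ids holds out whole foods. The helper name `split_measured_foods` and the toy data are hypothetical:

```python
import numpy as np
import pandas as pd

RANDOM_SEED = 42  # same seed value the notebook defines


def split_measured_foods(food_rows: pd.DataFrame, test_frac: float = 0.2, seed: int = RANDOM_SEED):
    """Hypothetical helper: keep foods whose iron value was measured
    (source_type == '1') and hold out test_frac of those foods for testing."""
    measured = food_rows[food_rows["source_type"] == "1"]
    food_ids = measured["food_id"].unique()
    rng = np.random.default_rng(seed)
    n_test = int(round(test_frac * len(food_ids)))
    test_ids = set(rng.choice(food_ids, size=n_test, replace=False))
    is_test = measured["food_id"].isin(test_ids)
    return measured[~is_test], measured[is_test]


# Toy table: 10 foods with measured iron and 2 foods with estimated iron
toy = pd.DataFrame({
    "food_id": [f"{i:05d}" for i in range(12)],
    "source_type": ["1"] * 10 + ["4", "7"],
    "Iron, Fe": np.linspace(0.1, 3.0, 12),
})
train, test = split_measured_foods(toy)
print(len(train), len(test))
```

Estimated rows (source_type 4 or 7 here) never enter either split. This keeps the training and test targets restricted to measured values.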

This research could potentially improve the quality and reliability of the USDA dataset by providing data-driven alternatives to current imputation methods. Success in this endeavor would contribute to more accurate nutritional information for research, policy-making, and public health applications.

This project uses a traditional predictive modeling approach that centers on a single nutrient. My TA, Robin Liu, suggested matrix completion as an alternative. This method could predict all missing nutrient values at once, rather than one nutrient at a time. However, the single-nutrient focus aligns better with the course requirements and simplifies evaluation, making it easier to assess performance and interpret results. Future work could explore matrix completion to predict multiple nutrients simultaneously, incorporating factors like food types and production methods as additional inputs.
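For comparison, here is one simple matrix-completion technique: iterative low-rank SVD imputation, which fills every missing cell of a foods × nutrients matrix at once. This is an illustration only and not necessarily the formulation the TA had in mind. The function name and the rank-1 toy matrix are hypothetical:

```python
import numpy as np


def iterative_svd_impute(X, rank=1, n_iters=500, tol=1e-10):
    """Fill NaN entries of X with a rank-`rank` SVD reconstruction,
    repeating until the filled-in values stop changing."""
    mask = ~np.isnan(X)
    col_means = np.nanmean(X, axis=0)
    filled = np.where(mask, X, col_means)  # initial guess: column means
    for _ in range(n_iters):
        U, s, Vt = np.linalg.svd(filled, full_matrices=False)
        low_rank = (U[:, :rank] * s[:rank]) @ Vt[:rank, :]
        updated = np.where(mask, X, low_rank)  # observed entries stay fixed
        change = np.max(np.abs(updated - filled))
        filled = updated
        if change < tol:
            break
    return filled


# Toy foods x nutrients matrix that is exactly rank 1, with one entry missing
X = np.outer([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0])
X[3, 2] = np.nan  # true value is 12
completed = iterative_svd_impute(X)
print(round(completed[3, 2], 3))
```

On real data the nutrient columns would first need comparable scales, because they mix g and mg. The rank would also have to be tuned on held-out measured values.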

DEPENDENCY SETUP#

Database Connection#

We uploaded the USDA data into PostgreSQL rather than working from flat files. SQL databases in general offer:

Data integrity and consistency: SQL databases ensure reliable data with constraints, relationships, and ACID transactions.

Efficient querying: Indexes and query optimization allow faster filtering and retrieval compared to flat files.

Schema enforcement: Prevents data corruption by enforcing structure and rules.

For the USDA database specifically, PostgreSQL offers additional advantages:

Remote access: Team members can collaborate from anywhere.

Complex queries: Easy handling of relationships and multi-table joins.

Better performance: Handles large datasets efficiently.

Data validation: Maintains referential integrity to prevent errors.

These benefits make PostgreSQL a better choice than flat files or isolated server storage.

import pandas as pd
from sqlalchemy import create_engine
from sqlalchemy.types import String
import seaborn as sns
import matplotlib.pyplot as plt
import numpy as np
from dotenv import load_dotenv
import os

RANDOM_SEED = 42
use_best_params = True

load_dotenv()
PGPASS = os.getenv('PGPASSWORD')

db_params = {
    'host': 'aws-0-us-east-1.pooler.supabase.com',
    'database': 'postgres',
    'user': 'postgres.tcfushkpetfaqsorgwww',
    'password': PGPASS,
    'port': '6543'
}

engine = create_engine(f"postgresql://{db_params['user']}:{db_params['password']}@{db_params['host']}:{db_params['port']}/{db_params['database']}")
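The f-string URL above breaks if the password contains characters such as `@`, `:` or `/`. The sketch below builds the same URL with the credentials percent-encoded by the standard library's `urllib.parse.quote_plus`, which is the approach the SQLAlchemy documentation suggests for special characters. The helper name `build_pg_url` and the sample credentials are hypothetical:

```python
from urllib.parse import quote_plus


def build_pg_url(user, password, host, port, database):
    """Build a PostgreSQL URL for create_engine, percent-encoding the
    credentials so characters such as '@', ':' or '/' do not break parsing."""
    return (
        f"postgresql://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


url = build_pg_url("postgres.example", "p@ss:w/rd", "localhost", "6543", "postgres")
print(url)  # postgresql://postgres.example:p%40ss%3Aw%2Frd@localhost:6543/postgres
```

The result could be passed to create_engine in place of the f-string. SQLAlchemy's `URL.create()` is another way to avoid hand-built URLs. Note that `quote_plus` raises a TypeError if PGPASSWORD is unset, because `os.getenv` then returns None.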

Selective Load File#

Some operations (such as database setup) are carried out using bash and SQL scripts.

The following function loads the important parts of these scripts for display:

def selective_load_file(filename):
    """
    Read and return the contents of a file, removing blank lines and SQL- and
    bash-style comment lines until encountering '**SUMMARY**'. Everything from
    the '**SUMMARY**' line onward is kept unchanged.

    Args:
        filename (str): Path to the file to be read

    Returns:
        str: Processed contents of the file, or None if the file is missing
        or cannot be read (an error message is printed instead of raising).
    """
    try:
        with open(filename, 'r') as file:
            lines = file.readlines()

        processed_lines = []
        found_summary = False

        for line in lines:
            # Check for summary marker
            if '**SUMMARY**' in line:
                found_summary = True

            # Before summary: remove blank lines and comments
            if not found_summary:
                # Skip empty lines or lines that are only whitespace
                if not line.strip():
                    continue
                # Skip SQL style comments (--) and bash style comments (#)
                if line.strip().startswith('--') or line.strip().startswith('#'):
                    continue

            processed_lines.append(line)

        return ''.join(processed_lines)

    except FileNotFoundError:
        print(f"Error: File '{filename}' not found")
    except IOError as e:
        print(f"Error reading file: {e}")

Dataframe Cache#

Some DataFrame construction and database fetch operations take a long time.

We wrap these time-consuming operations in the following function, which caches each resulting DataFrame as a pickle file under _cache/:

import os
import pandas as pd

def read_df_from_cache_or_create(key: str, createdf=None) -> pd.DataFrame:
    """
    Returns a DataFrame either from cache or creates it using the provided function.
    The supplied function may load the dataframe from a remote database or it may create it in some other way.
    If createdf is None, any existing cache for the given key is cleared.

    Parameters:
        key (str): Cache key to identify the DataFrame
        createdf (callable, optional): Function that returns a pandas DataFrame.
            If None, clears cache for the given key.

    Returns:
        pd.DataFrame: The cached or newly created DataFrame.
            Returns None if createdf is None (cache clearing mode).
    """
    # Ensure cache directory exists
    os.makedirs('_cache', exist_ok=True)

    # Construct cache file path
    cache_path = os.path.join('_cache', f'{key}.df')

    # If createdf is None, clear cache and return
    if createdf is None:
        if os.path.exists(cache_path):
            try:
                os.remove(cache_path)
                print(f"Cache cleared for key: {key}")
            except Exception as e:
                print(f"Error clearing cache for key {key}: {e}")
        return None

    # Check if cache file exists
    if os.path.exists(cache_path):
        try:
            # Load from cache
            return pd.read_pickle(cache_path)
        except Exception as e:
            print(f"Error reading cache file: {e}")
            # If there's an error reading cache, proceed to create new DataFrame

    # Create new DataFrame
    df = createdf()

    # Validate that we got a DataFrame
    if not isinstance(df, pd.DataFrame):
        raise ValueError("createdf function must return a pandas DataFrame")

    try:
        # Save to cache
        df.to_pickle(cache_path)
    except Exception as e:
        print(f"Warning: Could not save to cache: {e}")

    return df
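read_df_from_cache_or_create keys each pickle only by the name passed in. As a result, editing a query does not invalidate an old cache file. One possible refinement is to append a short hash of the whitespace-normalized SQL to the key. The helper `cache_key_for_query` below is hypothetical:

```python
import hashlib


def cache_key_for_query(name: str, query: str) -> str:
    """Hypothetical helper: derive a cache key that changes whenever the SQL text
    changes, so an edited query does not silently reuse a stale pickle."""
    normalized = " ".join(query.split())  # ignore whitespace-only edits
    digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]
    return f"{name}_{digest}"


k1 = cache_key_for_query("food_nutrient_rows", "SELECT * FROM iron_foods")
k2 = cache_key_for_query("food_nutrient_rows", "SELECT *\n  FROM iron_foods")
k3 = cache_key_for_query("food_nutrient_rows", "SELECT * FROM iron_foods LIMIT 10")
print(k1 == k2, k1 == k3)  # True False
```

Usage would look like `read_df_from_cache_or_create(cache_key_for_query("food_nutrient_rows", query), lambda: pd.read_sql_query(query, engine))`.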

Run Once Tracker#

Some operations (such as winsorization) must not run more than once on the same data.

We wrap such run-once operations using the following class:

class RunOnceTracker:
    _executed_keys = set()

    @staticmethod
    def run_once(key=None, target=None):
        """
        Executes the target function only if the key hasn't been used before.
        If key is None, clears all tracked keys.
        If target is None, clears just the specified key.

        Args:
            key: Unique identifier for this execution (string or hashable type), or None to clear all
            target: Function to execute, or None to clear the specified key

        Returns:
            Result from target() if executed, None if skipped or clearing
        """
        # Case 1: Clear all keys
        if key is None:
            RunOnceTracker._executed_keys.clear()
            print("Cleared all execution keys")
            return None

        # Case 2: Clear specific key
        if target is None:
            if key in RunOnceTracker._executed_keys:
                RunOnceTracker._executed_keys.remove(key)
                print(f"Cleared execution key: '{key}'")
            else:
                print(f"Key '{key}' was not found in execution tracking")
            return None

        # Case 3: Normal execution check
        if key in RunOnceTracker._executed_keys:
            print(f"run_once() key '{key}' has already been used. No action taken.")
            return None

        # Case 4: Execute target function and store key
        result = target()
        RunOnceTracker._executed_keys.add(key)
        return result

PRIMARY DATASET: USDA’s Food Nutrition Database#

Citation and URL#

The US Department of Agriculture (USDA) maintains a comprehensive database of food nutrient information, documenting the nutritional content (proteins, fats, vitamins, minerals, etc.) of thousands of food items.

USDA National Nutrient Database (primary dataset)

The USDA National Nutrient Database for Standard Reference provides detailed nutrient content information on various foods, compiled by the U.S. Department of Agriculture’s Agricultural Research Service. The main data table links foods to specific nutrient quantities, i.e., “Food {F} contains {A} amount of nutrient {N}.”

Haytowitz, David B.; Ahuja, Jaspreet K.C.; Wu, Xianli; Somanchi, Meena; Nickle, Melissa; Nguyen, Quyen A.; Roseland, Janet M.; Williams, Juhi R.; Patterson, Kristine Y.; Li, Ying; Pehrsson, Pamela R. (2019). USDA National Nutrient Database for Standard Reference, Legacy Release. Nutrient Data Laboratory, Beltsville Human Nutrition Research Center, ARS, USDA. https://doi.org/10.15482/USDA.ADC/1529216

@misc{haytowitz2019usda,

title = {{USDA National Nutrient Database for Standard Reference, Legacy Release}},

author = {Haytowitz, David B. and Ahuja, Jaspreet K.C. and Wu, Xianli and Somanchi, Meena and Nickle, Melissa and Nguyen, Quyen A. and Roseland, Janet M. and Williams, Juhi R. and Patterson, Kristine Y. and Li, Ying and Pehrsson, Pamela R.},

year = {2019},

publisher = {{Nutrient Data Laboratory, Beltsville Human Nutrition Research Center, ARS, USDA}},

url = {https://catalog.data.gov/dataset/usda-national-nutrient-database-for-standard-reference-legacy-release-d1570},

doi = {10.15482/USDA.ADC/1529216}

}

This USDA database is a key resource for nutritional information, offering detailed data on approximately 150 nutrients across roughly 7,800 food items. Nutrients include vitamins, minerals, macronutrients, and other compounds essential for health. However, the database has a significant limitation: only 31% of possible food-nutrient pairings are directly measured, 24% are estimates, and 45% are missing entirely. This issue creates challenges in using the USDA database for accurate dietary analysis and research.

Database Setup#

We start by converting and importing the USDA National Nutrient Database (Legacy Release) from its native Access database format (.accdb) into a PostgreSQL database hosted on Supabase.

There are several steps:

Download and unzip the source USDA database files.

Use mdbtools to extract table schemas and data.

Convert the Access database schema to PostgreSQL-compatible data types.

Copy all table data, using CSV as an intermediate format.

Run additional SQL scripts to create indexes, views, and tables for semantic embeddings.

Our bash script includes connection handling for Supabase and a ppsql wrapper function for database access:

## DOWNLOAD SR-Leg_DB.zip from https://agdatacommons.nal.usda.gov/articles/dataset/USDA_National_Nutrient_Database_for_Standard_Reference_Legacy_Release/24661818

## UNZIP to create SR_Legacy.accdb

function ppsql() {

(

. .env

# export PGPASSWORD=XXXXXXXXX

psql \

-h aws-0-us-east-1.pooler.supabase.com \

-p 6543 \

-d postgres \

-U postgres.tcfushkpetfaqsorgwww \

"$@"

)

} ; export -f ppsql

apt install mdbtools

mdb-tables SR_Legacy.accdb \

| tr " " "\n" \

| grep -v '^$' \

| while read t ; do echo "drop table if exists $t cascade;" ; done \

| ppsql

# s/Text(\(\d+\))?/VARCHAR(255)/g;

mdb-schema SR_Legacy.accdb \

| tr -d '[]' \

| perl -pe '

s/Text/VARCHAR/g;

s/Long Integer/INTEGER/g;

s/Integer/INTEGER/g;

s/Single/REAL/g;

s/Double/DOUBLE PRECISION/g;

s/DateTime/TIMESTAMP/g;

s/Currency/DECIMAL(19,4)/g;

s/Yes\/No/BOOLEAN/g;

s/Byte/SMALLINT/g;

s/Memo/TEXT/g;

' \

| tee schema.sql \

| ppsql

mdb-tables SR_Legacy.accdb \

| tr " " "\n" \

| grep -v '^$' \

| while read table ; do

echo $table

mdb-export SR_Legacy.accdb $table \

| ppsql -c "

COPY $table

-- (nutr_no, units, tagname, nutrdesc, num_dec, sr_order)

FROM STDIN

WITH (

FORMAT CSV,

HEADER TRUE,

DELIMITER ',',

QUOTE '\"'

);"

done

cat index_0011.sql | ppsql # link and index tables

cat iron_foods_0012.sql | ppsql # create iron foods view

cat documents_0010.sql | ppsql # documents table stores embeddings

cat einput_0010.sql | ppsql # einput view stores values that still need embeddings
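The perl step in the script above is an ordered list of text substitutions. The same mapping can be written in Python, which is handy for testing the conversion on a schema snippet before piping it to ppsql. The function name and the sample DDL are illustrative:

```python
import re

# Ordered (pattern, replacement) pairs mirroring the perl one-liner above.
# Order matters: "Long Integer" must be rewritten before "Integer".
TYPE_RULES = [
    (r"Text", "VARCHAR"),
    (r"Long Integer", "INTEGER"),
    (r"Integer", "INTEGER"),
    (r"Single", "REAL"),
    (r"Double", "DOUBLE PRECISION"),
    (r"DateTime", "TIMESTAMP"),
    (r"Currency", "DECIMAL(19,4)"),
    (r"Yes/No", "BOOLEAN"),
    (r"Byte", "SMALLINT"),
    (r"Memo", "TEXT"),
]


def access_to_postgres(ddl: str) -> str:
    """Apply the same bracket removal and type rewrites as the bash pipeline."""
    ddl = ddl.replace("[", "").replace("]", "")  # same as `tr -d '[]'`
    for pattern, replacement in TYPE_RULES:
        ddl = re.sub(pattern, replacement, ddl)
    return ddl


sample = "CREATE TABLE [NUT_DATA]\n (\n\t[NDB_No]\tText (5),\n\t[Num_Data_Pts]\tLong Integer,\n\t[Nutr_Val]\tDouble\n);"
out = access_to_postgres(sample)
print(out)

# Running the generic "Integer" rule first would leave an invalid type behind:
print(re.sub("Integer", "INTEGER", "Long Integer"))  # Long INTEGER
```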

The setup performed by the referenced *.sql files is explained in the sections that follow.

Data Selection: Iron Nutrient Foods and Peer Nutrients#

USDA’s database has about a dozen tables whose parent-child relationships are explained in SR-Legacy_Doc.pdf, which comes with the database.

We created a data view to focus our investigation on a manageable subset of the data:

Food Nutrient Rows#

The iron_foods view tracks nutrient content of foods using a narrow format, where:

Each row represents a unique food-nutrient pair (F,N)

food_id and food_name refer to a 100 g edible portion of some food F

nutrient_id and nutrient_name refer to some nutrient N

For each (F,N) pair, USDA records:

The nutrient content value (nutrient_value)

The units (unit_of_measure) for nutrient_value

How the nutrient_value was determined (source_type)

If source_type = 1, the nutrient_value was measured by USDA.

If source_type is 4, 7, 8, or 9, the nutrient_value is a calculated estimate (USDA imputation, etc.).
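The pandas sketch below groups source codes into the two categories above. The code sets come from the text. The label names, the helper `label_source`, and the toy rows are hypothetical, and any code outside both sets is labeled "other":

```python
import pandas as pd

MEASURED_CODES = {"1"}
ESTIMATED_CODES = {"4", "7", "8", "9"}


def label_source(source_type: str) -> str:
    """Map a source_type code to 'measured', 'estimated' or 'other'."""
    if source_type in MEASURED_CODES:
        return "measured"
    if source_type in ESTIMATED_CODES:
        return "estimated"
    return "other"


rows = pd.DataFrame({
    "food_id": ["01001", "01003", "01004", "01005"],
    "nutrient_name": ["Iron, Fe"] * 4,
    "source_type": ["1", "4", "1", "12"],  # "12" is outside both sets
})
# source_type is stored as text (object dtype), so compare as strings
rows["source_label"] = rows["source_type"].astype(str).map(label_source)
print(rows[["food_id", "source_type", "source_label"]])
```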

query = """

SELECT *

FROM iron_foods

"""

food_nutrient_rows = read_df_from_cache_or_create(

"food_nutrient_rows",

lambda: pd.read_sql_query(query, engine)

)

# print(f"food_nutrient_rows stats:")

unique_food_count = food_nutrient_rows['food_id'].nunique()

print(f"\tfood_id unique count: {unique_food_count}")

print(f"\tfood_name unique count: {food_nutrient_rows['food_name'].nunique()}")

print(f"\tfood_group unique count: {food_nutrient_rows['food_group'].nunique()}")

print(f"\tnutrient_id unique count: {food_nutrient_rows['nutrient_id'].nunique()}")

print(f"\tnutrient_name unique count: {food_nutrient_rows['nutrient_name'].nunique()}")

# print(f"\n")

display(food_nutrient_rows.info())

food_nutrient_rows

food_id unique count: 7713

food_name unique count: 7713

food_group unique count: 25

nutrient_id unique count: 20

nutrient_name unique count: 17

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 131300 entries, 0 to 131299

Data columns (total 12 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 food_id 131300 non-null object

1 food_name 131300 non-null object

2 nutrient_id 131300 non-null object

3 nutrient_name 131300 non-null object

4 unit_of_measure 131300 non-null object

5 nutrient_value 131300 non-null float64

6 standard_error 42762 non-null float64

7 number_of_samples 131300 non-null int64

8 min_value 27931 non-null float64

9 max_value 27931 non-null float64

10 food_group 131300 non-null object

11 source_type 131300 non-null object

dtypes: float64(4), int64(1), object(7)

memory usage: 12.0+ MB


| | food_id | food_name | nutrient_id | nutrient_name | unit_of_measure | nutrient_value | standard_error | number_of_samples | min_value | max_value | food_group | source_type |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 01092 | Milk, dry, nonfat, instant, with added vitamin... | 617 | Oleic fatty acid | g | 0.156 | 0.004 | 5 | NaN | NaN | Dairy and Egg Products | 1 |

| 1 | 01092 | Milk, dry, nonfat, instant, with added vitamin... | 618 | Linoleic fatty acid | g | 0.018 | 0.004 | 5 | NaN | NaN | Dairy and Egg Products | 1 |

| 2 | 01092 | Milk, dry, nonfat, instant, with added vitamin... | 626 | Palmitoleic fatty acid | g | 0.021 | 0.002 | 5 | NaN | NaN | Dairy and Egg Products | 1 |

| 3 | 01093 | Milk, dry, nonfat, calcium reduced | 203 | Protein | g | 35.500 | NaN | 1 | NaN | NaN | Dairy and Egg Products | 1 |

| 4 | 01093 | Milk, dry, nonfat, calcium reduced | 207 | Ash | g | 7.600 | NaN | 1 | NaN | NaN | Dairy and Egg Products | 1 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 131295 | 01092 | Milk, dry, nonfat, instant, with added vitamin... | 309 | Zinc, Zn | mg | 4.410 | 0.124 | 10 | NaN | NaN | Dairy and Egg Products | 1 |

| 131296 | 01092 | Milk, dry, nonfat, instant, with added vitamin... | 312 | Copper, Cu | mg | 0.041 | NaN | 0 | NaN | NaN | Dairy and Egg Products | 1 |

| 131297 | 01092 | Milk, dry, nonfat, instant, with added vitamin... | 404 | Thiamin | mg | 0.413 | 0.006 | 31 | NaN | NaN | Dairy and Egg Products | 1 |

| 131298 | 01092 | Milk, dry, nonfat, instant, with added vitamin... | 405 | Riboflavin | mg | 1.744 | 0.040 | 31 | NaN | NaN | Dairy and Egg Products | 1 |

| 131299 | 01092 | Milk, dry, nonfat, instant, with added vitamin... | 406 | Niacin | mg | 0.891 | 0.042 | 29 | NaN | NaN | Dairy and Egg Products | 1 |

131300 rows × 12 columns

Nutrient Units of Measure#

For any given nutrient and food in the USDA database, nutrient content is reported per 100 grams of the edible portion of that food.

The units of nutrient content measurement vary by nutrient type:

Macronutrients (like protein, fat, carbohydrates): measured in grams (g)

Minerals and some vitamins: measured in milligrams (mg)

Trace elements and some vitamins: measured in micrograms (µg)

Certain vitamins (A, D, E): measured in International Units (IU)

For example, in 100 g of raw apple (edible portion only, with the core and seeds removed):

Carbohydrates would be reported in grams

Potassium would be reported in milligrams

Vitamin B12 would be reported in micrograms

Vitamin D would be reported in International Units
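Because mass units differ across nutrients, comparing or scaling nutrient columns together requires a common unit. The minimal sketch below converts g, mg, and µg to milligrams. It rejects IU values because their mass equivalent depends on the specific vitamin. The names `TO_MG` and `to_mg` are hypothetical:

```python
# Mass-unit conversion factors to milligrams. IU is deliberately absent:
# converting IU to mass depends on the specific vitamin.
TO_MG = {"g": 1000.0, "mg": 1.0, "µg": 0.001}


def to_mg(value: float, unit: str) -> float:
    """Convert a mass-based nutrient amount to milligrams."""
    if unit not in TO_MG:
        raise ValueError(f"no generic mg conversion for unit {unit!r}")
    return value * TO_MG[unit]


# Butter, salted (per 100 g): 0.85 g protein and 643 mg sodium
print(to_mg(0.85, "g"), to_mg(643.0, "mg"))
```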

The table below shows the measurement units used for nutrients in our study.

food_nutrient_rows.groupby('nutrient_name')['unit_of_measure'].unique()

nutrient_name

Ash [g]

Calcium, Ca [mg]

Copper, Cu [mg]

Iron, Fe [mg]

Linoleic fatty acid [g]

Magnesium, Mg [mg]

Niacin [mg]

Oleic fatty acid [g]

Palmitoleic fatty acid [g]

Phosphorus, P [mg]

Potassium, K [mg]

Protein [g]

Riboflavin [mg]

Sodium, Na [mg]

Thiamin [mg]

Water [g]

Zinc, Zn [mg]

Name: unit_of_measure, dtype: object

Pivot to Food Rows#

For convenience, we pivot the narrow food_nutrient_rows dataframe into a wide-format food_rows dataframe so that:

Each row represents one unique food

Columns contain information about that food (name, food group, nutrient content, etc.)
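The core reshaping step can be seen on a four-row toy input. The values are copied from the tables in this section, and the snippet uses the same `pivot_table` call as pivot_to_food_rows5:

```python
import pandas as pd

narrow = pd.DataFrame({
    "food_id": ["01001", "01001", "01004", "01004"],
    "food_name": ["Butter, salted", "Butter, salted", "Cheese, blue", "Cheese, blue"],
    "nutrient_name": ["Iron, Fe", "Protein", "Iron, Fe", "Protein"],
    "nutrient_value": [0.02, 0.85, 0.31, 21.40],
})

# One row per (food_id, food_name), one column per nutrient_name
wide = narrow.pivot_table(
    index=["food_id", "food_name"],
    columns="nutrient_name",
    values="nutrient_value",
    aggfunc="first",
).reset_index()
wide.columns.name = None
print(wide)
```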

def pivot_to_food_rows5(narrow_df):
    # Rows for the target nutrient (iron). To keep only measured iron values,
    # add: & (narrow_df['source_type'] == '1')
    selection_mask = (narrow_df['nutrient_name'] == 'Iron, Fe')
    iron_food_id = narrow_df[selection_mask]['food_id'].unique()

    # source_type of each food's iron value (measured vs. estimated)
    source_type_df = narrow_df[selection_mask][['food_id', 'source_type']]

    # All nutrient rows for foods that have an iron value
    pivot_df = narrow_df[
        narrow_df['food_id'].isin(iron_food_id)
    ][['food_id', 'food_name', 'food_group', 'nutrient_name', 'nutrient_value']]

    # Narrow to wide: one row per food, one column per nutrient
    wide_df = pivot_df.pivot_table(
        index=['food_id', 'food_name', 'food_group'],
        columns='nutrient_name',
        values='nutrient_value',
        aggfunc='first'
    ).reset_index()

    # Join source_type_df with wide_df on food_id column
    wide_df = wide_df.merge(source_type_df, on='food_id', how='inner')
    wide_df.columns.name = None

    # Identifier columns first, then nutrient columns in alphabetical order
    first_cols = ['food_id', 'food_name', 'food_group', 'source_type']
    other_cols = sorted([col for col in wide_df.columns if col not in first_cols])
    wide_df = wide_df[first_cols + other_cols]
    return wide_df

food_rows = pivot_to_food_rows5(food_nutrient_rows)
food_rows

| | food_id | food_name | food_group | source_type | Ash | Calcium, Ca | Copper, Cu | Iron, Fe | Linoleic fatty acid | Magnesium, Mg | ... | Oleic fatty acid | Palmitoleic fatty acid | Phosphorus, P | Potassium, K | Protein | Riboflavin | Sodium, Na | Thiamin | Water | Zinc, Zn |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 01001 | Butter, salted | Dairy and Egg Products | 1 | 2.11 | 24.0 | 0.000 | 0.02 | 2.728 | 2.0 | ... | 19.961 | 0.961 | 24.0 | 24.0 | 0.85 | 0.034 | 643.0 | 0.005 | 16.17 | 0.09 |

| 1 | 01002 | Butter, whipped, with salt | Dairy and Egg Products | 1 | 1.62 | 23.0 | 0.010 | 0.05 | 2.713 | 1.0 | ... | 17.370 | 1.417 | 24.0 | 41.0 | 0.49 | 0.064 | 583.0 | 0.007 | 16.72 | 0.05 |

| 2 | 01003 | Butter oil, anhydrous | Dairy and Egg Products | 4 | 0.00 | 4.0 | 0.001 | 0.00 | 2.247 | 0.0 | ... | 25.026 | 2.228 | 3.0 | 5.0 | 0.28 | 0.005 | 2.0 | 0.001 | 0.24 | 0.01 |

| 3 | 01004 | Cheese, blue | Dairy and Egg Products | 1 | 5.11 | 528.0 | 0.040 | 0.31 | 0.536 | 23.0 | ... | 6.622 | 0.816 | 387.0 | 256.0 | 21.40 | 0.382 | 1146.0 | 0.029 | 42.41 | 2.66 |

| 4 | 01005 | Cheese, brick | Dairy and Egg Products | 1 | 3.18 | 674.0 | 0.024 | 0.43 | 0.491 | 24.0 | ... | 7.401 | 0.817 | 451.0 | 136.0 | 23.24 | 0.351 | 560.0 | 0.014 | 41.11 | 2.60 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 7708 | 83110 | Fish, mackerel, salted | Finfish and Shellfish Products | 1 | 13.40 | 66.0 | 0.100 | 1.40 | 0.369 | 60.0 | ... | 4.224 | 1.495 | 254.0 | 520.0 | 18.50 | 0.190 | 4450.0 | 0.020 | 43.00 | 1.10 |

| 7709 | 90240 | Mollusks, scallop, (bay and sea), cooked, steamed | Finfish and Shellfish Products | 4 | 2.97 | 10.0 | 0.033 | 0.58 | 0.014 | 37.0 | ... | 0.053 | 0.015 | 426.0 | 314.0 | 20.54 | 0.024 | 667.0 | 0.012 | 70.25 | 1.55 |

| 7710 | 90480 | Syrup, Cane | Sweets | 1 | 0.86 | 13.0 | 0.020 | 3.60 | 0.000 | 10.0 | ... | 0.000 | 0.000 | 8.0 | 63.0 | 0.00 | 0.060 | 58.0 | 0.130 | 26.00 | 0.19 |

| 7711 | 90560 | Mollusks, snail, raw | Finfish and Shellfish Products | 1 | 1.30 | 10.0 | 0.400 | 3.50 | 0.017 | 250.0 | ... | 0.211 | 0.048 | 272.0 | 382.0 | 16.10 | 0.120 | 70.0 | 0.010 | 79.20 | 1.00 |

| 7712 | 93600 | Turtle, green, raw | Finfish and Shellfish Products | 1 | 1.20 | 118.0 | 0.250 | 1.40 | 0.033 | 20.0 | ... | 0.073 | 0.015 | 180.0 | 230.0 | 19.80 | 0.150 | 68.0 | 0.120 | 78.50 | 1.00 |

7713 rows × 21 columns

Data Source Food Rows#

To show what nutrition information the USDA has, we also load a table with one row per food F and one column per nutrient N. Each cell holds -1 if the (F,N) pair has no data, 0 if the USDA calculated or imputed the value, and 1 if the value was measured.

We built this table as a SQL view and will use it later to visualize the contents of the USDA dataset. The query below does not create the view directly. It generates the CREATE VIEW statement, with one COALESCE(MAX(CASE ...), -1) column per nutrient, and that statement is then executed:

WITH nutrient_names AS (

SELECT DISTINCT nutrient_name

FROM food_nutrient_rows

ORDER BY nutrient_name

),

pivot_columns AS (

SELECT string_agg(

format('COALESCE(MAX(CASE

WHEN nutrient_name = %L THEN

CASE

WHEN data_source = ''measured'' THEN 1

WHEN data_source = ''assumed'' THEN 0

END

END), -1) AS "n:%s"',

nutrient_name,

nutrient_name),

', '

) AS columns

FROM nutrient_names

)

SELECT

'DROP VIEW IF EXISTS data_source_food_rows cascade; ' ||

'CREATE VIEW data_source_food_rows AS ' ||

'SELECT food_id, food_name, food_group, ' ||

(SELECT columns FROM pivot_columns) ||

' FROM food_nutrient_rows GROUP BY food_id, food_name, food_group ORDER BY food_id;';
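The same -1/0/1 encoding can be sketched in pandas on a narrow table. This assumes a data_source column with the values 'measured' and 'assumed', as in the SQL. Taking the max of the mapped codes and then calling `fillna(-1)` mirrors the SQL's MAX(CASE ...) and COALESCE(..., -1). The toy rows are hypothetical:

```python
import pandas as pd

narrow = pd.DataFrame({
    "food_id": ["01001", "01001", "01003"],
    "nutrient_name": ["Iron, Fe", "Water", "Iron, Fe"],
    "data_source": ["measured", "measured", "assumed"],
})

# measured -> 1, assumed -> 0; any other label becomes NaN (like CASE -> NULL)
code = narrow["data_source"].map({"measured": 1, "assumed": 0})
status = (
    narrow.assign(code=code)
    .pivot_table(index="food_id", columns="nutrient_name", values="code", aggfunc="max")
    .fillna(-1)   # no row for this (food, nutrient) pair -> -1
    .astype(int)
    .add_prefix("n:")
)
print(status)
```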

data_source_food_rows = read_df_from_cache_or_create(

"data_source_food_rows",

lambda: pd.read_sql_query("SELECT * FROM data_source_food_rows", engine)

)

data_source_food_rows

| | food_id | food_name | food_group | n:Adrenic fatty acid | n:Alanine | n:Alcohol, ethyl | n:Alpha-linolenic fatty acid (ALA) | n:Arachidic fatty acid | n:Arachidonic fatty acid | n:Arachidonic fatty acid (ARA) | ... | n:Vitamin C, total ascorbic acid | n:Vitamin D | n:Vitamin D2 (ergocalciferol) | n:Vitamin D3 (cholecalciferol) | n:Vitamin D (D2 + D3) | n:Vitamin E, added | n:Vitamin E (alpha-tocopherol) | n:Vitamin K (phylloquinone) | n:Water | n:Zinc, Zn |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 01001 | Butter, salted | Dairy and Egg Products | -1 | 1 | 0 | 1 | 1 | 1 | -1 | ... | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 |

| 1 | 01002 | Butter, whipped, with salt | Dairy and Egg Products | 1 | 1 | 0 | 1 | 1 | 1 | -1 | ... | 1 | 0 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |

| 2 | 01003 | Butter oil, anhydrous | Dairy and Egg Products | -1 | 0 | 0 | -1 | -1 | 1 | -1 | ... | 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |

| 3 | 01004 | Cheese, blue | Dairy and Egg Products | -1 | 1 | 0 | -1 | -1 | 1 | -1 | ... | 1 | 0 | -1 | 0 | 1 | 0 | 0 | 0 | 1 | 1 |

| 4 | 01005 | Cheese, brick | Dairy and Egg Products | -1 | 1 | 0 | -1 | -1 | 1 | -1 | ... | 1 | 0 | -1 | 0 | 1 | 0 | 0 | 0 | 1 | 1 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 7788 | 83110 | Fish, mackerel, salted | Finfish and Shellfish Products | -1 | -1 | 0 | -1 | -1 | 0 | -1 | ... | 0 | 0 | -1 | 0 | 1 | 0 | 0 | 0 | 1 | 0 |

| 7789 | 90240 | Mollusks, scallop, (bay and sea), cooked, steamed | Finfish and Shellfish Products | 0 | 0 | 0 | -1 | 0 | 0 | -1 | ... | 0 | 0 | -1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 7790 | 90480 | Syrup, Cane | Sweets | -1 | -1 | 0 | -1 | -1 | 0 | -1 | ... | 1 | 0 | -1 | -1 | 0 | 0 | 0 | 0 | 1 | 1 |

| 7791 | 90560 | Mollusks, snail, raw | Finfish and Shellfish Products | -1 | -1 | 0 | -1 | -1 | 0 | -1 | ... | 0 | 0 | -1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

| 7792 | 93600 | Turtle, green, raw | Finfish and Shellfish Products | -1 | -1 | 0 | -1 | -1 | 0 | -1 | ... | 0 | 0 | -1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

7793 rows × 147 columns

SECONDARY DATASET: OpenAI’s Embedding#

For every food_name, food_group, and nutrient_name, we have OpenAI compute an embedding vector, which we save to a database table called documents.

Datasource Citation#

OpenAI’s Semantic Embedding (from “text-embedding-3-small” model) is a secondary dataset we will use to augment USDA’s food nutrient dataset:

@misc{openai_text_embedding_3_small,

author = {OpenAI},

title = {OpenAI's Embedding Model text-embedding-3-small},

year = {2024},

note = {Semantic numerical representation of food\_name and food\_group},

howpublished = {\url{https://platform.openai.com/docs/guides/embeddings}},

}

This augmentation offers a high-dimensional (1,536-dimensional) vector representation of textual attributes such as food_name, food_group, and nutrient_name.

Database Setup#

We prepare our database as follows:

Database Setup:

Enable the vector extension for PostgreSQL to handle embeddings

Create a documents table that stores text content and its 1536-dimensional vector representation (embedding)

Performance Optimization:

Create an IVF-Flat index for fast similarity searches

Use cosine distance (1 minus cosine similarity) as the distance metric

Set 400 lists (clusters) for the index organization. The rule of thumb in the SQL comment below is 4 × √(rows); for about 7,777 rows this gives 4 × 88.2 ≈ 353, and the setup uses 400

IVF-Flat divides vectors into clusters to speed up searches

Search Function:

Create a match_documents function that:

Takes a query vector, a similarity threshold, and the number of matches to return

Uses the cosine distance operator (<=>) to find similar documents

Returns documents ordered by similarity, filtering out low-similarity matches

Returns the document ID, content, and calculated similarity score

This one-time database setup lets SQL queries efficiently find food terms that are conceptually similar, rather than only exact text matches.
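A toy NumPy version makes the IVF-Flat idea concrete. At build time, vectors are clustered into lists. At query time, only the closest list or lists are scanned. This illustrates the technique and is not pgvector's implementation. pgvector's `ivfflat.probes` setting (1 by default) plays the role of `n_probes` here. All names are hypothetical:

```python
import numpy as np


def normalize(v):
    """Scale vectors to unit length so dot products equal cosine similarity."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)


def build_ivf(vectors, n_lists, n_iters=10, seed=0):
    """Toy IVF-Flat build: spherical k-means, then one id list per centroid."""
    rng = np.random.default_rng(seed)
    X = normalize(np.asarray(vectors, dtype=float))
    centroids = X[rng.choice(len(X), size=n_lists, replace=False)]
    for _ in range(n_iters):
        assign = np.argmax(X @ centroids.T, axis=1)  # nearest centroid by cosine
        for k in range(n_lists):
            members = X[assign == k]
            if len(members):
                centroids[k] = normalize(members.mean(axis=0))
    assign = np.argmax(X @ centroids.T, axis=1)
    lists = {k: np.flatnonzero(assign == k) for k in range(n_lists)}
    return X, centroids, lists


def ivf_search(index, query, k=5, n_probes=1):
    """Scan only the n_probes lists whose centroids are closest to the query."""
    X, centroids, lists = index
    q = normalize(np.asarray(query, dtype=float))
    probe = np.argsort(-(centroids @ q))[:n_probes]
    candidates = np.concatenate([lists[p] for p in probe])
    sims = X[candidates] @ q
    best = np.argsort(-sims)[:k]
    return candidates[best], sims[best]


rng = np.random.default_rng(42)
vectors = rng.normal(size=(1000, 16))
index = build_ivf(vectors, n_lists=20)
ids, sims = ivf_search(index, vectors[0], k=5, n_probes=1)
print(ids, np.round(sims, 3))
```

Probing more lists trades speed for recall; probing every list reduces to an exact scan. Because the centroids come from whatever rows exist when the index is built, pgvector's documentation recommends creating an IVF-Flat index after the table has data. An index created on an empty documents table may therefore give poor recall until it is rebuilt.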

create extension vector;

drop table if exists documents cascade;

create table documents (

id bigserial primary key,

content text,

embedding vector(1536)

);

create index on documents using ivfflat (embedding vector_cosine_ops)

with

(lists = 400); -- sqrt(7777)*4

-- Each distance operator requires a different type of index.

-- We expect to order by cosine distance, so we need vector_cosine_ops index.

-- A good starting number of lists is 4 * sqrt(table_rows):

create or replace function match_documents (

query_embedding vector(1536),

match_threshold float,

match_count int

)

returns table (

id bigint,

content text,

similarity float

)

language sql stable

as $$

select

documents.id,

documents.content,

1 - (documents.embedding <=> query_embedding) as similarity

from documents

where documents.embedding <=> query_embedding < 1 - match_threshold

order by documents.embedding <=> query_embedding

limit match_count;

$$;
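For intuition, the sketch below computes what match_documents returns, using an exact brute-force scan in NumPy. The IVF-Flat index trades some of this exactness for speed. pgvector's `<=>` operator returns cosine distance, i.e. 1 minus cosine similarity. The toy two-dimensional vectors and labels are hypothetical stand-ins for real 1536-dimensional embeddings:

```python
import numpy as np


def match_documents(query_embedding, embeddings, contents, match_threshold, match_count):
    """Brute-force NumPy analogue of the SQL function above. Returns
    (row position, content, similarity) tuples, best match first."""
    E = np.asarray(embeddings, dtype=float)
    q = np.asarray(query_embedding, dtype=float)
    similarity = (E @ q) / (np.linalg.norm(E, axis=1) * np.linalg.norm(q))
    distance = 1.0 - similarity  # what <=> returns
    keep = np.flatnonzero(distance < 1.0 - match_threshold)  # the WHERE clause
    keep = keep[np.argsort(distance[keep], kind="stable")][:match_count]  # ORDER BY ... LIMIT
    return [(int(i), contents[i], float(similarity[i])) for i in keep]


contents = ["a", "b", "c"]
embeddings = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]]
print(match_documents([1.0, 0.0], embeddings, contents, match_threshold=0.5, match_count=10))
```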

Embedding Inputs#

Computing embeddings is a one-time process that reads the strings still needing embeddings from the einput database view:

CREATE VIEW einput AS WITH dummy AS

(SELECT 1),

fnames AS

(SELECT food_name AS einput

FROM iron_foods),

nnames AS

(SELECT nutrient_name AS einput

FROM iron_foods),

gnames AS

(SELECT food_group AS einput

FROM iron_foods),

tunion AS

(SELECT einput

FROM fnames

UNION SELECT einput

FROM nnames

UNION SELECT einput

FROM gnames)

SELECT einput

FROM tunion

WHERE TRUE

AND einput NOT IN

(SELECT content

FROM documents);

The einput view creates a list of text entries to be added to our documents table:

It pulls three types of text strings from the iron_foods view we looked at earlier:

Food names (e.g., “Spinach, raw”)

Nutrient names (e.g., “Iron, Fe”)

Food group names (e.g., “Vegetables and Vegetable Products”)

It combines all these strings into one list using UNION

Finally, it filters out any strings that already exist in the documents table

The end result is a list of food names, nutrient names, and food group names from the iron_foods view that have not yet been added to the documents table.
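The view's final filter uses NOT IN. In SQL, `x NOT IN (subquery)` is never true when the subquery yields a NULL. A single documents row with NULL content would therefore silently empty einput, while NOT EXISTS does not have this problem. The self-contained demonstration below uses SQLite from Python's standard library; PostgreSQL follows the same NULL semantics here. The tables are toy stand-ins:

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
    CREATE TABLE tunion (einput TEXT);
    CREATE TABLE documents (content TEXT);
    INSERT INTO tunion VALUES ('Iron, Fe'), ('Spinach, raw');
    INSERT INTO documents VALUES ('Iron, Fe'), (NULL);  -- one NULL content row
""")

not_in = con.execute(
    "SELECT einput FROM tunion WHERE einput NOT IN (SELECT content FROM documents)"
).fetchall()
not_exists = con.execute(
    "SELECT einput FROM tunion t WHERE NOT EXISTS "
    "(SELECT 1 FROM documents d WHERE d.content = t.einput)"
).fetchall()
print(not_in, not_exists)  # [] [('Spinach, raw',)]
```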

Embedding API ETL#

Setup:

Establish connections to Supabase (a PostgreSQL database service) and OpenAI’s API

Extract (get_documents):

Retrieve food-related terms from the einput view we looked at earlier

Use pagination to handle large datasets (100 records at a time)

Include retry logic to handle potential Supabase API failures

Transform (get_embedding):

Take each food-related term

Send it to OpenAI’s text-embedding-3-small model

Get back a numerical vector representation (embedding) of the term

Load:

Store each term and its embedding in the documents table. This is why the einput view filters against the documents table: it avoids processing the same term twice.

Once this process completes, our database is loaded with embedding vectors:

```python
import time

import openai
from google.colab import userdata
from supabase import create_client, Client

supabase_url = "https://tcfushkpetfaqsorgwww.supabase.co"
supabase_key = "XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX"

# Initialize Supabase client
supabase: Client = create_client(supabase_url, supabase_key)

# Initialize OpenAI client with the key stored in Colab secrets
api_key = userdata.get('OPENAI_API_KEY')
client = openai.OpenAI(api_key=api_key)

def get_embedding(text, model="text-embedding-3-small"):
    """Return the embedding vector (a list of floats) for one term."""
    text = text.replace("\n", " ")
    return client.embeddings.create(input=[text], model=model).data[0].embedding

def get_documents():
    """Read every term from the `einput` view one page at a time, retrying failed pages."""
    all_documents = []
    page_size = 100  # Reduced from 1000 to 100 to process smaller chunks
    start = 0
    max_retries = 3

    while True:
        retry_count = 0
        while retry_count < max_retries:
            try:
                response = (
                    supabase.table('einput')
                    .select('einput')
                    .range(start, start + page_size - 1)  # both bounds are inclusive
                    .execute()
                )
                documents = [record['einput'] for record in response.data]
                all_documents.extend(documents)

                # If we got less than a full page, we're done
                if len(documents) < page_size:
                    return all_documents

                # Move to next page
                start += page_size
                break  # Break retry loop on success
            except Exception as e:
                retry_count += 1
                if retry_count == max_retries:
                    print(f"Failed after {max_retries} attempts. Error: {str(e)}")
                    # Return what we have so far
                    return all_documents
                print(f"Retry {retry_count} after error: {str(e)}")
                time.sleep(2)  # Wait 2 seconds before retrying

def generate_embeddings():
    documents = get_documents()  # Load documents to process
    for document in documents:
        embedding = get_embedding(document)
        data = {
            "content": document,
            "embedding": embedding
        }
        supabase.table("documents").insert(data).execute()

generate_embeddings()
```
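The `generate_embeddings` loop above makes one API request and one database insert per term. The OpenAI embeddings endpoint also accepts a list of input strings, so terms can be embedded in batches. The sketch below shows only that batching step, under stated assumptions: `batched` and `embed_in_batches` are hypothetical helpers, and the embedding call is passed in as a function so the logic can run offline. Like `get_embedding`, it sends newline-stripped text to the model while keeping the original term as `content`.

```python
from typing import Callable, Dict, Iterator, List, Sequence

def batched(items: Sequence[str], size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]

def embed_in_batches(
    terms: Sequence[str],
    embed_batch: Callable[[List[str]], List[List[float]]],
    batch_size: int = 100,
) -> List[Dict[str, object]]:
    """Embed terms in batches; return rows shaped like the `documents` table."""
    rows: List[Dict[str, object]] = []
    for chunk in batched(list(terms), batch_size):
        cleaned = [t.replace("\n", " ") for t in chunk]
        vectors = embed_batch(cleaned)
        if len(vectors) != len(chunk):
            raise ValueError("embedding count does not match input count")
        rows.extend({"content": t, "embedding": v} for t, v in zip(chunk, vectors))
    return rows

# With the OpenAI client defined above (not executed here), embed_batch could be:
#   lambda texts: [d.embedding for d in sorted(
#       client.embeddings.create(input=texts, model="text-embedding-3-small").data,
#       key=lambda d: d.index)]

# Offline stand-in: records each batch size and returns a 1-dimensional "embedding"
calls: List[int] = []

def fake_embed(texts: List[str]) -> List[List[float]]:
    calls.append(len(texts))
    return [[float(len(t))] for t in texts]

rows = embed_in_batches(["Glycine", "Hydroxyproline\n", "14:0"], fake_embed, batch_size=2)
```

The resulting list of dicts could then be written in one call, since supabase-py's `insert` also accepts a list of records; check any request-size limits on your project before sending large batches.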

Load Embeddings#

We retrieve the embeddings from the database in batches of 1,000 rows (pagination). Each query uses `ORDER BY id` with `LIMIT` and `OFFSET`, so consecutive pages neither overlap nor skip rows, and keeping each request small helps avoid query timeouts and server overload.

```python
import pandas as pd

def get_embeddings(engine, batch_size=1000):
    """
    Retrieve all embeddings using pagination to avoid timeout.
    """
    count_query = "SELECT COUNT(*) FROM documents"
    total_records = pd.read_sql_query(count_query, engine).iloc[0, 0]
    dfs = []

    # Paginate through the results
    for offset in range(0, total_records, batch_size):
        query = f"""
            SELECT content, embedding::text
            FROM documents
            ORDER BY id -- Ensure consistent ordering
            LIMIT {batch_size}
            OFFSET {offset};
        """
        try:
            batch_df = pd.read_sql_query(query, engine)
            dfs.append(batch_df)
            # Optional: Print progress
            print(f"Fetched {min(offset + batch_size, total_records)} of {total_records} records")
        except Exception as e:
            # A failed batch is skipped, so the result can be missing rows
            print(f"Error fetching batch at offset {offset}: {str(e)}")
            continue

    # Combine all batches into a single DataFrame
    if not dfs:
        raise Exception("No data was retrieved from the database")
    final_df = pd.concat(dfs, ignore_index=True)
    return final_df

# doc_embed = get_embeddings(engine, batch_size=1000)
doc_embed = read_df_from_cache_or_create(
    "doc_embed",
    lambda: get_embeddings(engine, batch_size=1000)
)
# engine.dispose()
# doc_embed.shape
doc_embed
```

| | content | embedding |

|---|---|---|

| 0 | 14:0 | [0.009029812,-0.063059315,-0.019838428,0.01334... |

| 1 | 16:0 | [0.010453682,-0.03951149,-0.00312925,0.0124210... |

| 2 | 16:1 undifferentiated | [0.012321927,0.009563978,0.00871419,0.02277431... |

| 3 | 18:0 | [-0.022437058,-0.044308595,-0.031584367,0.0210... |

| 4 | 18:1 undifferentiated | [-0.015148849,0.01356421,-0.012176703,0.027537... |

| ... | ... | ... |

| 7964 | Glycine | [-0.03623759,-0.004581659,-0.037606895,0.02060... |

| 7965 | Eicosadienoic fatty acid | [0.006372733,0.0032185817,-0.017489873,0.02574... |

| 7966 | Alcoholic Beverage, wine, table, red, Gamay | [-0.046902906,-0.014858377,-0.01236847,-0.0092... |

| 7967 | Lignoceric fatty acid | [-0.032019954,0.024238927,-0.06936377,-0.01990... |

| 7968 | Hydroxyproline | [-0.017597416,-0.012690569,0.012697034,0.04271... |

7969 rows × 2 columns
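`get_embeddings` pages through `documents` with `ORDER BY id LIMIT n OFFSET k`. The sketch below reproduces that pattern on a throwaway in-memory SQLite table (an assumption for illustration; the project itself queries Postgres through Supabase) and compares it with keyset pagination (`WHERE id > last_id`), a common alternative that the code above does not use. Both return the same rows; keyset pagination avoids re-reading the skipped rows as the offset grows, which matters more for tables much larger than the ~8,000 rows here.

```python
import sqlite3

def fetch_offset(conn, batch_size):
    """LIMIT/OFFSET pagination in a stable id order, as in get_embeddings."""
    total = conn.execute("SELECT COUNT(*) FROM documents").fetchone()[0]
    rows = []
    for offset in range(0, total, batch_size):
        rows += conn.execute(
            "SELECT id, content FROM documents ORDER BY id LIMIT ? OFFSET ?",
            (batch_size, offset),
        ).fetchall()
    return rows

def fetch_keyset(conn, batch_size):
    """Keyset pagination: continue after the last id seen instead of skipping rows."""
    rows, last_id = [], 0
    while True:
        batch = conn.execute(
            "SELECT id, content FROM documents WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not batch:
            return rows
        rows += batch
        last_id = batch[-1][0]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE documents (id INTEGER PRIMARY KEY, content TEXT)")
conn.executemany("INSERT INTO documents (content) VALUES (?)",
                 [(f"term {i}",) for i in range(2500)])

offset_rows = fetch_offset(conn, batch_size=1000)
keyset_rows = fetch_keyset(conn, batch_size=1000)
```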

Merge Embeddings#

We merge the embedding vectors into our USDA nutritional data by matching each food's `food_name` to an embedded term (`content`):

```python
import numpy as np
import pandas as pd

def split_emb(df):
    """Parse the text form '[0.1,0.2,...]' of each embedding into a float32 NumPy array."""
    df = df.copy()
    df['embedding'] = df['embedding'].apply(
        lambda x: np.array(x.strip('[]').split(','), dtype=np.float32)
    )
    return df

def merge_foods_embeddings(df, raw_embeddings):
    merged_df = df.merge(
        raw_embeddings,
        left_on='food_name',  # For the present study only food name embeddings are used.
        right_on='content',
        how='inner'  # foods without a matching embedding are dropped
    )
    # Drop the duplicate content column since it's the same as food_name
    merged_df = merged_df.drop(columns=['content'])
    return merged_df

doc_embed2 = split_emb(df=doc_embed)
print(f"Data shape BEFORE embedding: {food_rows.shape}")
food_rows = merge_foods_embeddings(food_rows, doc_embed2)
print(f"Data shape AFTER embedding: {food_rows.shape}")
# food_rows.info()
# food_rows
```

Data shape BEFORE embedding: (7713, 21)

Data shape AFTER embedding: (7713, 22)

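Each embedding vector is stored as a single object (a NumPy array) in one cell, so the merge adds just one column: the shape goes from 21 to 22 columns while the row count (7,713) stays the same, indicating that every food name matched an embedded term.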
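Most scikit-learn style models expect one numeric column per feature, so the single `embedding` column has to be expanded before training. A minimal sketch, assuming the column holds equal-length NumPy arrays as produced by `split_emb`; `expand_embeddings` and the `emb_` prefix are hypothetical names, and the 4-dimensional toy vectors stand in for the 1,536-dimensional vectors that `text-embedding-3-small` returns by default.

```python
import numpy as np
import pandas as pd

def expand_embeddings(df, col="embedding", prefix="emb_"):
    """Replace an object column of equal-length vectors with one float column per dimension."""
    matrix = np.vstack(df[col].to_numpy())  # shape: (n_rows, n_dims)
    emb_cols = pd.DataFrame(
        matrix,
        index=df.index,
        columns=[f"{prefix}{i}" for i in range(matrix.shape[1])],
    )
    return pd.concat([df.drop(columns=[col]), emb_cols], axis=1)

# Toy frame with made-up 4-dimensional vectors
toy = pd.DataFrame({
    "food_name": ["Rice crackers", "Pears, asian, raw", "Spices, thyme, dried"],
    "embedding": [
        np.array([0.1, 0.2, 0.3, 0.4], dtype=np.float32),
        np.array([0.0, -0.1, 0.5, 0.2], dtype=np.float32),
        np.array([0.3, 0.3, -0.2, 0.1], dtype=np.float32),
    ],
})
wide = expand_embeddings(toy)
```

Applied to `food_rows`, the same call would turn the one `embedding` column into one column per embedding dimension.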

EXPLORATORY DATA ANALYSIS#

Data Codebook#

The entire USDA Food Nutrition database is too complex to cover here; the key fields used in this analysis are summarized below.

food_id: 5-digit NDB number that uniquely identifies a food item (the leading zero is lost when this field is stored as a number)

food_name: description of the food item (up to 200 characters)

food_group: Name of food group, categorized as follows:

- Vegetables and Vegetable Products

- Beef Products

- Lamb, Veal, and Game Products

- Baked Products

- Poultry Products

- Fruits and Fruit Juices

- Pork Products

- Fast Foods

- Finfish and Shellfish Products

- Dairy and Egg Products

- Soups, Sauces, and Gravies

- Legumes and Legume Products

- Beverages

- Cereal Grains and Pasta

- Baby Foods

- Nut and Seed Products

- Sausages and Luncheon Meats

- Sweets

- Snacks

- Restaurant Foods

- American Indian/Alaska Native Foods

- Fats and Oils

- Meals, Entrees, and Side Dishes

- Spices and Herbs

- Breakfast Cereals

Nutrient names and units of measure:

- Calcium, Ca: mg
- Carbohydrate, by difference: g
- Cholesterol: mg
- Copper, Cu: mg
- Fatty acids, total monounsaturated: g
- Fatty acids, total polyunsaturated: g
- Fatty acids, total saturated: g
- Fiber, total dietary: g
- Iron, Fe: mg
- Linoleic fatty acid: g
- Magnesium, Mg: mg
- Niacin: mg
- Oleic fatty acid: g
- Phosphorus, P: mg
- Potassium, K: mg
- Protein: g
- Riboflavin: mg
- Sodium, Na: mg
- Thiamin: mg
- Total lipid (fat): g
- Vitamin A, IU: IU
- Vitamin B-12: µg
- Vitamin B-6: mg
- Vitamin C, total ascorbic acid: mg
- Zinc, Zn: mg
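Because `food_id` loses its leading zero when stored as a number, joins against sources that keep the 5-character NDB string can silently fail to match. A minimal pandas sketch of restoring the 5-digit form (the IDs below are illustrative):

```python
import pandas as pd

# Numeric IDs as they appear after the leading zero has been dropped
food_id = pd.Series([1001, 11090, 2003], name="food_id")

# Convert to string and left-pad with zeros to the 5-digit NDB format
food_id_str = food_id.astype(str).str.zfill(5)
```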

Histograms of Nutrient Content#

```python
import matplotlib.pyplot as plt
import numpy as np
import seaborn as sns

def plot_iron_distribution_log(df):
    plt.figure(figsize=(15, 5))
    iron_values = df['Iron, Fe'].dropna()

    # Log-spaced bins need a positive lower edge, so it is clamped to eps.
    # Values below eps (including zeros) fall outside the bins and are not drawn.
    eps = 1e-3
    min_value = max(iron_values.min(), eps)  # Ensure minimum is positive
    max_value = iron_values.max()
    bins = np.logspace(np.log10(min_value), np.log10(max_value), 23)

    sns.histplot(data=iron_values, bins=bins, color='darkred', alpha=0.6, kde=False)
    plt.title('Histogram of Measured Iron Content for USDA Tracked Foods', size=14, pad=15)
    plt.xlabel('Iron Content (mg/100g)', size=12)
    plt.ylabel('Frequency', size=12)
    plt.xscale('log')
    plt.yscale('log')

    # Summary statistics use all values, including zeros
    stats_text = f'Mean: {iron_values.mean():.2f} mg\n'
    stats_text += f'Median: {iron_values.median():.2f} mg\n'
    stats_text += f'Std Dev: {iron_values.std():.2f} mg'

    # Position text box in upper right
    plt.text(0.95, 0.95, stats_text,
             transform=plt.gca().transAxes,
             verticalalignment='top',
             horizontalalignment='right',
             bbox=dict(boxstyle='round', facecolor='white', alpha=0.8))
    plt.tight_layout()
    plt.show()

plot_iron_distribution_log(food_rows)
```

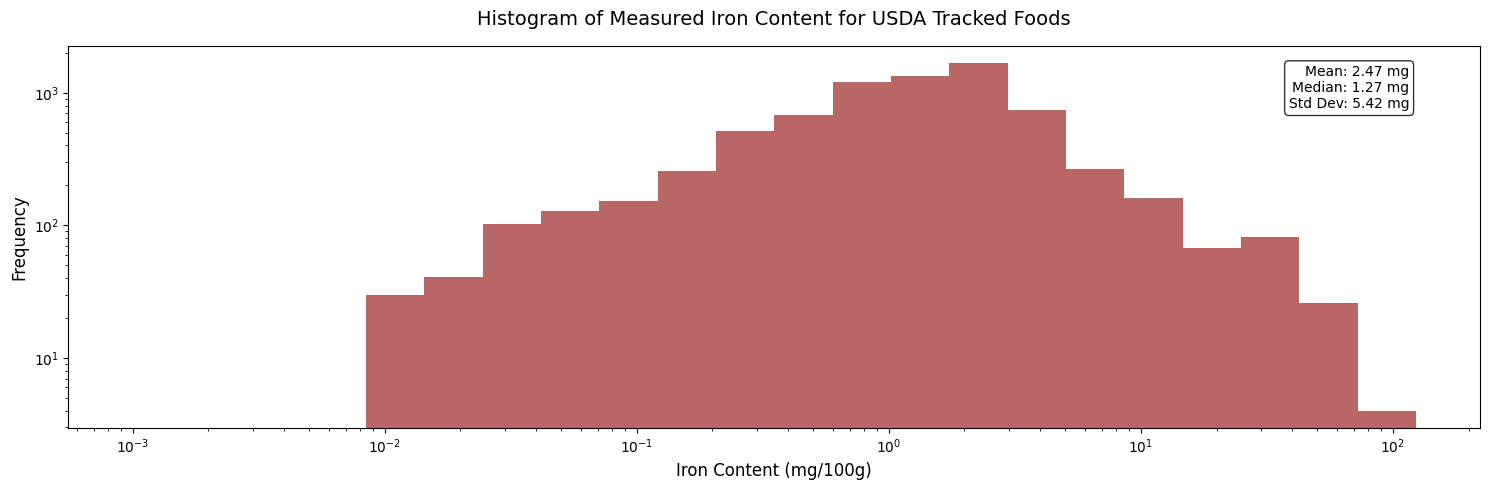

This histogram shows the distribution of iron content across USDA-tracked foods, with both axes on a logarithmic scale.

A few key observations:

The distribution is right-skewed: most foods contain relatively little iron (median 1.27 mg/100g).

The spread is large (standard deviation 5.42 mg/100g, more than twice the mean).

The mean (2.47 mg/100g) is nearly twice the median, indicating that a small number of iron-rich foods pull the average up.

Most foods appear to contain between 0.1 and 10 mg of iron per 100g.

Relatively few foods contain more than 20 mg/100g.
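One consequence of the log-spaced bins in `plot_iron_distribution_log` is that the first bin edge is `eps`, so foods with 0 mg/100g fall below it and are left out of the histogram, even though the mean, median, and standard deviation in the text box include them. The sketch below counts how many values are excluded, using a few illustrative values rather than USDA data. It also pins the last edge to the data maximum, a precaution that is not in the original code, because `10**log10(x)` is not guaranteed to reproduce `x` exactly in floating point.

```python
import numpy as np

iron = np.array([0.0, 0.0, 0.05, 1.27, 2.5, 30.0, 123.6])  # illustrative mg/100g values

eps = 1e-3
bins = np.logspace(np.log10(max(iron.min(), eps)), np.log10(iron.max()), 23)
bins[-1] = iron.max()  # guard against round-off pushing the maximum outside the last bin

counts, _ = np.histogram(iron, bins=bins)
n_excluded = int((iron < bins[0]).sum())  # zeros sit below the first log-spaced edge
print(f"{counts.sum()} values binned, {n_excluded} excluded")
```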

```python
from itertools import product

import matplotlib.pyplot as plt
import numpy as np
import seaborn as sns

def plot_nutrient_matrix(df, nutrient_limit=3, food_group_limit=3, figsize=(20, 20)):
    # Sort nutrients and food groups by how many rows each has
    nutrients = df['nutrient_name'].value_counts().index.tolist()
    food_groups = df['food_group'].value_counts().index.tolist()

    # Apply limits if specified
    if nutrient_limit:
        nutrients = nutrients[:nutrient_limit]
    if food_group_limit:
        food_groups = food_groups[:food_group_limit]

    fig, axes = plt.subplots(len(food_groups), len(nutrients), figsize=figsize, squeeze=False)
    plt.subplots_adjust(hspace=0.4, wspace=0.4)

    eps = 0.005  # positive lower bound for the log-spaced bins and the log y axis
    for (i, fg), (j, nut) in product(enumerate(food_groups), enumerate(nutrients)):
        ax = axes[i, j]
        mask = (df['food_group'] == fg) & (df['nutrient_name'] == nut)
        values = df.loc[mask, 'nutrient_value'].dropna()

        # Skip empty panels and panels whose values are all below eps (log10 would fail)
        if len(values) > 0 and values.max() > eps:
            min_value = max(values.min(), eps)
            max_value = values.max()
            # 37 log-spaced bins spanning this panel's own data range
            bins = np.logspace(np.log10(min_value), np.log10(max_value), 37)
            sns.histplot(data=values, bins=bins, color='darkred', alpha=0.7, kde=False, ax=ax)

        # Same scales and limits in every panel
        ax.set_xscale('log')
        ax.set_yscale('log')
        ax.set_xlim(0.004, 5000)
        ax.set_ylim(eps, 1000)

        if i != len(food_groups) - 1:
            ax.set_xlabel('')
        if j != 0:
            ax.set_ylabel('')
        ax.grid(True, alpha=0.3)
        ax.tick_params(labelsize=8)

    # Nutrient names as column titles, food groups as row labels
    for ax, col in zip(axes[0], nutrients):
        ax.set_title(col, rotation=45, ha='left', size=10)
    for ax, row in zip(axes[:, 0], food_groups):
        ax.set_ylabel(row, rotation=45, ha='right', size=10)

    plt.tight_layout()
    return fig, axes

# Usage:
fig, axes = plot_nutrient_matrix(food_nutrient_rows, nutrient_limit=12, food_group_limit=16)
plt.show()
```

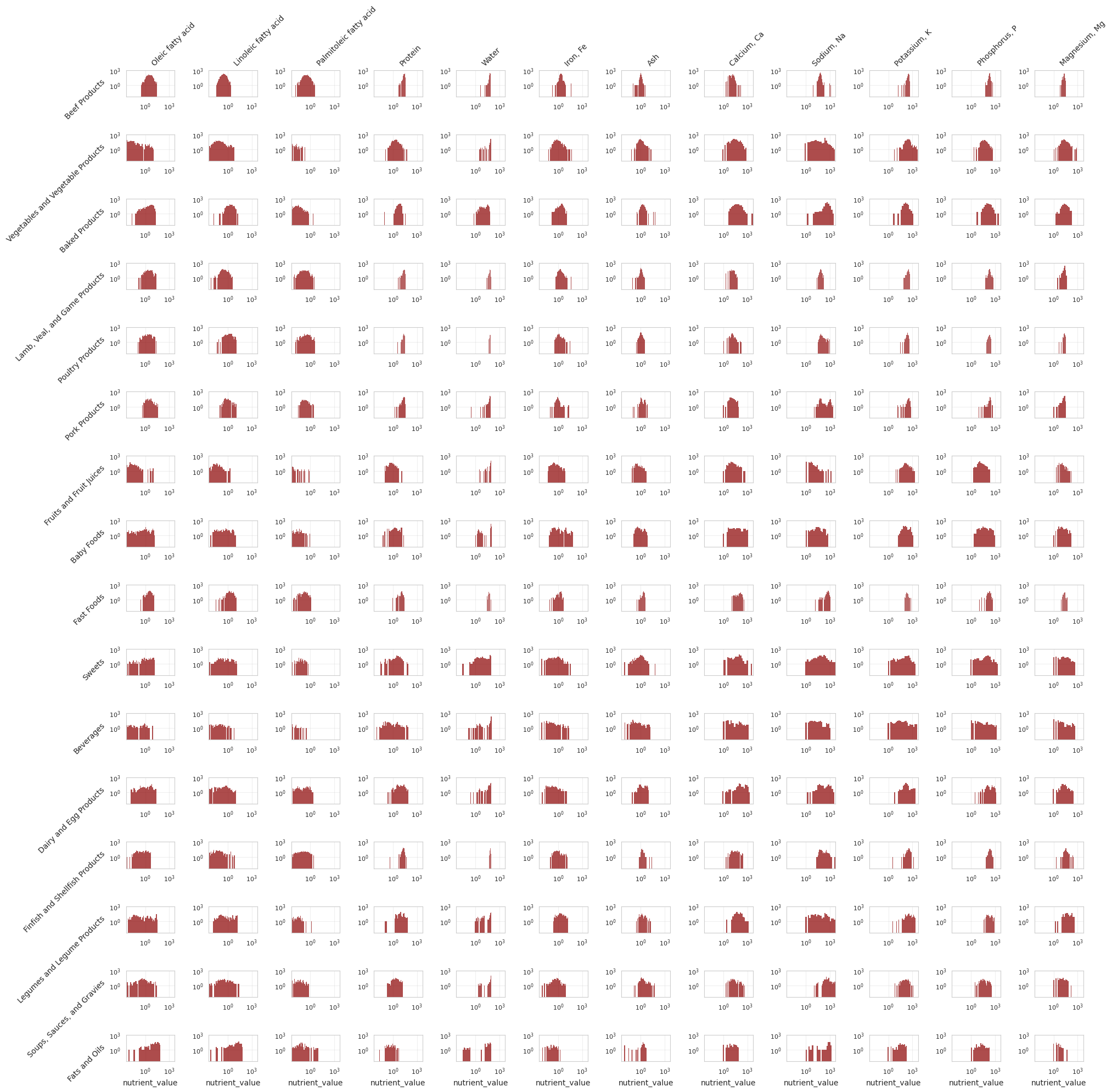

The single histogram above can be extended into a grid of histograms with:

- Nutrient types as column headers (rotated 45 degrees)
- Food group categories as row labels (rotated 45 degrees)

Each individual plot contains:

- A histogram with dark red, semi-transparent bars
- Logarithmic scales on both x and y axes, with identical limits in every plot
- Y axis (counts): fixed with `ax.set_ylim(eps, 1000)`, where `eps = 0.005`, because a log axis cannot start at 0; bin counts span about three orders of magnitude (1 to 1,000)
- X axis: fixed with `ax.set_xlim(0.004, 5000)`, about six orders of magnitude
- X-axis units that do not change within a column, because each column shows a single nutrient
- X-axis units that may change across columns, because each column shows a different nutrient. See the nutrient units listed in the Data Codebook (above) for how each nutrient is measured.

The grid allows quick visual comparison across nutrient and food group combinations, with each histogram showing the distribution of values for that specific pairing. Log scales accommodate the wide range of values present in the data. Because every histogram uses the same axis limits and the same number of log-spaced bins, the amount of red in each panel gives a rough visual measure of how much data is available at the intersection of each nutrient and food group (bin widths still follow each panel's own data range).
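The "amount of red" reading can be made exact by counting non-missing values for each food group and nutrient. A minimal sketch with `pd.crosstab`, assuming a long-format table with the `food_group`, `nutrient_name`, and `nutrient_value` columns used by `plot_nutrient_matrix`; the toy rows are made up:

```python
import pandas as pd

long_rows = pd.DataFrame({
    "food_group": ["Beverages", "Beverages", "Sweets", "Sweets", "Sweets"],
    "nutrient_name": ["Iron, Fe", "Protein", "Iron, Fe", "Iron, Fe", "Protein"],
    "nutrient_value": [0.1, None, 2.0, 3.5, 4.0],
})

# Count only rows that actually carry a value; absent combinations become 0
observed = long_rows.dropna(subset=["nutrient_value"])
availability = pd.crosstab(observed["food_group"], observed["nutrient_name"])
```

Run on `food_nutrient_rows`, each cell would give the number of values behind the corresponding panel.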

Looking at the patterns in this nutrient distribution visualization, several interesting features stand out:

Distribution Shapes:

Many nutrients show distinct “spikes” (sharp, narrow peaks) or single-peaked distributions within specific food groups

Some distributions are broad and spread across multiple orders of magnitude

Certain combinations show multi-modal patterns, suggesting possible subgroups within food categories

Density Patterns:

Some food groups (like dairy products and egg products) show very concentrated distributions for certain nutrients

Others have more diffuse patterns with long tails, indicating wide variability in nutrient content

Empty or nearly empty plots show nutrient and food group combinations with little or no data

Scale Variations:

The log-scale histograms reveal that nutrient concentrations often span 3-4 orders of magnitude

Some nutrients show remarkably consistent concentration ranges across different food groups

Others vary dramatically between food groups

Possible explanations for these patterns:

Processing Effects:

Sharp peaks might indicate standardized food processing or fortification

Broader distributions could reflect natural variation in unprocessed foods

Biological Constraints:

Some nutrients may have narrow concentration ranges due to biochemical limitations

Others might vary widely based on growing conditions or animal feed

Measurement/Reporting Factors:

Very sharp peaks could reflect standardized reporting rather than actual variation

Some patterns might be artifacts of measurement methods or regulatory requirements

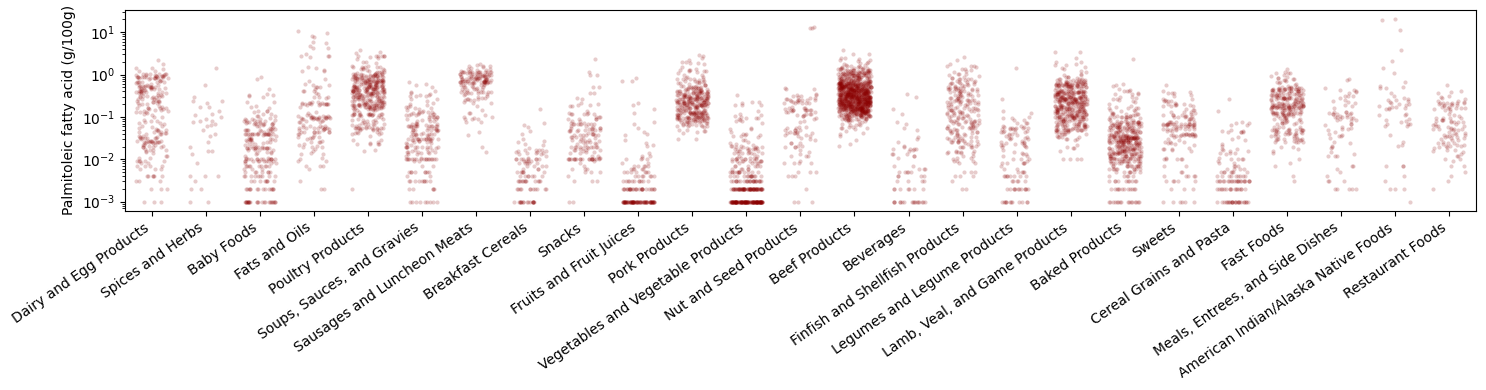

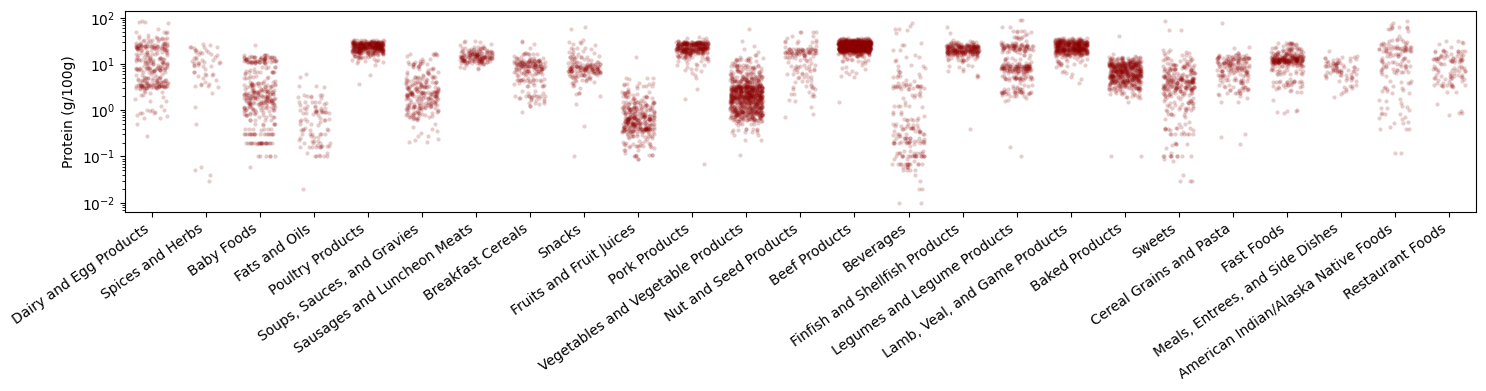

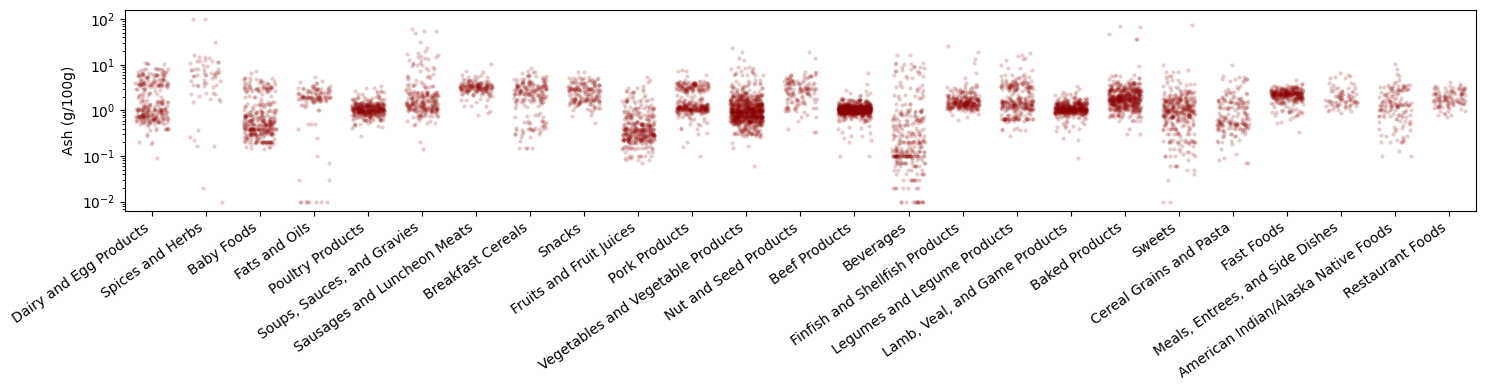

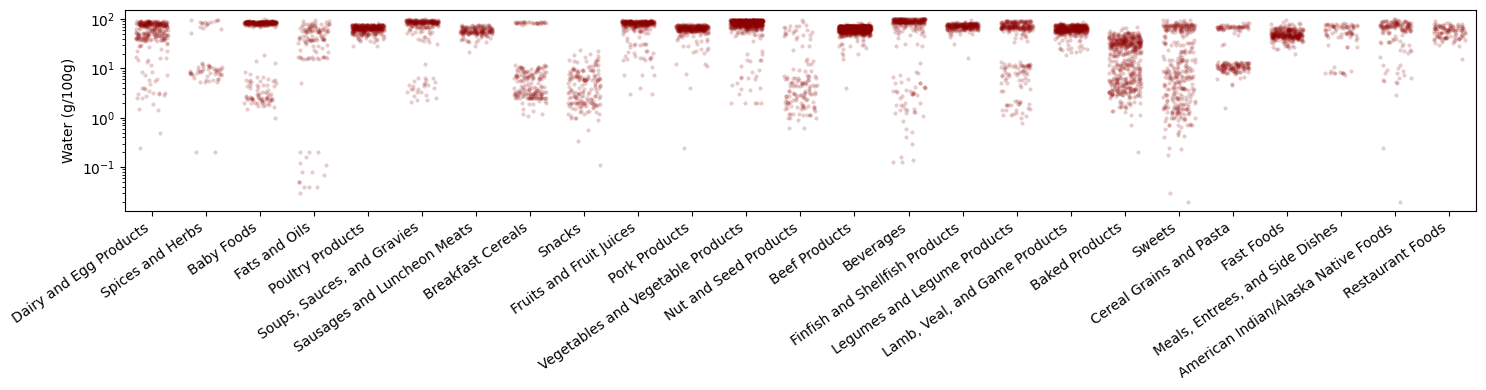

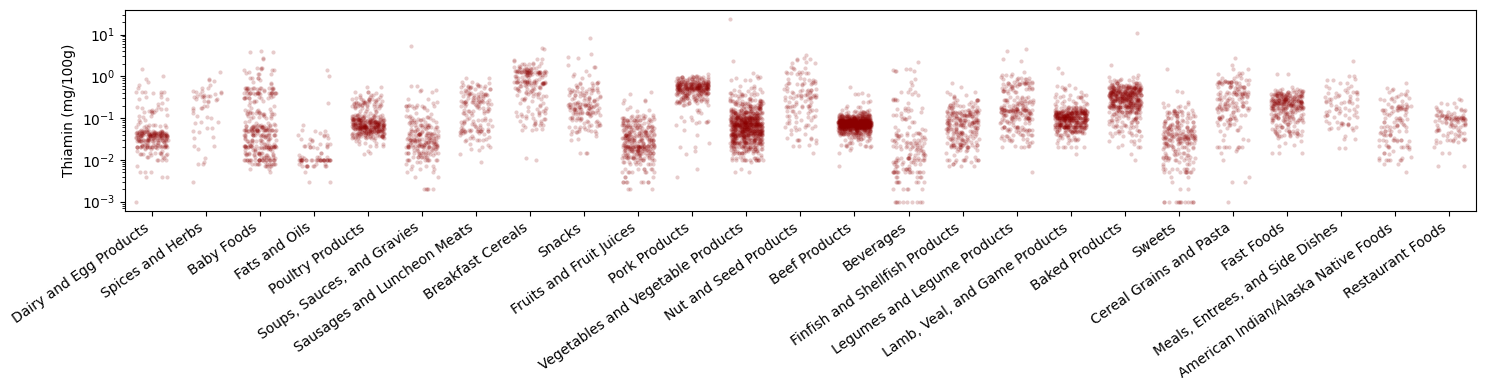

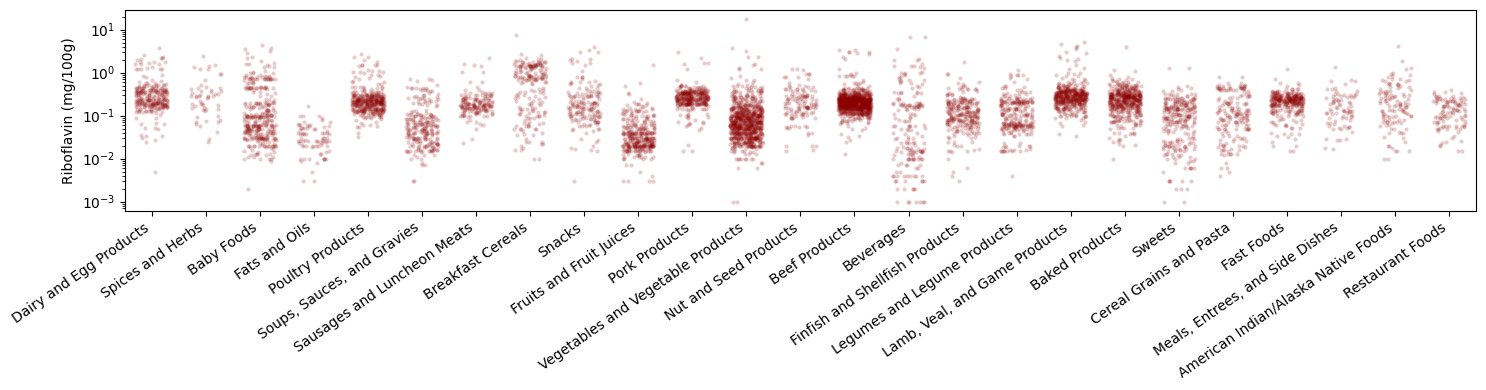

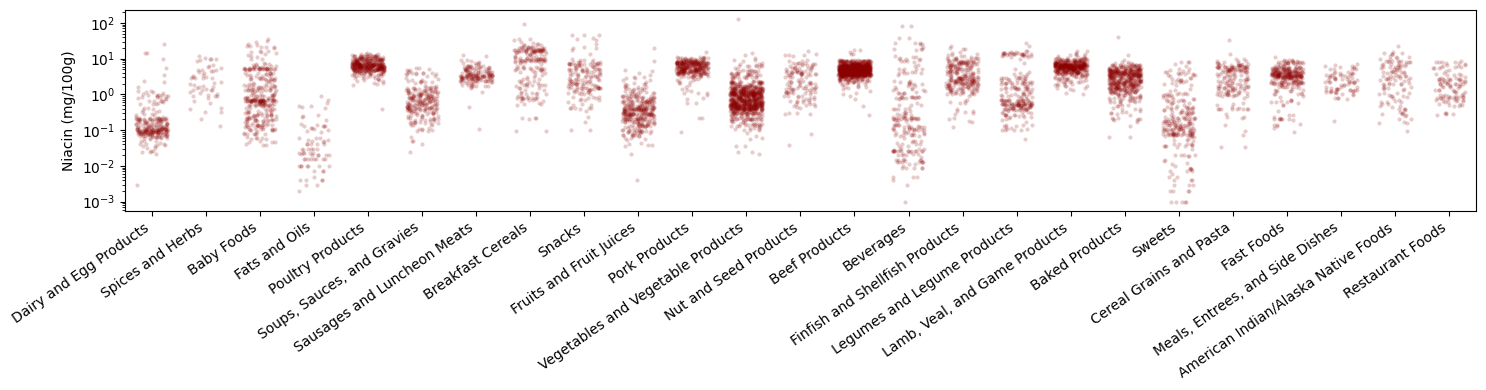

Nutrient Content Stripplots#

```python
import matplotlib.pyplot as plt
import seaborn as sns

# Lookup table from nutrient name to USDA nutrient_id
nutrient_id_lookup_df = food_nutrient_rows[['nutrient_id', 'nutrient_name']].drop_duplicates()

def get_nutrient_id(nutrient_name):
    result = nutrient_id_lookup_df[nutrient_id_lookup_df['nutrient_name'] == nutrient_name]['nutrient_id'].values
    return result[0] if len(result) > 0 else None

def stripplot_nutrient(df, nutrient_name, units_of_measure):
    plt.figure(figsize=(15, 4))
    # One semi-transparent dot per food; horizontal jitter spreads the dots within each food group
    sns.stripplot(data=df, x='food_group', y=nutrient_name, color='darkred', alpha=0.2, size=3, jitter=0.3)
    plt.xticks(rotation=35, ha='right')
    plt.xlabel('')
    plt.ylabel(f'{nutrient_name} ({units_of_measure}/100g)')
    plt.yscale('log')
    plt.tight_layout()
    plt.show()

def stripplot_nutrients(df):
    # Nutrient/unit pairs come from the long-format table, since df has no unit column
    nutrient_pairs = food_nutrient_rows[['nutrient_name', 'unit_of_measure']].drop_duplicates()
    for _, row in nutrient_pairs.iterrows():
        nutrient = row['nutrient_name']
        unit = row['unit_of_measure']
        try:
            stripplot_nutrient(df, nutrient, unit)
        except Exception as e:
            # e.g. a nutrient in the long table that is not a column of df
            print(f"Error plotting {nutrient}: {str(e)}")

stripplot_nutrients(food_rows)
```

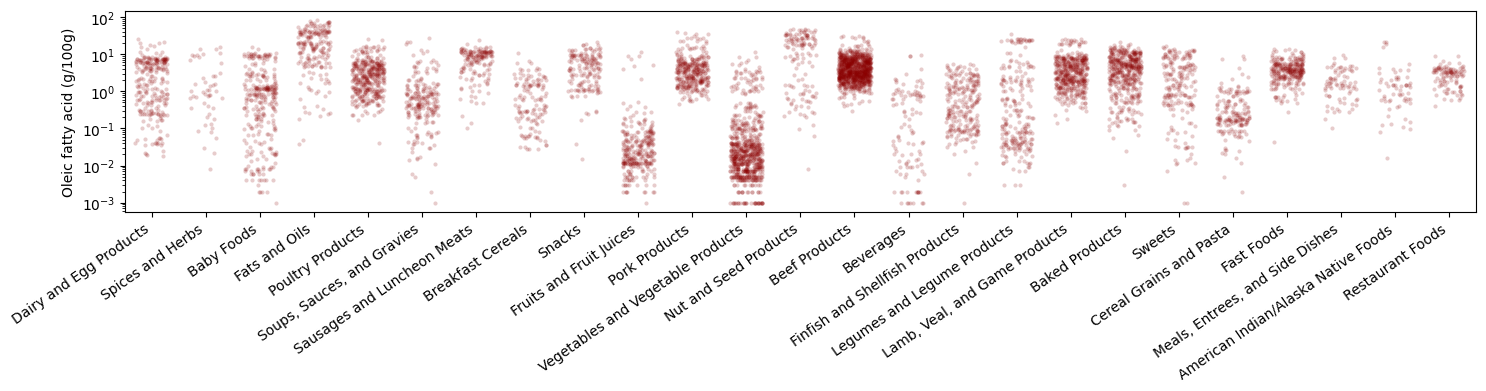

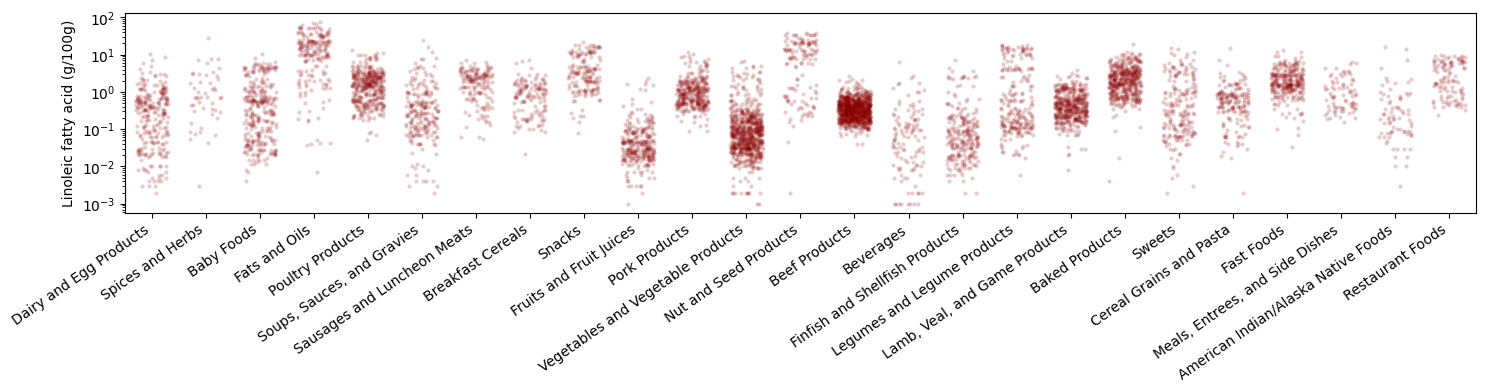

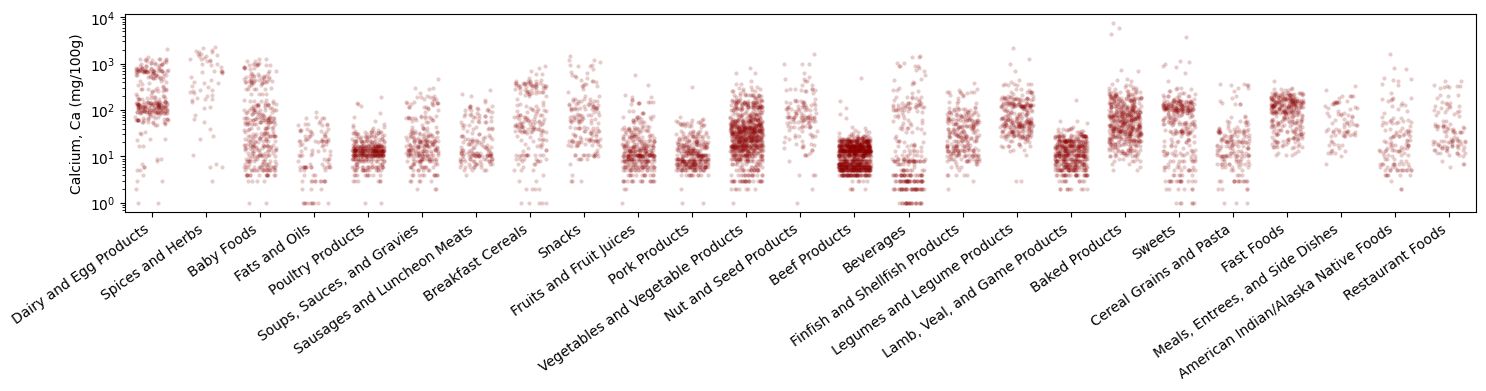

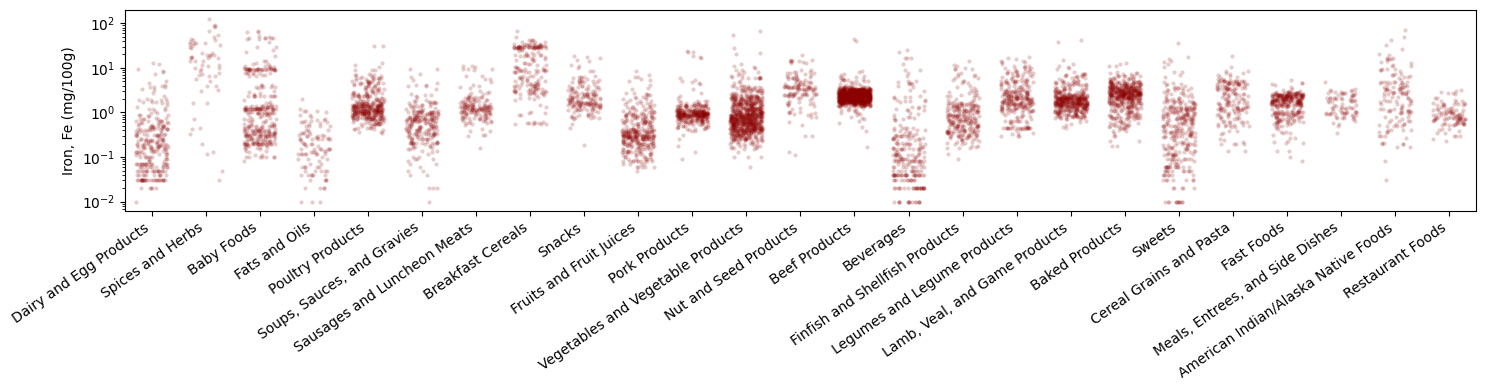

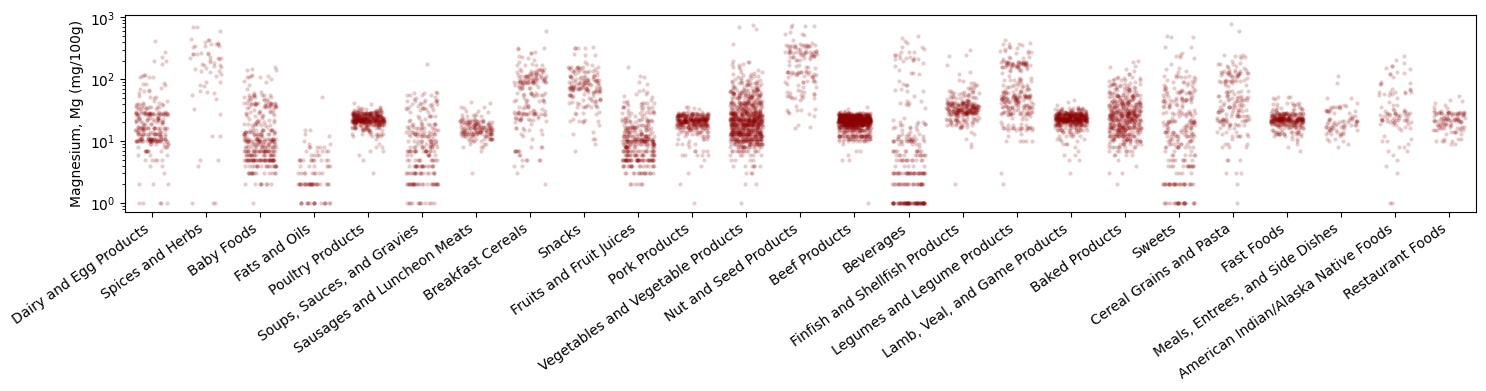

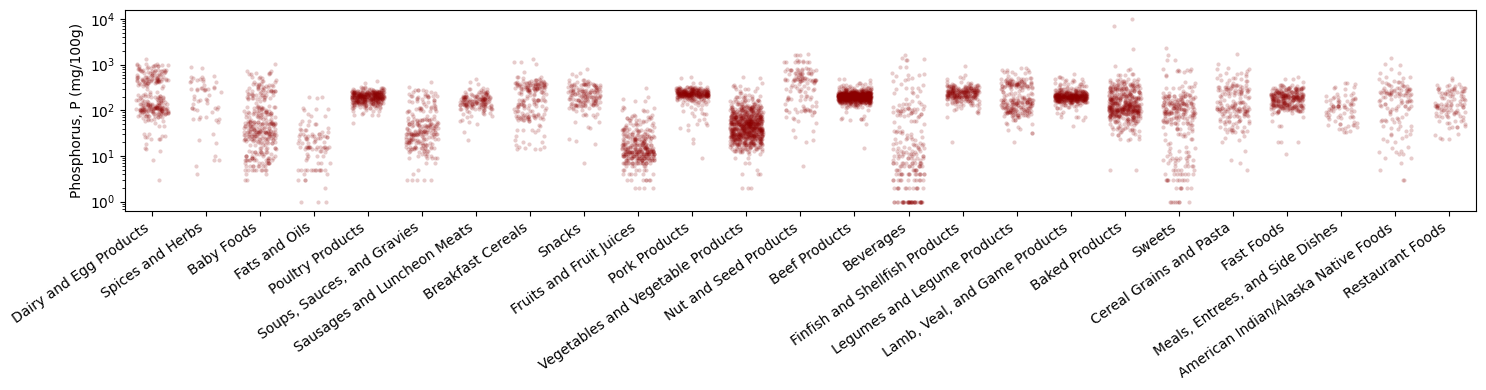

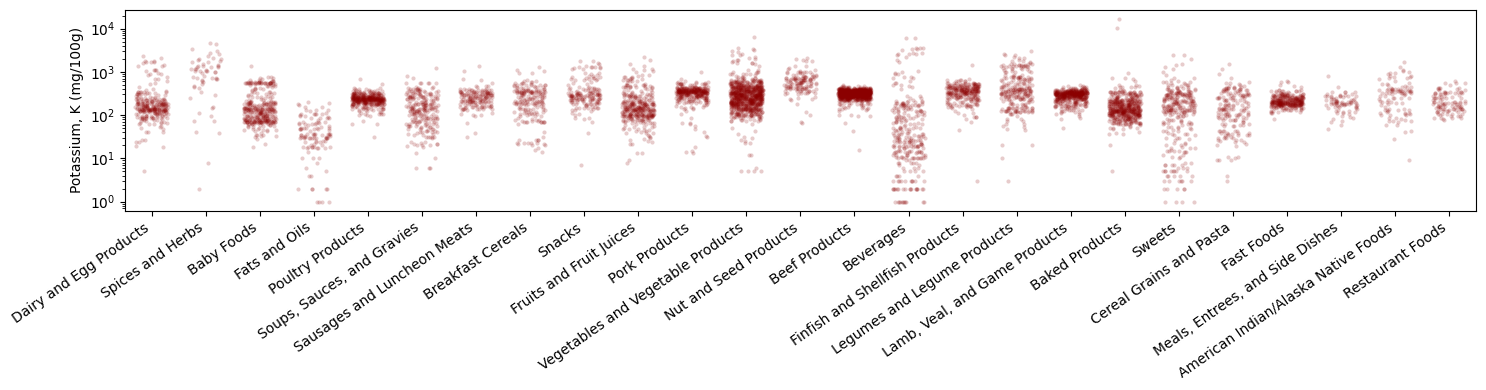

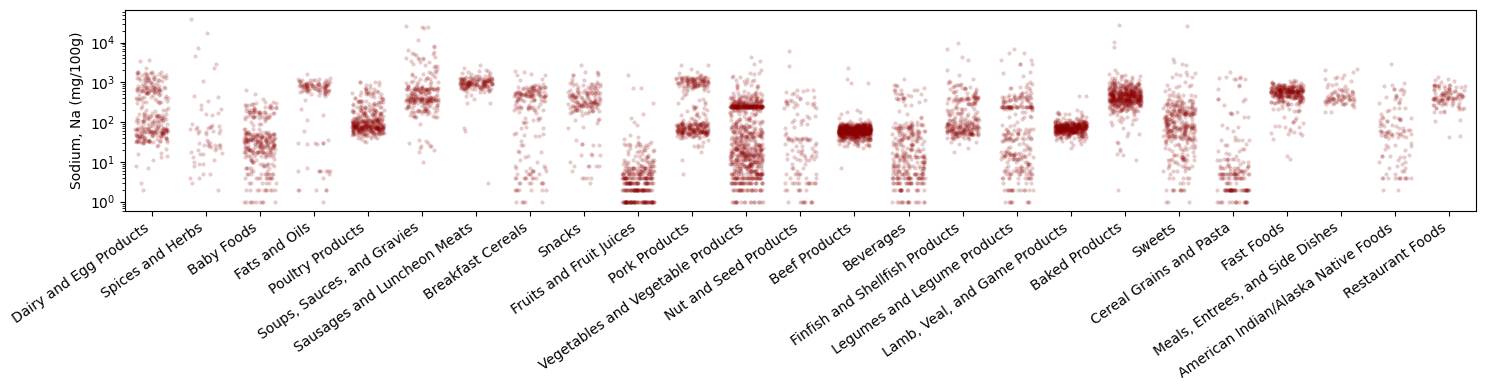

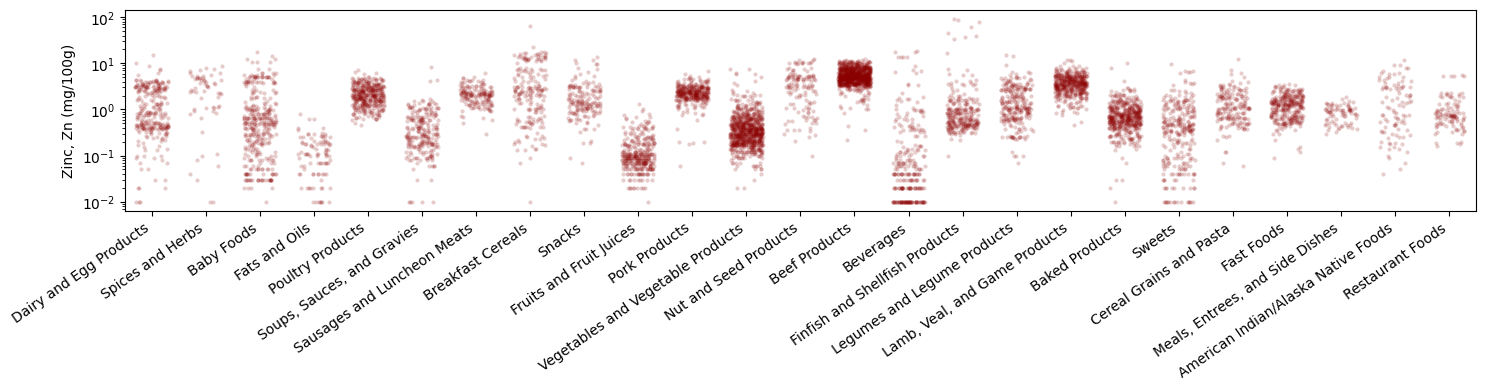

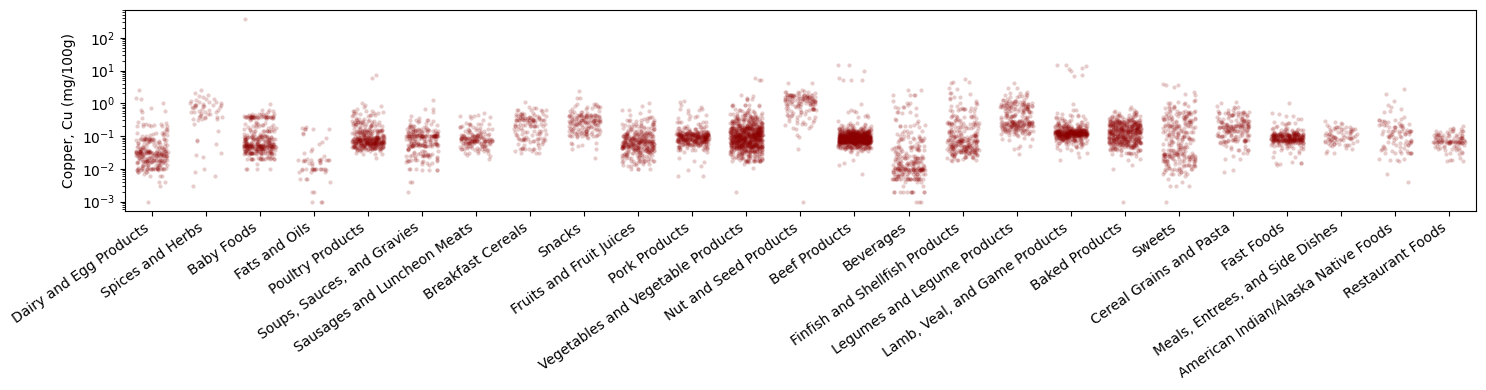

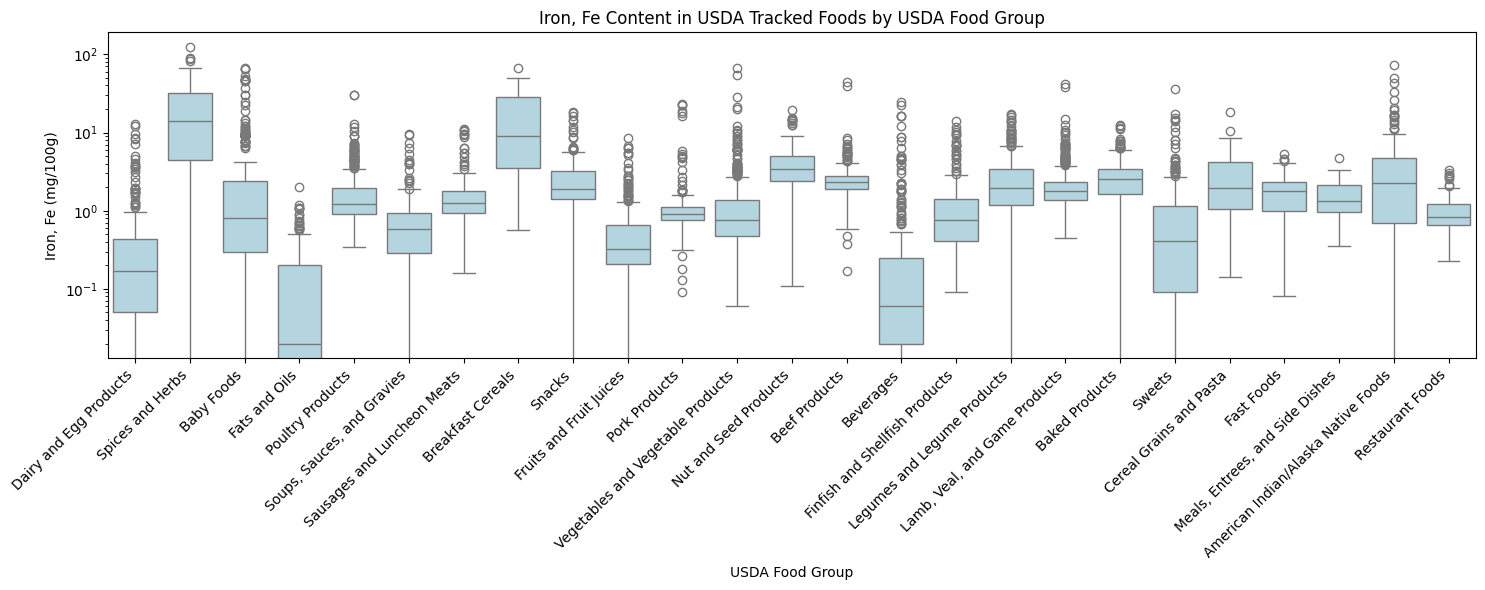

What do stripplots show?#

A stripplot (also known as a jitter plot or dot plot) is used here to show the distribution of each nutrient across the USDA food groups.

We discuss the iron stripplot (near the middle of the series above) as an example.

Construction:

The y-axis shows iron (Fe) content in milligrams per 100g of food on a logarithmic scale (roughly 10^-2 to 10^2)

The x-axis lists the food groups

Each dot represents an individual food item within that group

The dots are spread horizontally within each group’s strip to reduce overlap (jittering)

The dots are semi-transparent (alpha 0.2), so the red darkens where many points overlap, which shows point density

What it shows:

Wide variation in iron content both within and between food categories

Some categories, such as “Baby Foods” and the meat product groups (for example “Beef Products”, 0.17 to 44.55 mg/100g), show high variability, with items spanning multiple orders of magnitude in iron content

Many categories have a cluster of items in the 1-10 mg/100g range

Some categories (like “Dairy and Egg Products”) tend to have lower iron content

There are some extreme outliers, particularly in “Spices and Herbs”, which exceeds 100 mg/100g (dried thyme, 123.60 mg/100g)

The logarithmic scale is particularly important here as it allows visualization of both very small and very large iron contents in the same plot. This type of visualization is useful for nutritionists and food scientists to understand the distribution of iron content across different food types and identify particularly iron-rich or iron-poor categories.

Why dots line up at identical values#

Food Processing Standards - Many processed foods are fortified with iron according to standardized amounts set by regulatory agencies. For example, many breakfast cereals and enriched flour products are fortified to meet specific nutritional targets, which would result in multiple products having identical iron content.

Common Iron Sources - Foods that use the same iron-rich ingredient as an additive (like fortified flour or a specific iron compound) would naturally end up with similar iron levels. This is especially common in processed foods from the same manufacturer or category.

Measurement Precision - The data collection method might round measurements to certain significant figures or use standardized testing methods that only measure to a specific precision level, causing different foods to appear to have exactly the same iron content.

Database Estimation - Since this data comes from a nutritional database, some values may be estimated based on similar foods or standard recipes rather than individually measured, leading to identical values being assigned to similar foods.

Serving Size Standardization - Because iron content is reported per standard reference amount (here, per 100g of food), foods with similar base ingredients but different preparations might end up showing the same iron levels after standardization.
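Whichever explanation applies, the repeated values themselves are easy to list. A minimal sketch, assuming the wide `food_rows` layout (one column per nutrient plus `food_group`); `most_repeated_values` is a hypothetical helper and the toy values are illustrative, not USDA data:

```python
import pandas as pd

def most_repeated_values(df, value_col, group_col="food_group", top=3):
    """For each group, the values shared by more than one food, most frequent first."""
    counts = (
        df.groupby(group_col)[value_col]
        .value_counts()
        .rename("n_foods")
        .reset_index()
    )
    counts = counts[counts["n_foods"] > 1]
    return (
        counts.sort_values([group_col, "n_foods"], ascending=[True, False])
        .groupby(group_col)
        .head(top)
        .reset_index(drop=True)
    )

toy = pd.DataFrame({
    "food_group": ["Breakfast Cereals"] * 4 + ["Sweets"] * 3,
    "Iron, Fe": [29.0, 29.0, 29.0, 4.1, 2.0, 2.0, 3.3],
})
repeats = most_repeated_values(toy, "Iron, Fe")
```

On real data, a large `n_foods` at one value within a group is a hint of fortification targets or values copied between similar foods, which can then be checked against the food names.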

Why are some food groups multimodal#

In “Dairy and Egg Products,” there appear to be at least two distinct clusters: one at a very low iron content (likely milk products, which are notably low in iron) and another at a higher level (likely egg products, which contain more iron). This makes biological sense given the very different nutritional profiles of dairy versus eggs.

The “Spices and Herbs” group shows multiple clusters, which could represent:

Pure spices and dried herbs (typically higher in iron due to concentration)

Spice blends and mixtures (moderate iron levels)

Fresh herbs (lower iron content due to higher water content)

“Breakfast Cereals” also shows clear multimodality, which likely reflects:

Fortified cereals (very high iron content cluster)

Natural/unfortified cereals (lower iron content cluster)

This separation is particularly interesting as it probably represents manufacturer decisions about fortification rather than natural variation.

This multimodality offers valuable insights into both the natural variation in food composition and human interventions like fortification or processing methods.

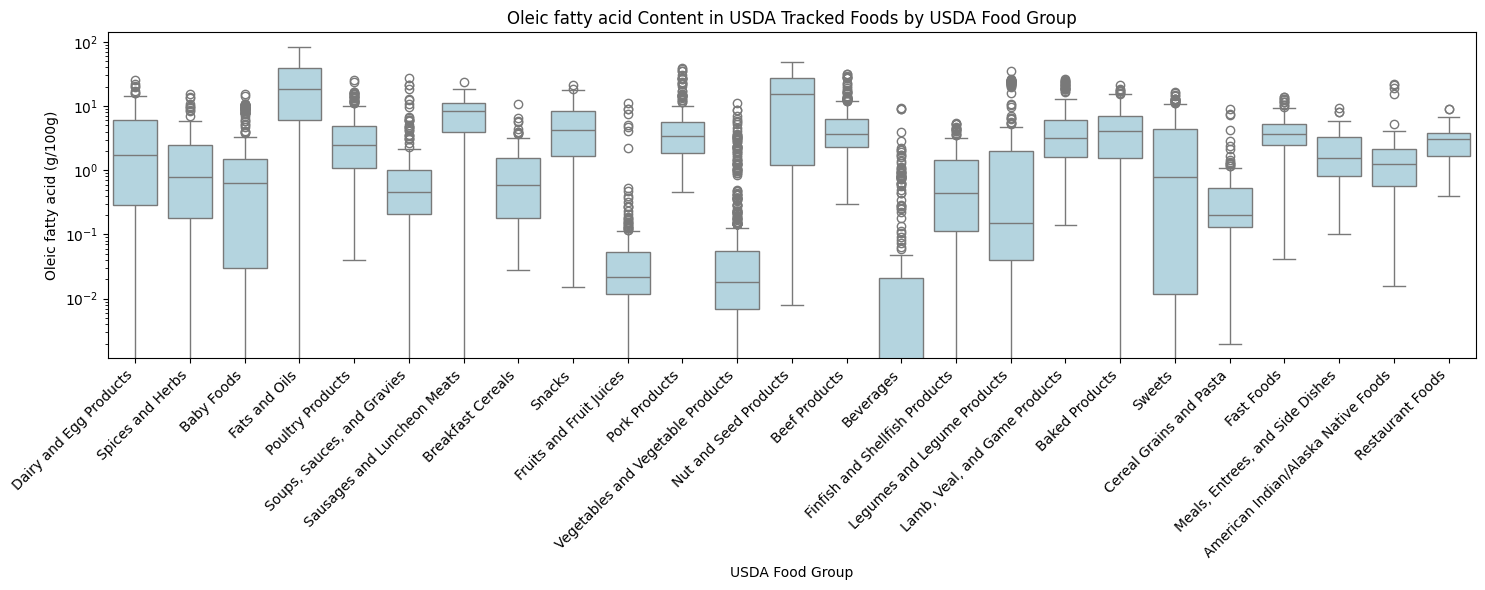

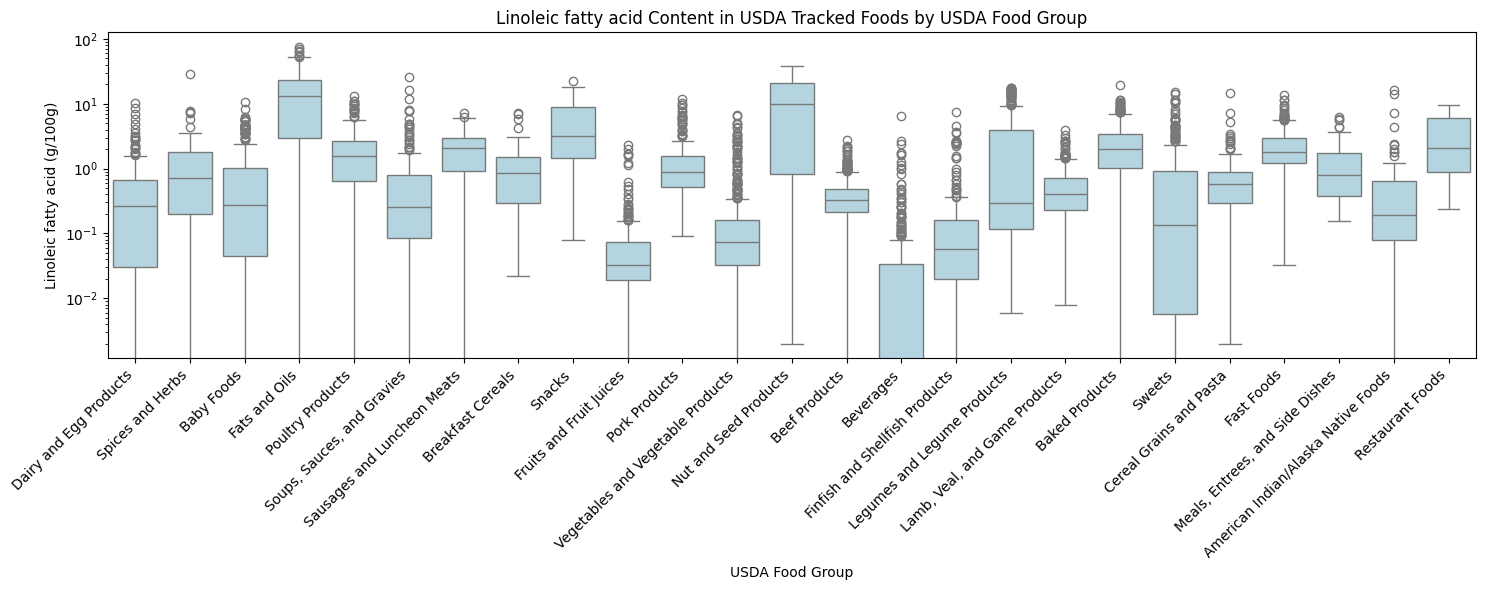

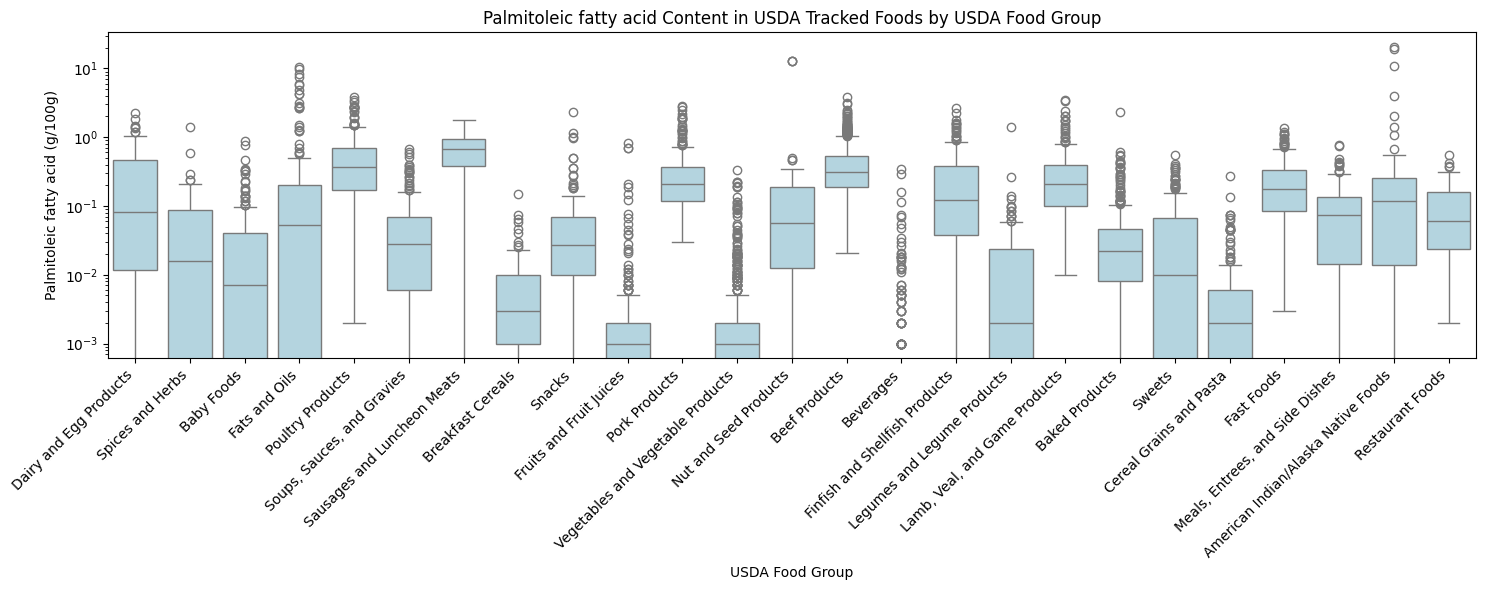

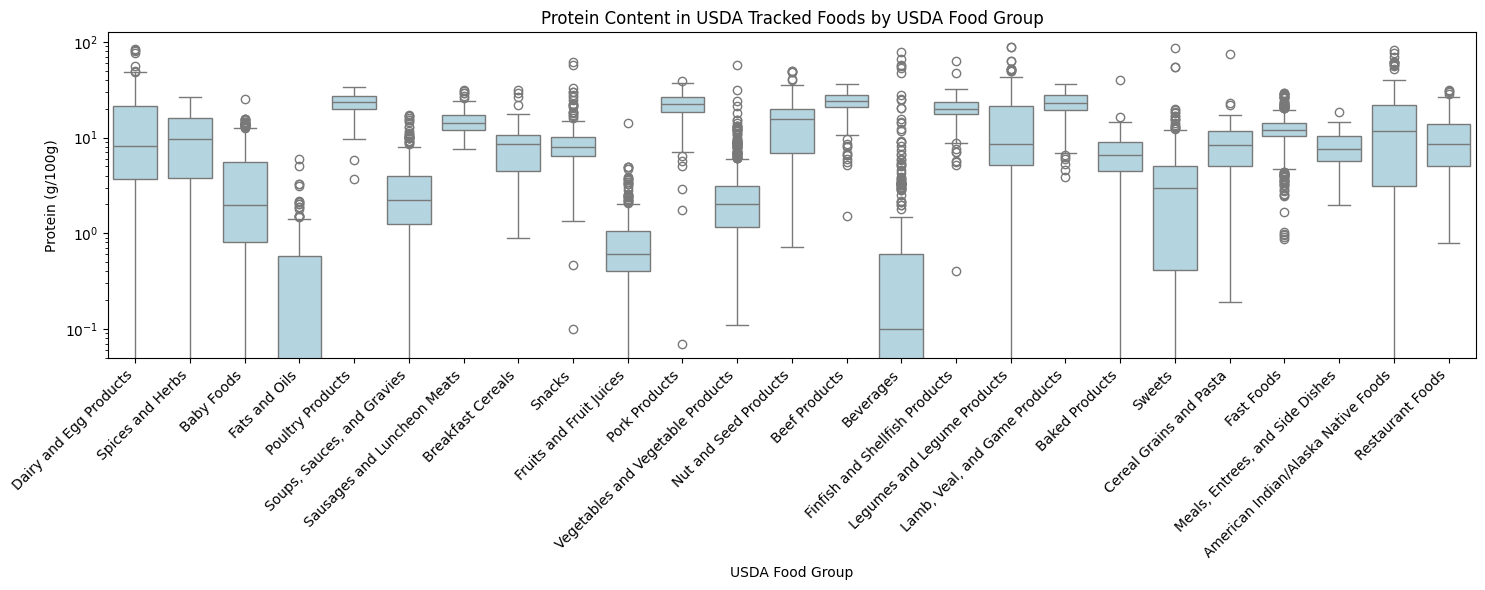

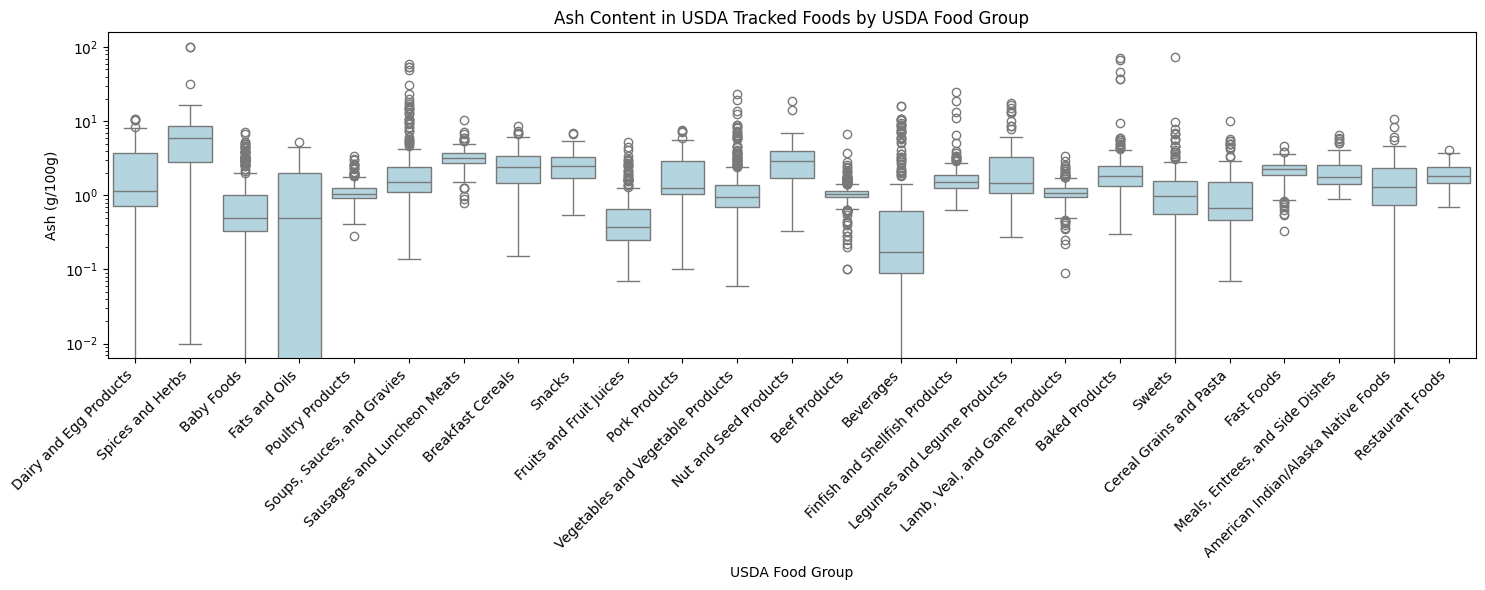

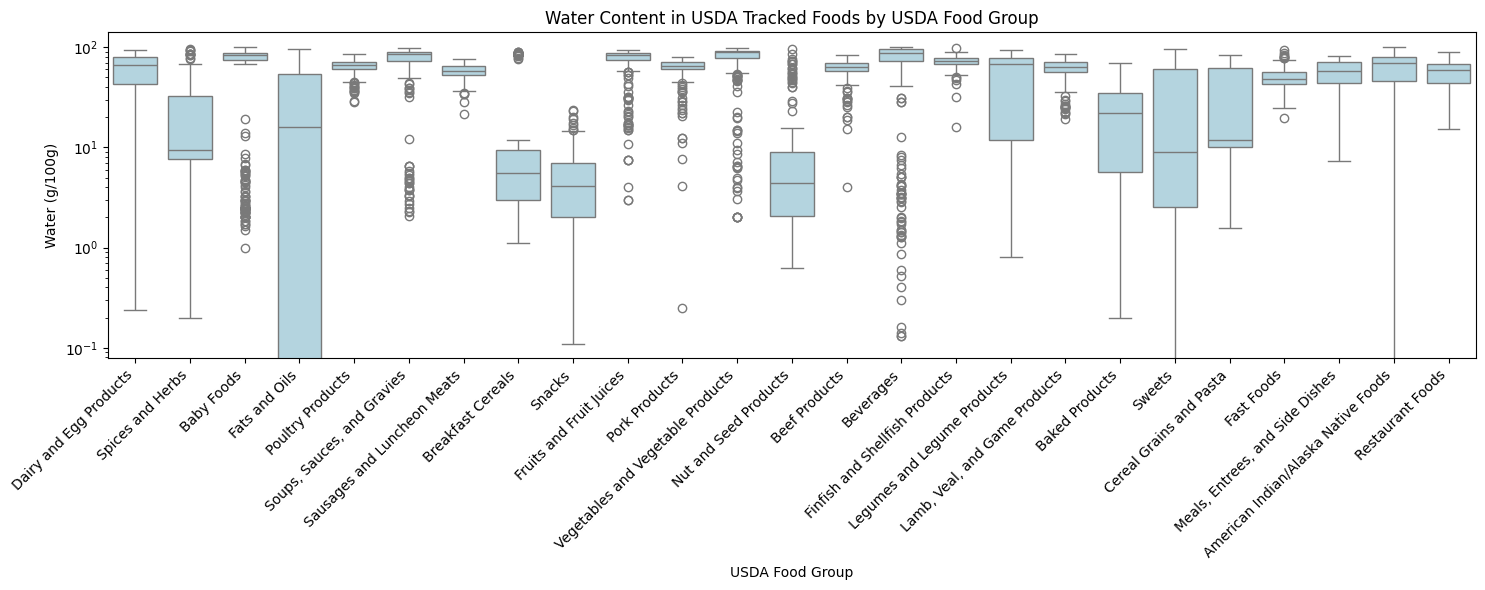

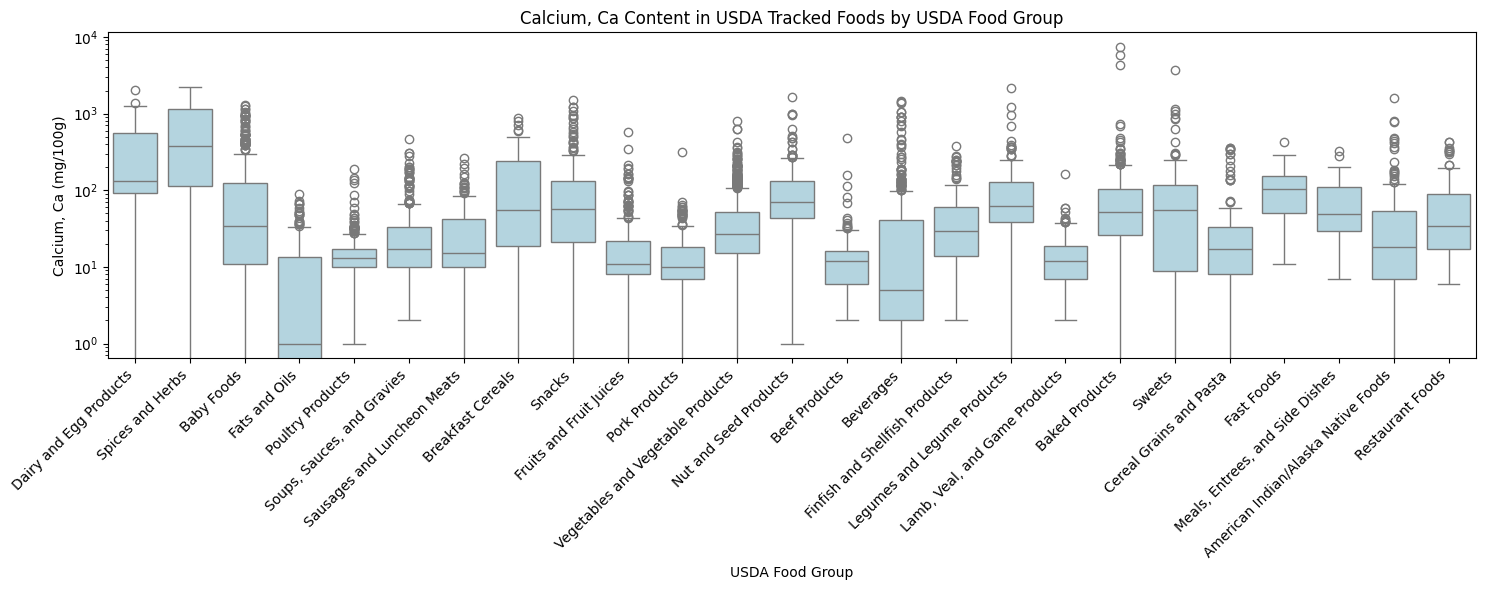

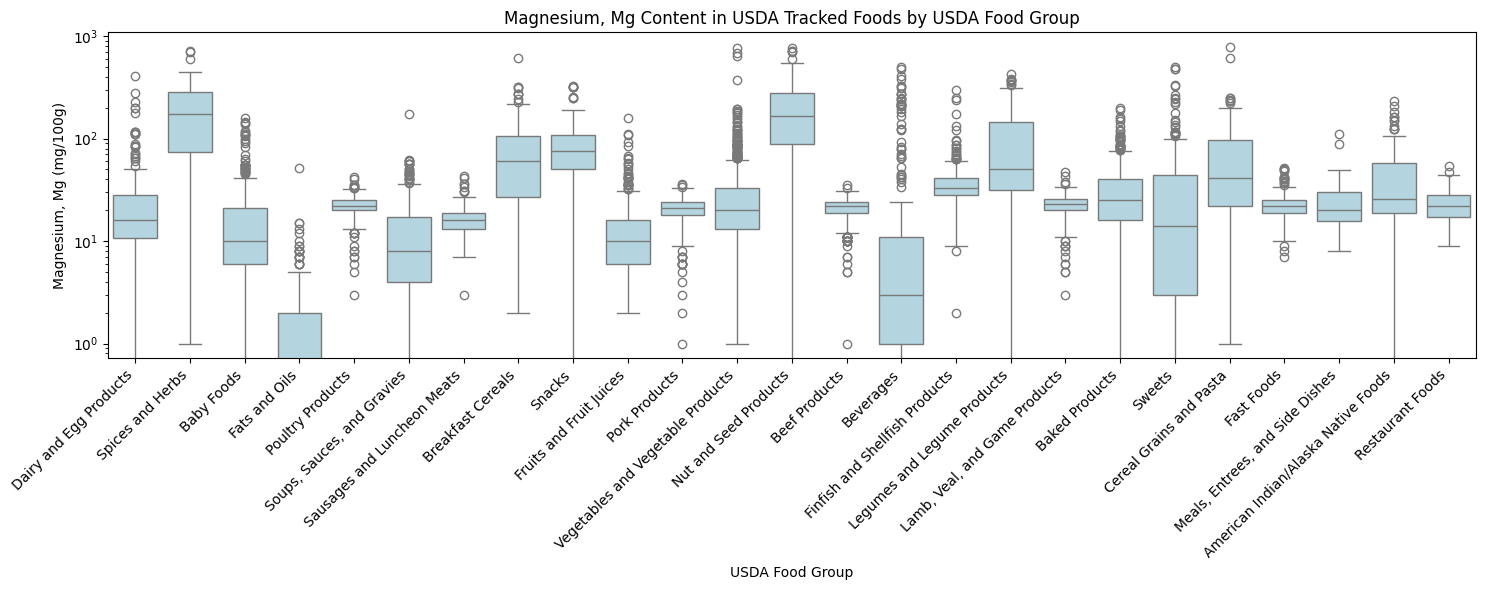

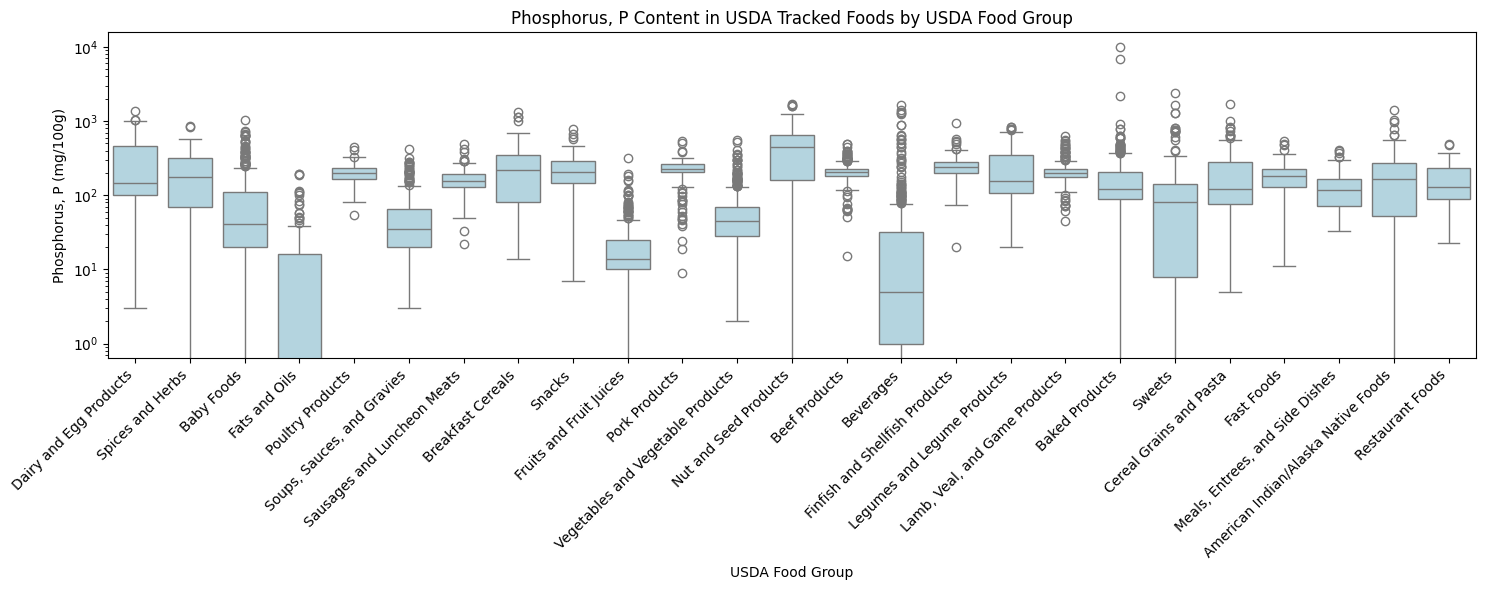

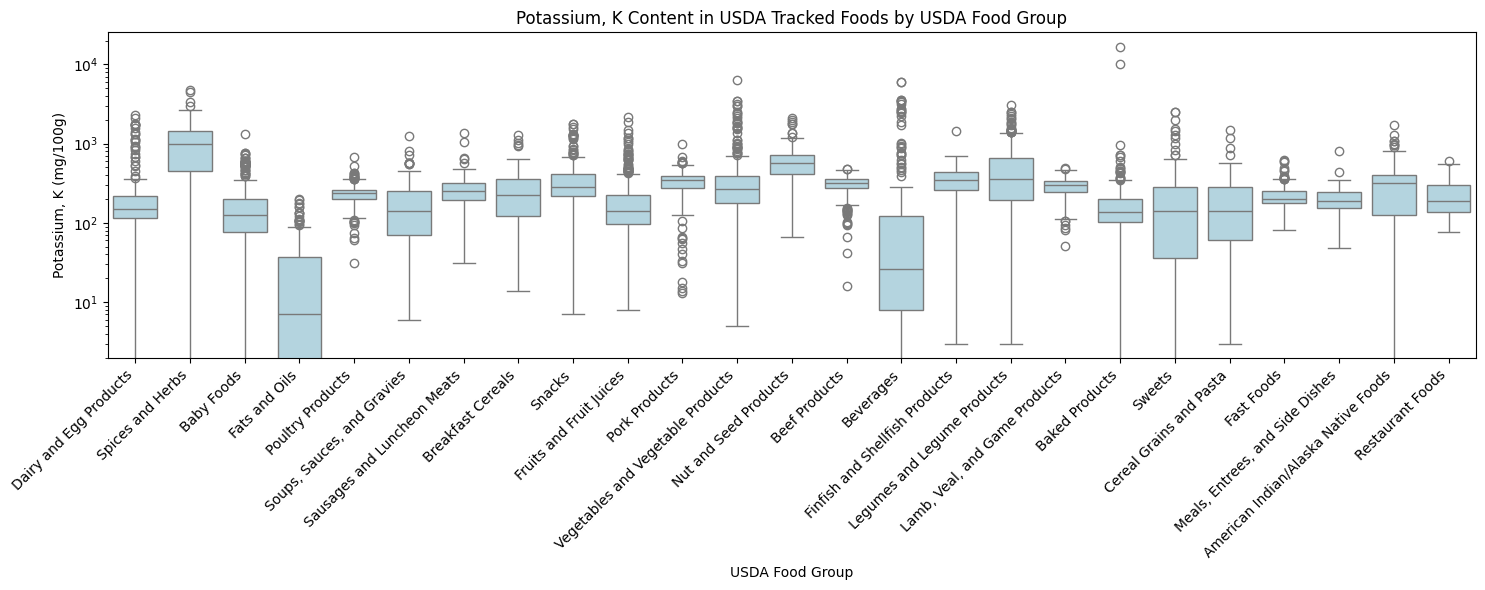

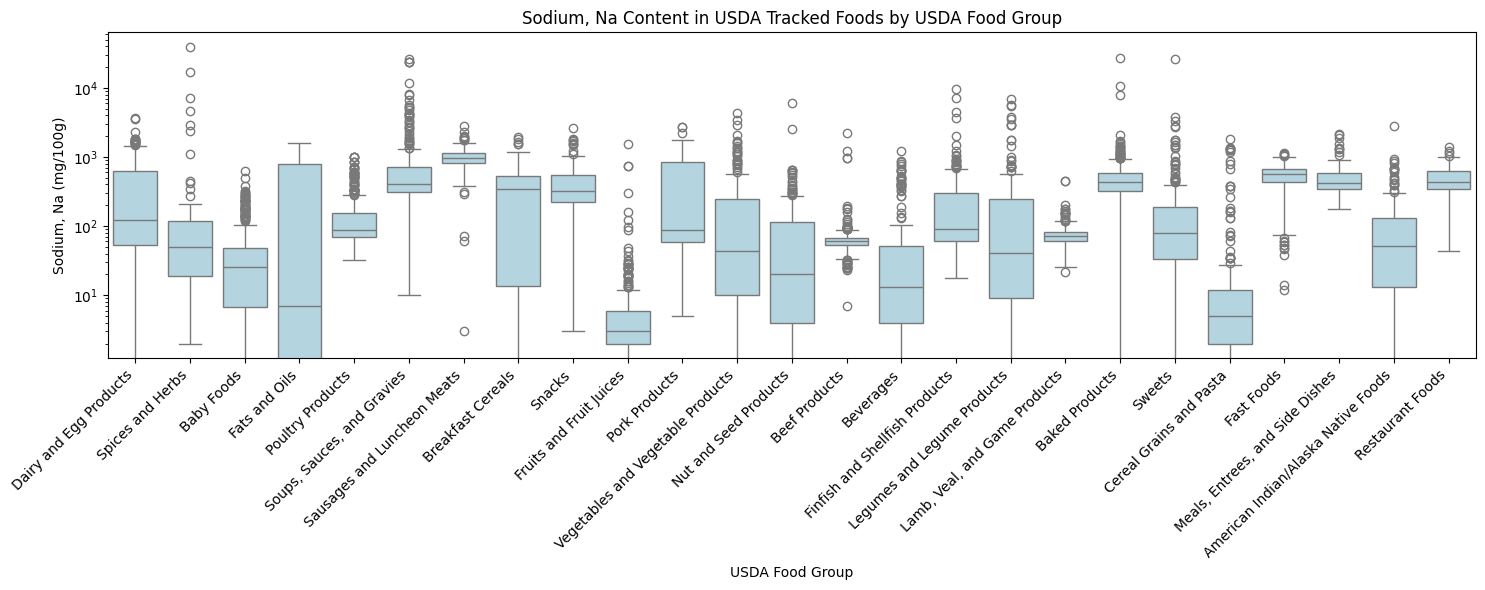

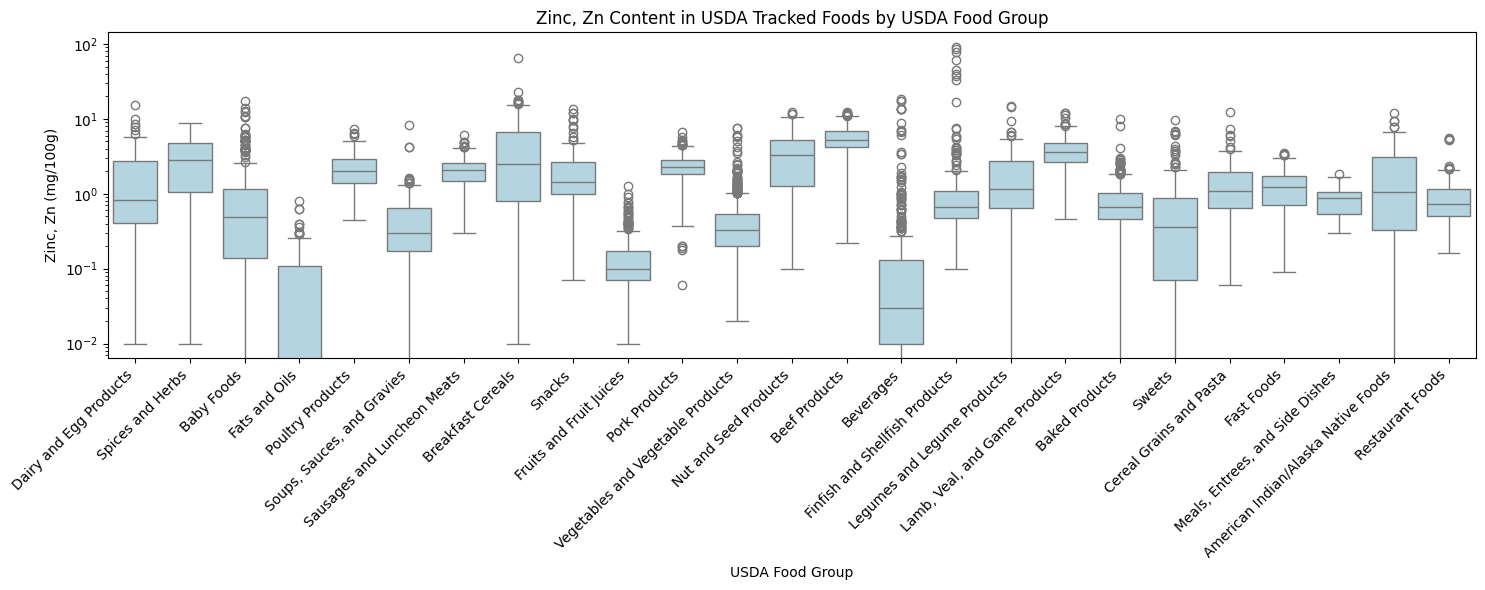

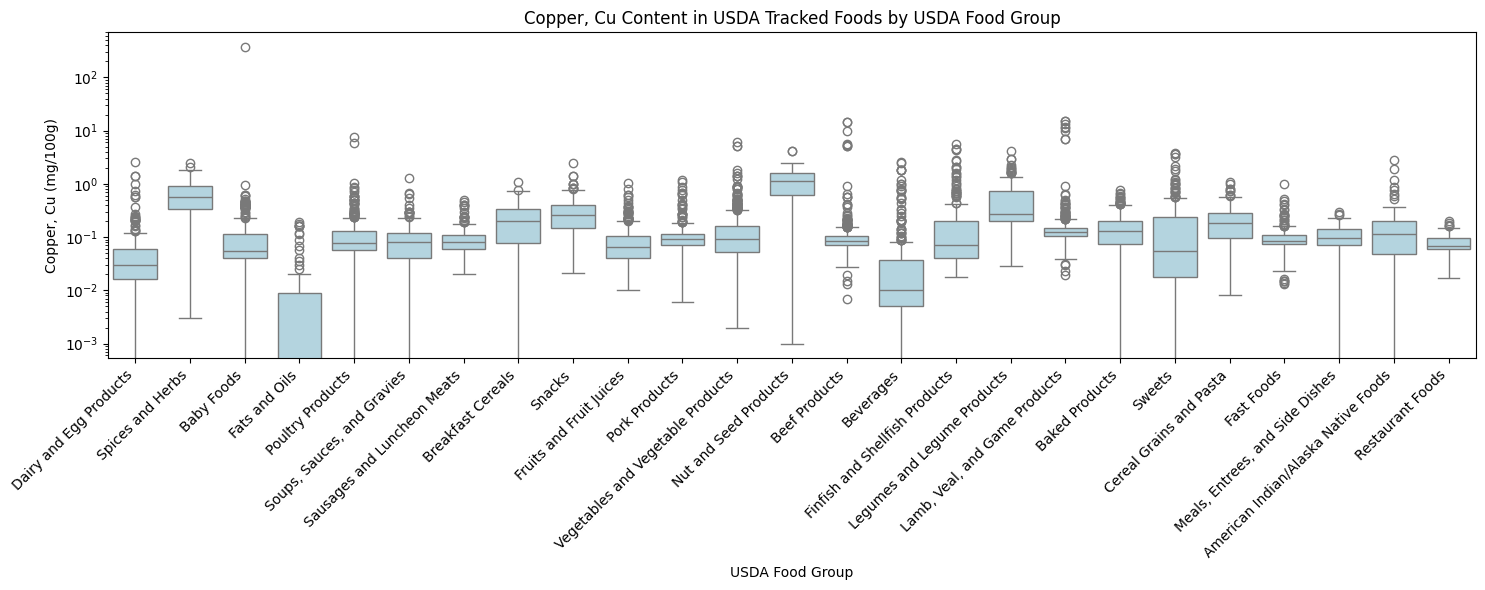

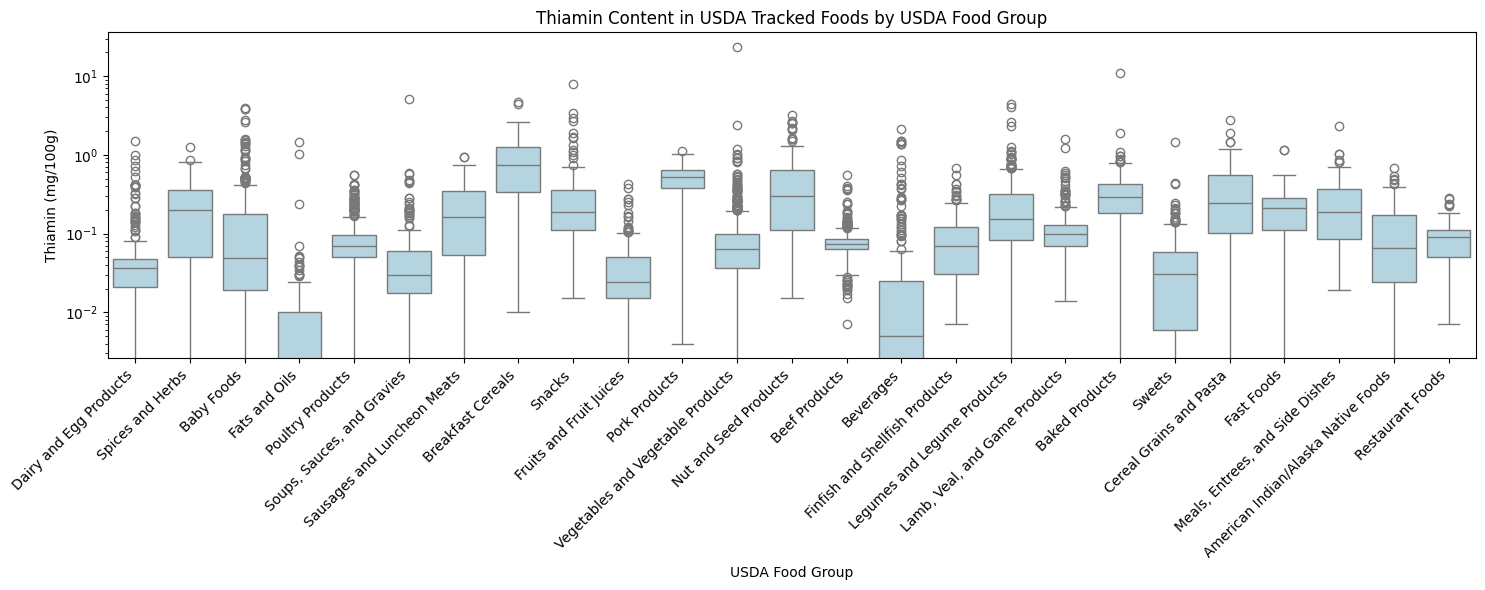

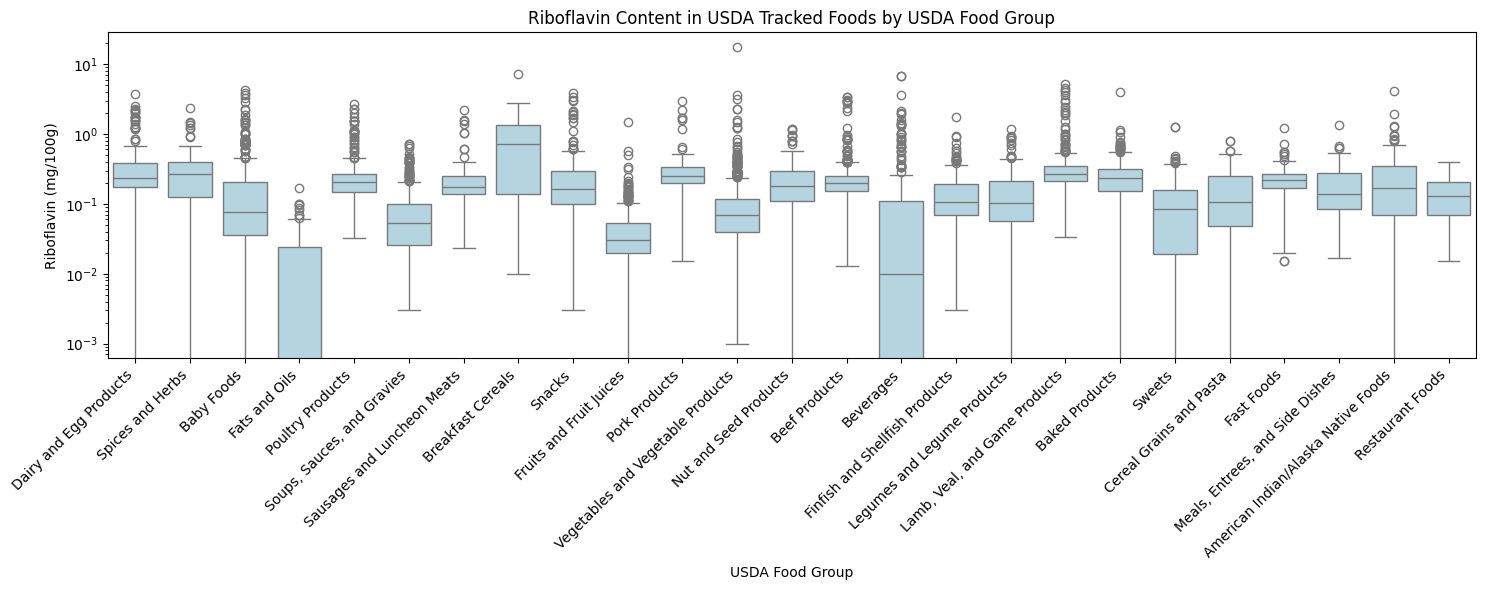

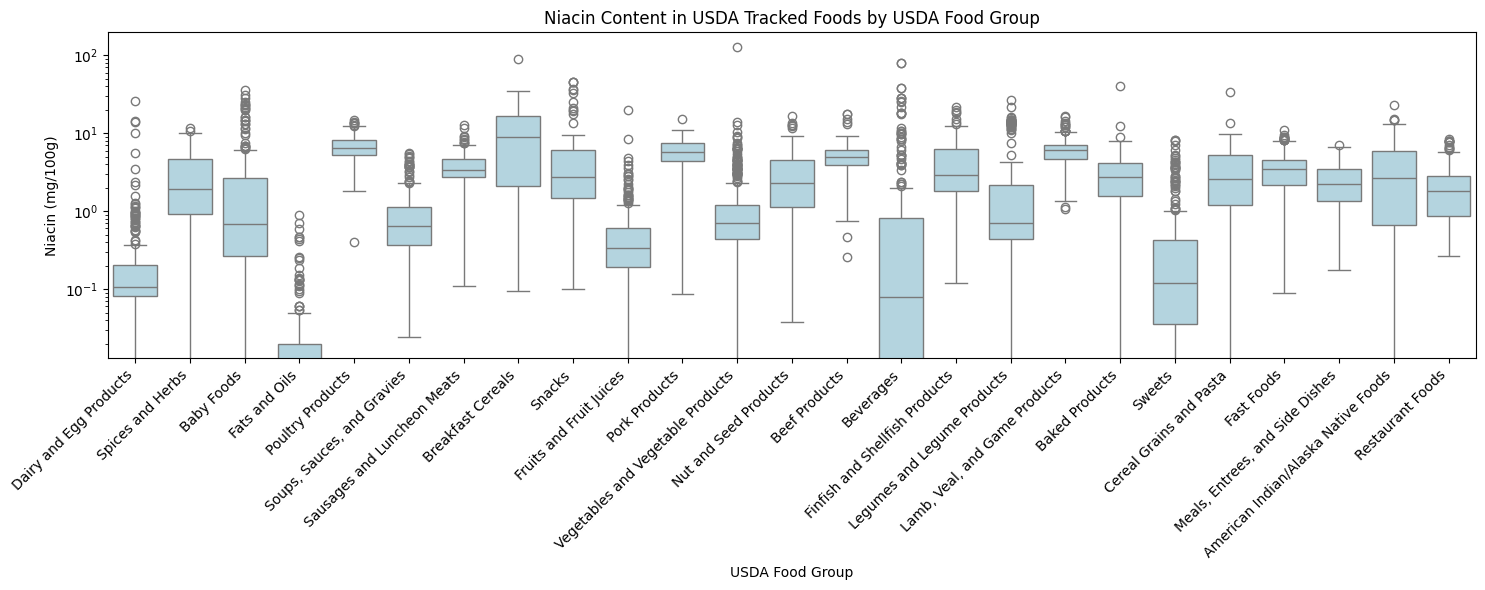

Nutrient Content Boxplots#

```python
import matplotlib.pyplot as plt
import seaborn as sns

def boxplot_nutrient(df, nutrient_name, units_of_measure):
    plt.figure(figsize=(15, 6))
    sns.boxplot(data=df, x='food_group', y=nutrient_name, color='lightblue')
    plt.xticks(rotation=45, ha='right')
    plt.xlabel('USDA Food Group')
    plt.ylabel(f'{nutrient_name} ({units_of_measure}/100g)')
    plt.yscale('log')
    plt.title(f'{nutrient_name} Content in USDA Tracked Foods by USDA Food Group')
    plt.tight_layout()
    plt.show()

def boxplot_nutrients(df):
    # Get unique nutrient-unit pairs from the long-format table
    nutrient_pairs = food_nutrient_rows[['nutrient_name', 'unit_of_measure']].drop_duplicates()
    for _, row in nutrient_pairs.iterrows():
        nutrient = row['nutrient_name']
        unit = row['unit_of_measure']
        try:
            boxplot_nutrient(df, nutrient, unit)
        except Exception as e:
            print(f"Error plotting {nutrient}: {str(e)}")

boxplot_nutrients(food_rows)
```

How the boxplot is worse than the stripplot#

Multimodality - The stripplot reveals clear multimodal distributions (like in breakfast cereals and dairy/eggs) that are completely obscured in the boxplot. A boxplot summarizes each group with a single median and box, so it cannot represent multiple peaks in the distribution.

Point Density - The stripplot shows exactly where data points cluster densely versus sparsely. For example, you can see if there are many samples at a particular iron content level. Boxplots reduce this to just quartiles and outliers, losing the detailed density information.

Gaps in Distribution - The stripplot reveals clear gaps in some food groups where no samples exist, suggesting natural breaks between subgroups of foods. These gaps are invisible in boxplots since they just show continuous ranges.

Sample Size Differences - The stripplot shows the exact number of samples in each food group through the number of points. While boxplots can be modified to show this, traditional boxplots don’t convey sample size information.

Fine Structure of Outliers - The stripplot shows the precise distribution of outlier points, while boxplots typically collapse these into simple whiskers or individual points, losing information about potential patterns or clusters in the outlier region.

Discretization Effects - Some food groups show horizontal “banding” in the stripplot, where many foods share exactly the same value. Features like this are completely hidden in a boxplot.

The boxplot can be misleading if you assume:

The data is unimodal

The distribution is continuous

The data points are evenly distributed between the quartiles

How the boxplot is better than the stripplot#

The boxplot does offer several advantages for this particular dataset:

Immediate Visual Summary - With so many food groups and highly variable iron content, the boxplot makes it much easier to quickly compare the median and quartile ranges between groups. The stripplot’s individual points, while more detailed, can make these quick comparisons more challenging.

Outlier Emphasis - Because iron content varies across multiple orders of magnitude, the boxplot’s explicit marking of outliers helps identify extreme values more clearly. In the stripplot, these extreme points blend in with the overall distribution.

Scale Readability - The logarithmic scale combined with dense point clouds in the stripplot can make it difficult to read exact values. The boxplot’s clear quartile boxes and median lines make it easier to read approximate values off the y-axis.

Visual Clutter - Some food groups (like Beef Products) have many samples clustered in a small range, creating significant overplotting in the stripplot where points overlap extensively. The boxplot summarizes this dense information more cleanly.

Space Efficiency - The food group labels on the x-axis are quite long, and the boxplot’s narrower format makes these labels more readable compared to the wider space needed for the stripplot’s point spread.

For a complete analysis, having access to both visualization types is ideal, as they complement each other’s strengths and weaknesses.
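One way to get both views in a single figure is to overlay the individual points on the boxplot. The sketch below does this with plain matplotlib (with seaborn, calling `sns.boxplot` and then `sns.stripplot` on the same axes gives a similar result). `box_and_strip` is a hypothetical helper and the data are random lognormal samples, not USDA values. Fliers are turned off in the boxplot because the strip layer already shows every point.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

def box_and_strip(groups, labels, jitter=0.15, seed=0):
    """Boxplot per group with the individual points overlaid using horizontal jitter."""
    rng = np.random.default_rng(seed)
    fig, ax = plt.subplots(figsize=(8, 4))
    ax.boxplot(groups, showfliers=False)  # boxes sit at x = 1, 2, ..., n
    for i, values in enumerate(groups, start=1):
        x = i + rng.uniform(-jitter, jitter, size=len(values))
        ax.scatter(x, values, s=8, alpha=0.3, color="darkred")
    ax.set_xticks(range(1, len(labels) + 1))
    ax.set_xticklabels(labels, rotation=45, ha="right")
    ax.set_yscale("log")
    fig.tight_layout()
    return fig, ax

# Random lognormal samples standing in for two food groups
sample_rng = np.random.default_rng(1)
groups = [sample_rng.lognormal(0.0, 1.0, 50), sample_rng.lognormal(1.0, 0.5, 30)]
fig, ax = box_and_strip(groups, ["Group A", "Group B"])
```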

Foods Notable for their Iron Content#

```python
import pandas as pd
from IPython.display import display

# For each food group, find the foods with the highest and lowest iron content
results = []
for group in food_rows['food_group'].unique():
    group_df = food_rows[food_rows['food_group'] == group]
    max_iron = group_df.loc[group_df['Iron, Fe'].idxmax()]  # Find max iron food
    min_iron = group_df.loc[group_df['Iron, Fe'].idxmin()]  # Find min iron food
    results.append({
        'Food Group': group,
        'Highest Iron Food': max_iron['food_name'],
        'Highest Iron (mg/100g)': max_iron['Iron, Fe'],
        'Lowest Iron Food': min_iron['food_name'],
        'Lowest Iron (mg/100g)': min_iron['Iron, Fe']
    })

# Create DataFrame from results
summary_df = pd.DataFrame(results)

# Display results
print("\nHighest and Lowest Iron Content Foods by Food Group:")
display(summary_df)
```

Highest and Lowest Iron Content Foods by Food Group:

| | Food Group | Highest Iron Food | Highest Iron (mg/100g) | Lowest Iron Food | Lowest Iron (mg/100g) |

|---|---|---|---|---|---|

| 0 | Dairy and Egg Products | Beverage, instant breakfast powder, chocolate,... | 12.82 | Butter oil, anhydrous | 0.00 |

| 1 | Spices and Herbs | Spices, thyme, dried | 123.60 | Seasoning mix, dry, sazon, coriander & annatto | 0.00 |

| 2 | Baby Foods | Babyfood, cereal, oatmeal, with honey, dry | 67.23 | Babyfood, water, bottled, GERBER, without adde... | 0.00 |

| 3 | Fats and Oils | Butter replacement, without fat, powder | 2.00 | Fat, beef tallow | 0.00 |

| 4 | Poultry Products | Duck, domesticated, liver, raw | 30.53 | Chicken, broilers or fryers, breast, skinless,... | 0.34 |

| 5 | Soups, Sauces, and Gravies | Gravy, instant turkey, dry | 9.57 | Soup, HEALTHY CHOICE Chicken Noodle Soup, canned | 0.00 |

| 6 | Sausages and Luncheon Meats | Braunschweiger (a liver sausage), pork | 11.20 | Dutch brand loaf, chicken, pork and beef | 0.16 |

| 7 | Breakfast Cereals | Cereals ready-to-eat, RALSTON Enriched Wheat B... | 67.67 | Cereals, WHEATENA, cooked with water | 0.56 |

| 8 | Snacks | Formulated bar, MARS SNACKFOOD US, SNICKERS MA... | 18.15 | Rice crackers | 0.00 |

| 9 | Fruits and Fruit Juices | Baobab powder | 8.42 | Pears, asian, raw | 0.00 |

| 10 | Pork Products | Pork, fresh, variety meats and by-products, li... | 23.30 | Pork, fresh, variety meats and by-products, le... | 0.09 |

| 11 | Vegetables and Vegetable Products | Seaweed, Canadian Cultivated EMI-TSUNOMATA, dry | 66.38 | Waterchestnuts, chinese, (matai), raw | 0.06 |

| 12 | Nut and Seed Products | Seeds, sesame butter, paste | 19.20 | Seeds, sisymbrium sp. seeds, whole, dried | 0.11 |

| 13 | Beef Products | Beef, variety meats and by-products, spleen, raw | 44.55 | Beef, variety meats and by-products, suet, raw | 0.17 |

| 14 | Beverages | Beverages, UNILEVER, SLIMFAST Shake Mix, high ... | 24.84 | Alcoholic beverage, beer, regular, BUDWEISER | 0.00 |

| 15 | Finfish and Shellfish Products | Mollusks, clam, mixed species, cooked, breaded... | 13.91 | Fish, wolffish, Atlantic, raw | 0.09 |

| 16 | Legumes and Legume Products | Peanut butter, chunky, vitamin and mineral for... | 17.50 | SILK Coffee, soymilk | 0.00 |

| 17 | Lamb, Veal, and Game Products | Lamb, variety meats and by-products, spleen, raw | 41.89 | Veal, breast, separable fat, cooked | 0.45 |

| 18 | Baked Products | Archway Home Style Cookies, Reduced Fat Ginger... | 12.57 | Leavening agents, baking soda | 0.00 |

| 19 | Sweets | Cocoa, dry powder, unsweetened, HERSHEY'S Euro... | 36.00 | Topping, SMUCKER'S MAGIC SHELL | 0.00 |

| 20 | Cereal Grains and Pasta | Rice bran, crude | 18.54 | Rice, white, glutinous, unenriched, cooked | 0.14 |

| 21 | Fast Foods | BURGER KING, DOUBLE WHOPPER, with cheese | 5.30 | McDONALD'S, Hot Caramel Sundae | 0.08 |

| 22 | Meals, Entrees, and Side Dishes | Rice mix, cheese flavor, dry mix, unprepared | 4.74 | Rice bowl with chicken, frozen entree, prepare... | 0.35 |

| 23 | American Indian/Alaska Native Foods | Whale, beluga, meat, dried (Alaska Native) | 72.35 | Oil, beluga, whale (Alaska Native) | 0.00 |

| 24 | Restaurant Foods | DENNY'S, top sirloin steak | 3.27 | Restaurant, Latino, arroz con leche (rice pudd... | 0.23 |
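The per-group loop above can also be written without an explicit loop using `groupby(...).idxmax()` and `idxmin()`, which return the row label of each group's extreme value (ties resolve to the first occurrence, as with `Series.idxmax`). A sketch with `extremes_by_group` as a hypothetical helper, checked on four rows taken from the table above:

```python
import pandas as pd

def extremes_by_group(df, value_col="Iron, Fe", group_col="food_group", name_col="food_name"):
    """Highest- and lowest-value food in each group, without an explicit loop."""
    by_group = df.groupby(group_col)[value_col]
    cols = [group_col, name_col, value_col]
    highest = df.loc[by_group.idxmax(), cols].set_index(group_col)
    lowest = df.loc[by_group.idxmin(), cols].set_index(group_col)
    # Overlapping column names get _max / _min suffixes
    return highest.join(lowest, lsuffix="_max", rsuffix="_min").reset_index()

toy = pd.DataFrame({
    "food_group": ["Spices and Herbs", "Spices and Herbs", "Fats and Oils", "Fats and Oils"],
    "food_name": ["Spices, thyme, dried", "Seasoning mix, dry, sazon, coriander & annatto",
                  "Butter replacement, without fat, powder", "Fat, beef tallow"],
    "Iron, Fe": [123.60, 0.00, 2.00, 0.00],
})
summary = extremes_by_group(toy)
```

Unlike the loop, the result is sorted by food group name rather than by order of first appearance.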

Observations:

Most Striking Iron Content:

The highest iron content overall is found in dried thyme (Spices and Herbs) at 123.60 mg/100g, about 1.7 times the highest value in any other food group (dried beluga whale meat, 72.35 mg/100g). Concentrated dried herbs can be an unexpected source of essential nutrients, although herbs are typically consumed in small quantities.

Twelve of the 25 food groups include at least one food listed at 0.00 mg/100g (values are rounded to two decimals), among them Beverages, Baked Products, and Fruits and Fruit Juices

Interesting Contrasts:

In Dairy and Egg Products, there’s a dramatic range from 12.82 mg/100g (instant breakfast powder) to 0.00 mg/100g (butter oil, anhydrous)

Breakfast Cereals show a notable difference between RALSTON Enriched Wheat (67.67 mg/100g) and WHEATENA cooked with water (0.56 mg/100g), highlighting the impact of fortification (cooking with water also dilutes the per-100g value)

Organ Meats - Several of the highest iron contents come from organ meats such as liver and spleen:

Duck liver (30.53 mg)

Braunschweiger liver sausage (11.20 mg)

Spleen in both beef and lamb categories (44.55 mg and 41.89 mg respectively)

Surprising Findings:

Seaweed (Canadian Cultivated EMI-TSUNOMATA) has a remarkably high iron content at 66.38 mg

Fast food items have relatively low iron content even at their highest (BURGER KING DOUBLE WHOPPER with cheese at 5.30 mg/100g)

Raw soybeans have a notably high iron content (15.70 mg/100g), approaching the fortified chunky peanut butter that tops the Legumes group (17.50 mg/100g)

Traditional vs. Processed:

Many of the highest iron contents come from either unprocessed natural foods (organs, seeds) or fortified processed foods (cereals, instant breakfast powders)

The lowest iron contents often appear in refined or heavily processed foods

DATA QUALITY ASSESSMENT#

Analysis of Missing and Invalid Values#

def analyze_negative_percentages(df):

# Get total rows

total_rows = len(df)

# Select numeric columns, excluding IDs and names

nutrition_cols = [col for col in df.columns if col not in ['food_id', 'food_name', 'food_group','cluster','embedding']]

numeric_cols = df[nutrition_cols].select_dtypes(include=['int64', 'float64']).columns

# Calculate negative value statistics

neg_counts = (df[numeric_cols] < 0).sum()

neg_percentages = (neg_counts / total_rows * 100).round(2)

min_values = df[numeric_cols].min().round(4)

# Create analysis DataFrame

neg_analysis = pd.DataFrame({

'Nutritional Component': neg_percentages.index,

'Negative Count': neg_counts.values,

'Negative Percentage': neg_percentages.values,

'Minimum Value': min_values

})

# Sort and filter to show only columns with negative values

neg_analysis = neg_analysis[neg_analysis['Negative Count'] > 0]

neg_analysis = neg_analysis.sort_values('Negative Percentage', ascending=False)

neg_analysis = neg_analysis.reset_index(drop=True)

# Display results

if len(neg_analysis) > 0:

display(neg_analysis)

print(f"\nFound {len(neg_analysis)} columns with negative values out of {len(numeric_cols)} numeric columns")

else:

print("No negative values found in the dataset")

return

return neg_analysis

analyze_negative_percentages(food_rows)

def analyze_na_percentages(df):

total_rows = len(df)

nutrition_cols = [col for col in df.columns if col not in ['food_id', 'food_name', 'food_group', 'cluster','embedding', 'source_type',]]

na_percentages = (df[nutrition_cols].isna().sum() / total_rows * 100).round(2)

# Check if there are any NA values

if na_percentages.sum() == 0:

print("No NA values found in the dataset")

return

na_analysis = pd.DataFrame({

'Nutritional Component': na_percentages.index,

'NA Percentage': na_percentages.values

})

na_analysis = na_analysis.sort_values('NA Percentage', ascending=False)

na_analysis = na_analysis.reset_index(drop=True)

display(na_analysis)

# print("all food_rows")

# analyze_na_percentages(food_rows)

print("USDA measured food_rows (aka ['source_type'].isin(['1'] )")

analyze_na_percentages(food_rows[food_rows['source_type'].isin(['1'])])

print("USDA estimated food_rows (aka ['source_type'].isin(['4', '7', '8', '9'] ) ")

analyze_na_percentages(food_rows[food_rows['source_type'].isin(['4', '7', '8', '9'])])

No negative values found in the dataset

USDA measured food_rows (aka ['source_type'].isin(['1'] )

| Nutritional Component | NA Percentage | |

|---|---|---|

| 0 | Palmitoleic fatty acid | 10.02 |

| 1 | Oleic fatty acid | 6.13 |

| 2 | Linoleic fatty acid | 6.09 |

| 3 | Thiamin | 2.70 |

| 4 | Riboflavin | 2.68 |

| 5 | Niacin | 2.67 |

| 6 | Copper, Cu | 1.98 |

| 7 | Zinc, Zn | 1.77 |

| 8 | Magnesium, Mg | 1.53 |

| 9 | Phosphorus, P | 1.53 |

| 10 | Potassium, K | 1.15 |

| 11 | Calcium, Ca | 0.07 |

| 12 | Sodium, Na | 0.04 |

| 13 | Ash | 0.00 |

| 14 | Iron, Fe | 0.00 |

| 15 | Protein | 0.00 |

| 16 | Water | 0.00 |

USDA estimated food_rows (aka ['source_type'].isin(['4', '7', '8', '9'] )

| | Nutritional Component | NA Percentage |
|---|---|---|
| 0 | Palmitoleic fatty acid | 16.02 |
| 1 | Oleic fatty acid | 15.64 |
| 2 | Linoleic fatty acid | 15.64 |
| 3 | Copper, Cu | 14.14 |
| 4 | Thiamin | 8.67 |
| 5 | Phosphorus, P | 8.56 |
| 6 | Magnesium, Mg | 8.56 |
| 7 | Niacin | 8.51 |
| 8 | Zinc, Zn | 8.40 |
| 9 | Riboflavin | 7.46 |
| 10 | Potassium, K | 5.64 |
| 11 | Ash | 0.17 |
| 12 | Calcium, Ca | 0.11 |
| 13 | Iron, Fe | 0.00 |
| 14 | Protein | 0.00 |
| 15 | Sodium, Na | 0.00 |
| 16 | Water | 0.00 |
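The two calls above filter `food_rows` once per source group and print separate tables. A single `groupby` can place both groups side by side, which makes the measured-versus-estimated gap (for example, Copper at 1.98% vs 14.14%) easier to scan. A minimal sketch on hypothetical toy data; `na_pct_by_source` and the `groups` mapping are illustrative names, with the source-code grouping copied from the calls above.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data standing in for food_rows: two nutrient columns plus
# the USDA source_type code stored as a string, as in the calls above.
toy = pd.DataFrame({
    "source_type": ["1", "1", "1", "4", "4", "7"],
    "Thiamin":     [0.1, np.nan, 0.3, np.nan, np.nan, 0.2],
    "Copper, Cu":  [0.05, 0.02, 0.01, np.nan, 0.03, np.nan],
})

def na_pct_by_source(df, group_map, nutrient_cols):
    """Percentage of missing values per nutrient, one row per source group.

    group_map maps each source_type code to a label such as 'measured';
    rows whose code is not in group_map are dropped by groupby.
    """
    labels = df["source_type"].map(group_map)
    return (df[nutrient_cols].isna()
            .groupby(labels)
            .mean()          # fraction of missing cells per group
            .mul(100)
            .round(2))

groups = {"1": "measured", "4": "estimated", "7": "estimated",
          "8": "estimated", "9": "estimated"}
result = na_pct_by_source(toy, groups, ["Thiamin", "Copper, Cu"])
print(result.T)  # nutrients as rows, source groups as columns
```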

Missing Data Map#

def missing_values_map3(df):
    # Nutrient columns are prefixed 'n:'. Each cell is assumed to hold an ordinal
    # data-source code; the 3-color palette maps the lowest value to red (missing),
    # the middle value to yellow (assumed), and the highest to green (measured).
    missing_matrix = df.loc[:, df.columns.str.startswith('n:')]

    cluster_map = sns.clustermap(
        data=missing_matrix,
        cmap=sns.color_palette(['red', 'yellow', 'green'], as_cmap=True),
        xticklabels=True,
        yticklabels=False,
        figsize=(32, 16),
        method='average',
        metric='euclidean',
        row_cluster=True,
        col_cluster=True,
        cbar_pos=None  # Removes colorbar
    )

    # Hide dendrograms while keeping the clustered row/column order
    cluster_map.ax_row_dendrogram.set_visible(False)
    cluster_map.ax_col_dendrogram.set_visible(False)

    # Label the heatmap axes explicitly; plt.xlabel/plt.title act on whichever axes is current
    ax = cluster_map.ax_heatmap
    ax.set_xlabel('USDA Tracked Nutrients (148 distinct, clustered)')
    ax.set_ylabel('USDA Tracked Foods (7793 distinct, clustered)')
    ax.figure.suptitle('Nutrient Data Availability Clustermap: USDA Measured (green), USDA Assumed (yellow), Missing Data (red)')
    plt.show()

missing_values_map3(data_source_food_rows)

/root/fnana/fnana_venv/lib/python3.10/site-packages/seaborn/matrix.py:560: UserWarning: Clustering large matrix with scipy. Installing `fastcluster` may give better performance.

warnings.warn(msg)


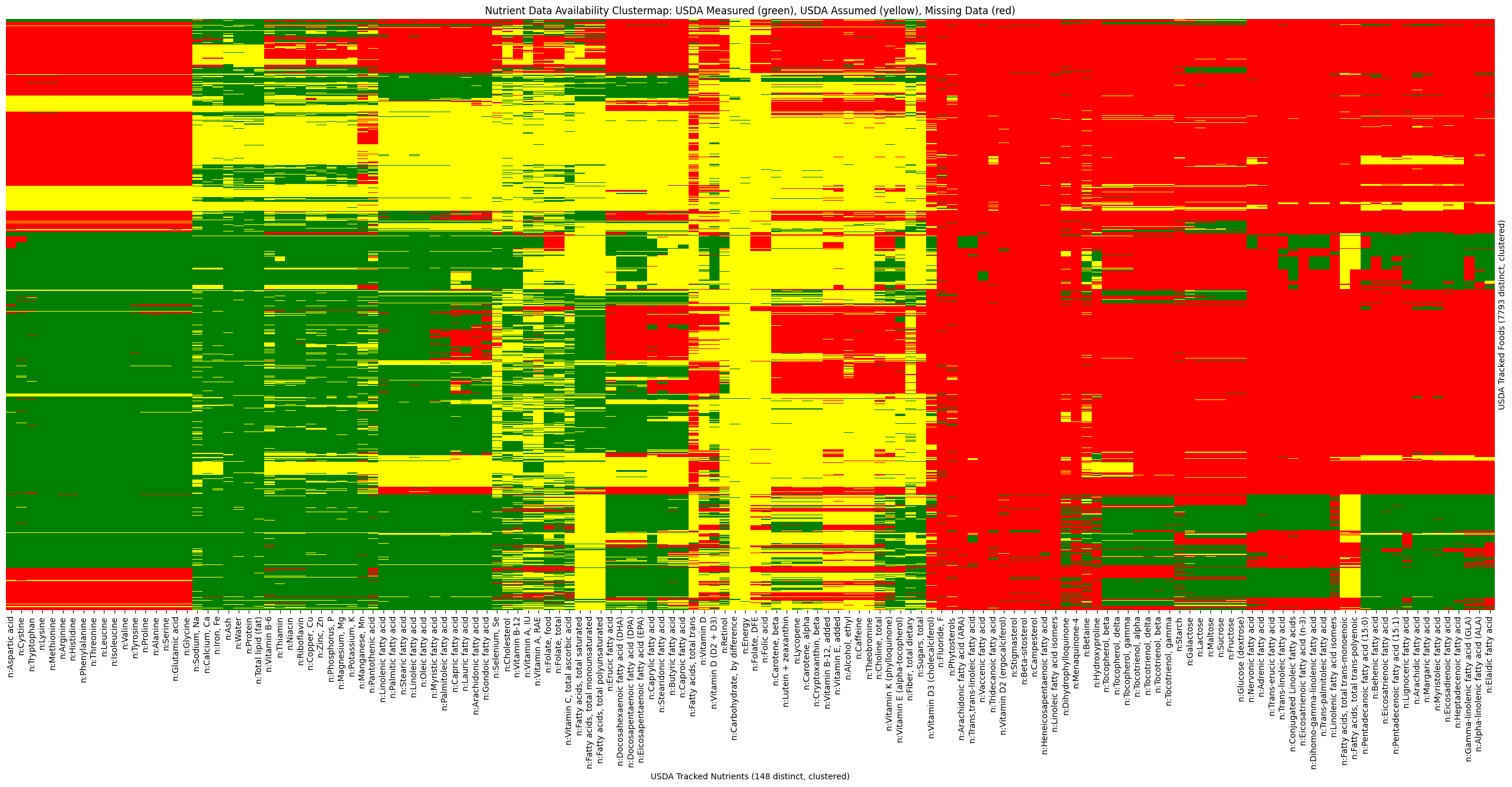

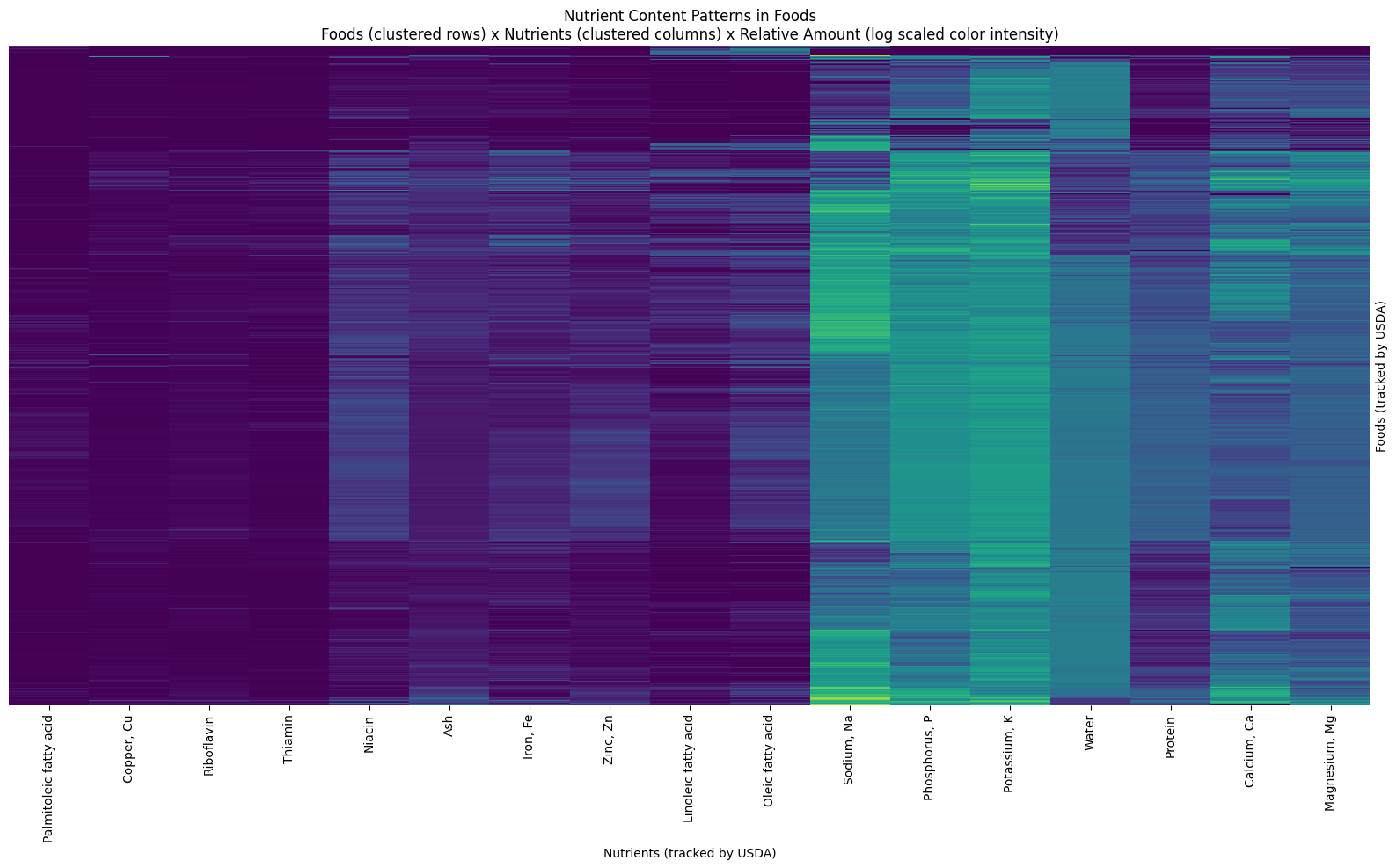

This clustered heatmap visualizes nutrient data availability in the USDA database for 7,793 foods (y-axis) across 148 nutrients (x-axis). The visualization uses color coding: green for measured data (from lab testing), yellow for assumed/calculated data, and red for missing data. Similar patterns in both foods and nutrients are clustered together.

Key findings show systematic data collection patterns:

- Core nutrients are well documented (green) in the left and center regions.
- Complex measurements (fatty acids, specific vitamins, minerals) show more gaps (red), clustered on the right.
- Estimated values (yellow) appear throughout but concentrate in the center.
- Missing data often occurs in related nutrient groups across the same foods (horizontal red bands).

The clustering suggests that data completeness corresponds to measurement priority, cost, and the availability of analytical methods in food analysis.
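The observation about horizontal red bands can be checked numerically rather than by eye: encode each cell as missing (1) or present (0) and correlate those indicators between nutrients. A value near +1 means two nutrients tend to be missing for the same foods. A minimal sketch on hypothetical toy data; it treats assumed values as present and only separates missing from non-missing.

```python
import numpy as np
import pandas as pd

# Hypothetical toy matrix: rows are foods, columns are nutrients, NaN = missing.
toy = pd.DataFrame({
    "Oleic":       [1.0, np.nan, 2.0, np.nan, 3.0, np.nan],
    "Linoleic":    [0.5, np.nan, 0.7, np.nan, 0.9, np.nan],
    "Palmitoleic": [0.1, np.nan, 0.2, np.nan, 0.3, 0.4],
    "Protein":     [10.0, 12.0, np.nan, 8.0, 9.0, 11.0],
})

# Encode missingness as 0/1 and correlate the indicators (phi coefficient).
# Values near +1 mean two nutrients tend to be missing for the same foods;
# negative values mean their gaps fall on different foods.
missing = toy.isna().astype(int)
co_missing = missing.corr()
print(co_missing.round(2))
```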

Following USDA’s SR-Legacy_Doc.pdf, each nutrient value is classified as MEASURED or ASSUMED according to its source code in the USDA `src_cd` table:

SELECT
    ...
    CASE
        WHEN sc.src_cd::int IN (1, 6, 12, 13) THEN 'measured'
        WHEN sc.src_cd::int IN (4, 7, 9, 8, 11, 5) THEN 'assumed'
    END AS data_source
    ...
FROM
    src_cd sc
    ...
;
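When the classification is needed in pandas rather than SQL, the same CASE logic can be expressed as a small mapping function. A sketch, assuming source codes arrive as strings or integers; `classify_src_cd` is a hypothetical helper, and the code sets are copied from the query above. Unlike the SQL `::int` cast, non-numeric codes become missing instead of raising an error. Note also that the NA-percentage tables earlier use narrower groups (`'1'` for measured and `'4'`, `'7'`, `'8'`, `'9'` for estimated), so the two classifications are not interchangeable.

```python
import pandas as pd

# Source-code groupings copied from the SQL CASE expression.
MEASURED_CODES = {1, 6, 12, 13}
ASSUMED_CODES = {4, 5, 7, 8, 9, 11}

def classify_src_cd(src_cd):
    """Map src_cd values to 'measured', 'assumed', or None (any other code)."""
    codes = pd.to_numeric(pd.Series(src_cd), errors="coerce")  # '12' -> 12, 'x' -> NaN
    labels = pd.Series(None, index=codes.index, dtype="object")
    labels[codes.isin(MEASURED_CODES)] = "measured"
    labels[codes.isin(ASSUMED_CODES)] = "assumed"
    return labels

out = classify_src_cd(["1", "4", "12", "99", "5"])
print(out.tolist())
```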

Missing Values Histogram#

def analyze_missing_data(df, figsize=(15, 8), save_path=None):
    # Calculate missing value statistics
    missing_counts = df.isnull().sum()
    total_cells = np.prod(df.shape)
    missing_cells = missing_counts.sum()

    # Percentage of rows missing each column, keeping only columns with gaps
    missing_percentages = (missing_counts / len(df) * 100).round(2)
    missing_percentages = missing_percentages[missing_percentages > 0].sort_values(ascending=True)

    # Create summary statistics
    stats = {
        'total_missing': int(missing_cells),
        'total_cells': int(total_cells),
        'overall_missing_pct': (missing_cells / total_cells * 100),
        'missing_by_column': missing_counts.to_dict(),
        'missing_pct_by_column': missing_percentages.to_dict()
    }

    # Only create the plot if there are missing values
    if len(missing_percentages) > 0:
        plt.figure(figsize=figsize)
        ax = missing_percentages.plot(kind='barh')
        plt.title('Columns with Missing Values', pad=20)
        plt.xlabel('% Missing')
        plt.ylabel('Data Column')

        # Add percentage labels on the bars
        for i, v in enumerate(missing_percentages):
            ax.text(v + 0.5, i, f'{v:.1f}%', va='center')

        plt.tight_layout()

        # Optionally save the figure to disk
        if save_path:
            plt.savefig(save_path)

    # Create detailed missing value report
    missing_report = pd.DataFrame({
        'Missing Count': missing_counts,
        'Missing Percentage': (missing_counts / len(df) * 100).round(2)
    }).sort_values('Missing Percentage', ascending=False)
    missing_report = missing_report[missing_report['Missing Count'] > 0]

    # Add the report to the stats
    stats['missing_report'] = missing_report
    return stats

# Analyze missing data
stats = analyze_missing_data(food_rows,
                             figsize=(15, 6),
                             save_path='missing_data_analysis.png')

# Print summary
# print(f"\nMissing Data Summary:")
# print(f"Total missing values: {stats['total_missing']:,}")
# print(f"Overall missing percentage: {stats['overall_missing_pct']:.2f}%")

# # Show detailed report
# print("\nDetailed Missing Value Report:")
# print(stats['missing_report'])

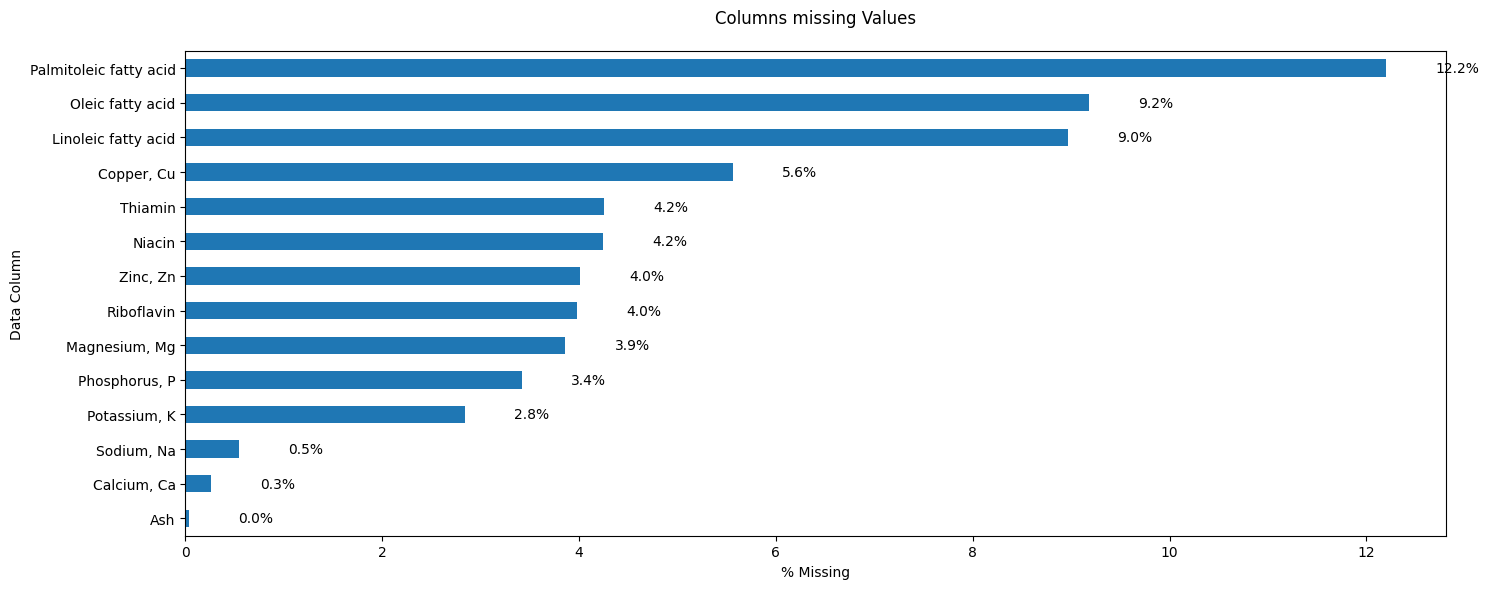

How the Missing Values Histogram (a per-column bar chart) compares to the Missing Data Map

Better aspects:

- Precise quantification: it shows the exact percentage of missing data for each variable (e.g., 9.2% for Oleic fatty acid), which is hard to read off the pattern matrix.
- Clear ranking: it orders variables by the amount of missing data, making it immediately clear which nutrients have the biggest data-quality issues.
- Easier comparison: bar lengths make the relative amount of missing data across nutrients easy to compare.
- Simpler reading: for stakeholders who only need top-level statistics, it is more accessible than the pattern matrix.

Worse aspects:

- Loss of pattern information: you cannot see whether the same foods are missing several nutrients at once, which was visible in the pattern matrix.
- No row-level detail: it hides whether missing data is spread randomly across foods or concentrated in specific food types.
- Hidden relationships: you cannot tell whether related nutrients (such as the different fatty acids) tend to be missing together.
- Less granular: the structure of missingness is compressed into a single percentage per column.

The two visualizations are best used together: the bar chart for quick, overall assessment, and the pattern matrix for deeper investigation of how missing values relate across foods and nutrients.
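Some of the pattern information the bar chart discards can be recovered as a table by counting how many foods share each exact combination of missing and present nutrients. A minimal sketch; `missingness_patterns` is a hypothetical helper, shown on toy data.

```python
import numpy as np
import pandas as pd

def missingness_patterns(df):
    """Count how many rows (foods) share each exact missing/present pattern.

    Each pattern is a string with one character per column, in column order:
    'x' = missing, '.' = present. The most common patterns come first.
    """
    flags = df.isna()
    patterns = flags.apply(lambda row: "".join("x" if v else "." for v in row), axis=1)
    return patterns.value_counts()

# Hypothetical toy data: two foods lack both fatty acids but have Protein.
toy = pd.DataFrame({
    "Oleic":    [1.0, np.nan, np.nan, 4.0],
    "Linoleic": [1.0, np.nan, np.nan, 2.0],
    "Protein":  [np.nan, 1.0, 1.0, 1.0],
})
counts = missingness_patterns(toy)
print(counts)
```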

MISSING DATA IMPUTATION#

Food Group Median Imputation#

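To fill missing values, we replace each missing nutrient value with the median of that nutrient within the food's food group. If an entire food group lacks a nutrient, the overall median of that nutrient is used instead.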

def impute_missing_data1(df, grouped_feature='food_group'):
    df_imputed = df.copy()
    numerical_cols = df.select_dtypes(include=['float64']).columns

    for col in numerical_cols:
        # Fill with the median of the food's own group
        medians = df.groupby(grouped_feature)[col].transform('median')
        df_imputed[col] = df_imputed[col].fillna(medians)

        # Groups with no observed value yield NaN medians; fall back to the overall median
        if df_imputed[col].isna().any():
            overall_median = df[col].median()
            df_imputed[col] = df_imputed[col].fillna(overall_median)

    return df_imputed
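A quick way to confirm the two-stage fill behaves as described is to run the same logic on a toy frame in which one food group has no observed value at all. The function body is reproduced under a hypothetical name so the block runs standalone; the food groups and Calcium values are made up for illustration.

```python
import numpy as np
import pandas as pd

def impute_group_median(df, grouped_feature="food_group"):
    """Same logic as impute_missing_data1: group median first, then overall median."""
    df_imputed = df.copy()
    for col in df.select_dtypes(include=["float64"]).columns:
        medians = df.groupby(grouped_feature)[col].transform("median")
        df_imputed[col] = df_imputed[col].fillna(medians)
        if df_imputed[col].isna().any():
            df_imputed[col] = df_imputed[col].fillna(df[col].median())
    return df_imputed

toy = pd.DataFrame({
    "food_group": ["Dairy", "Dairy", "Dairy", "Grain", "Grain", "Fruit"],
    "Calcium":    [100.0, 120.0, np.nan, 10.0, np.nan, np.nan],
})
out = impute_group_median(toy)
print(out)
```

The Dairy gap becomes the Dairy median (110), the Grain gap becomes 10, and the Fruit row, whose group has no observed Calcium, falls back to the overall median of 100, 120 and 10, which is 100.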

Invoke Imputation Strategy#

imputed_food_rows = impute_missing_data1(food_rows, grouped_feature='food_group')
# imputed_food_rows = impute_missing_data2(food_rows)
# imputed_food_rows
# imputed_food_rows.info()
imputed_food_rows.shape

(7713, 22)

Verify Imputation: Invalid Values#

analyze_negative_percentages(imputed_food_rows)

No negative values found in the dataset

Verify Imputation: N/A Values#

analyze_na_percentages(imputed_food_rows)

No NA values found in the dataset

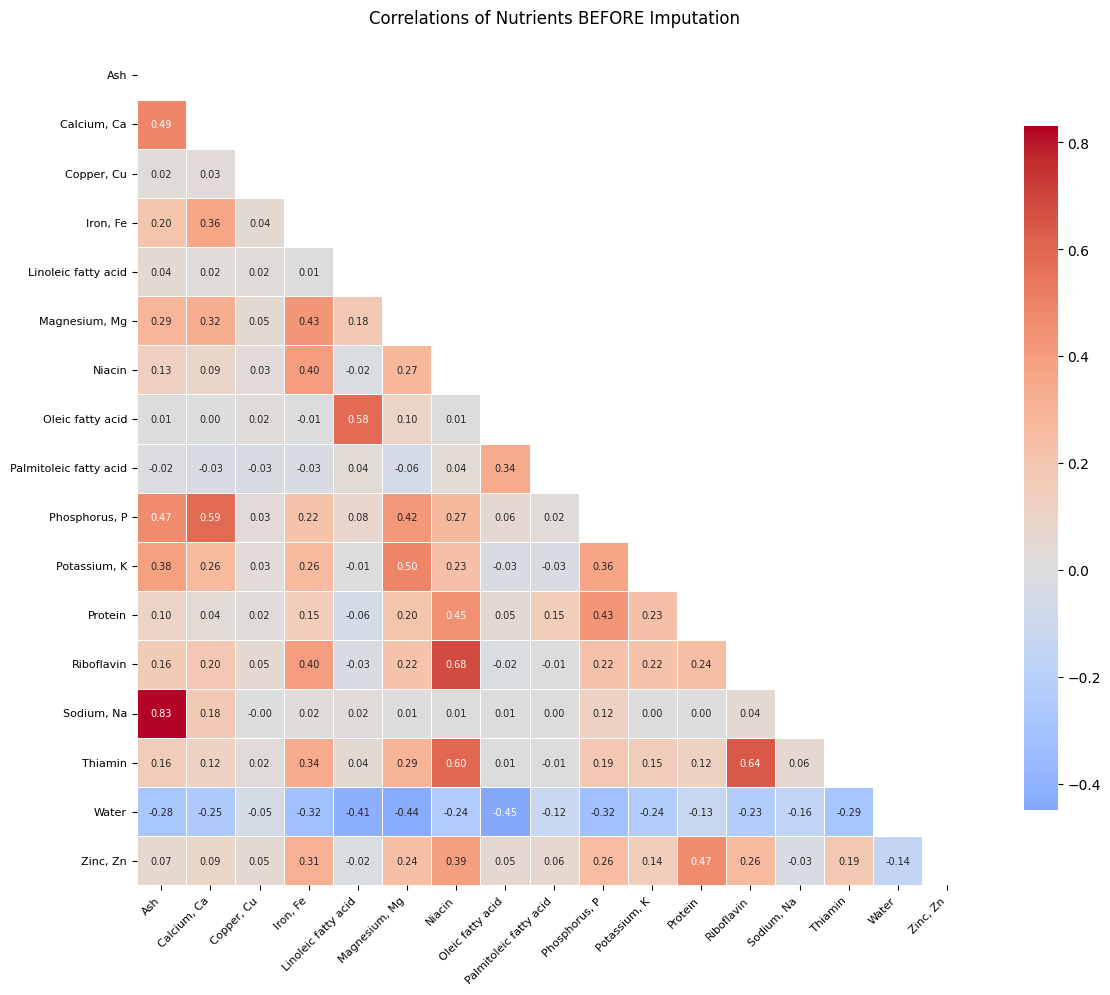

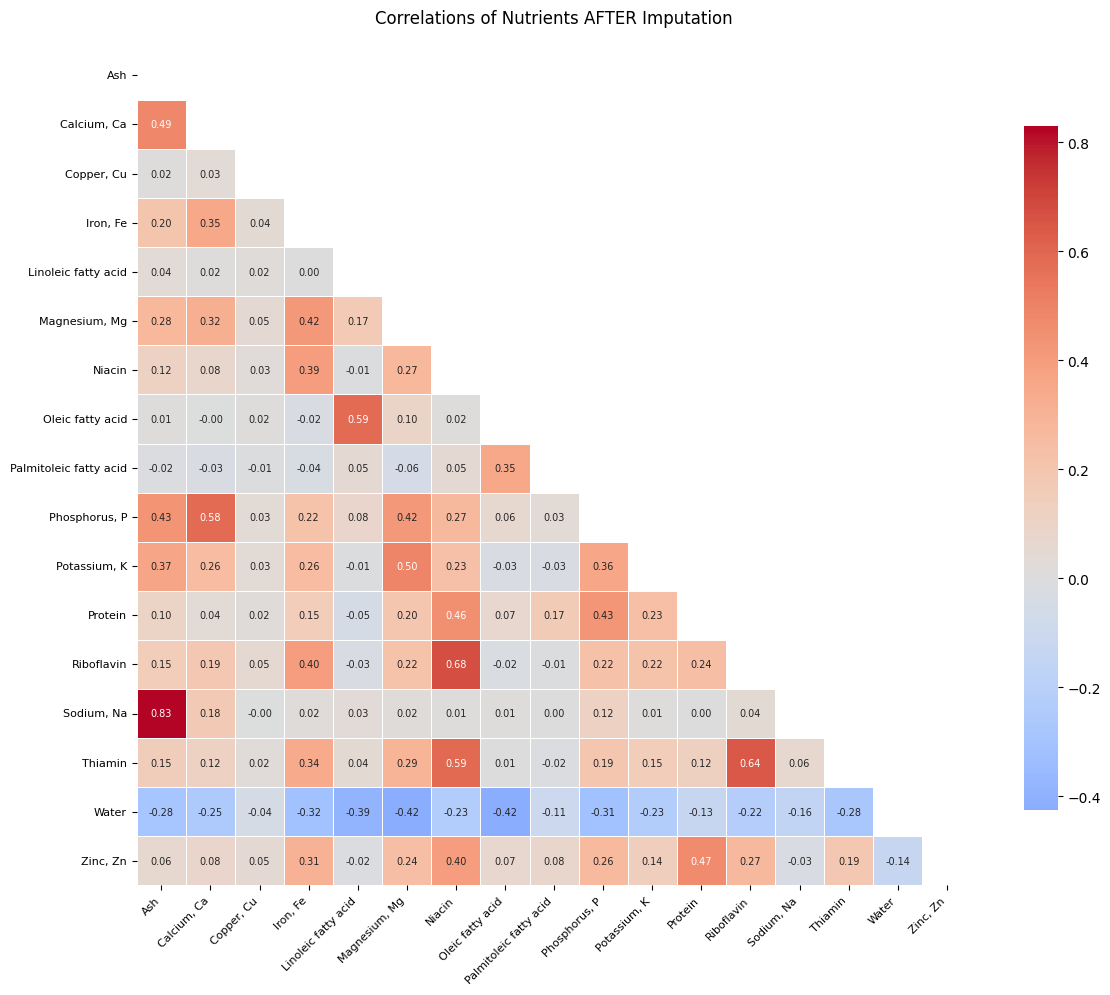

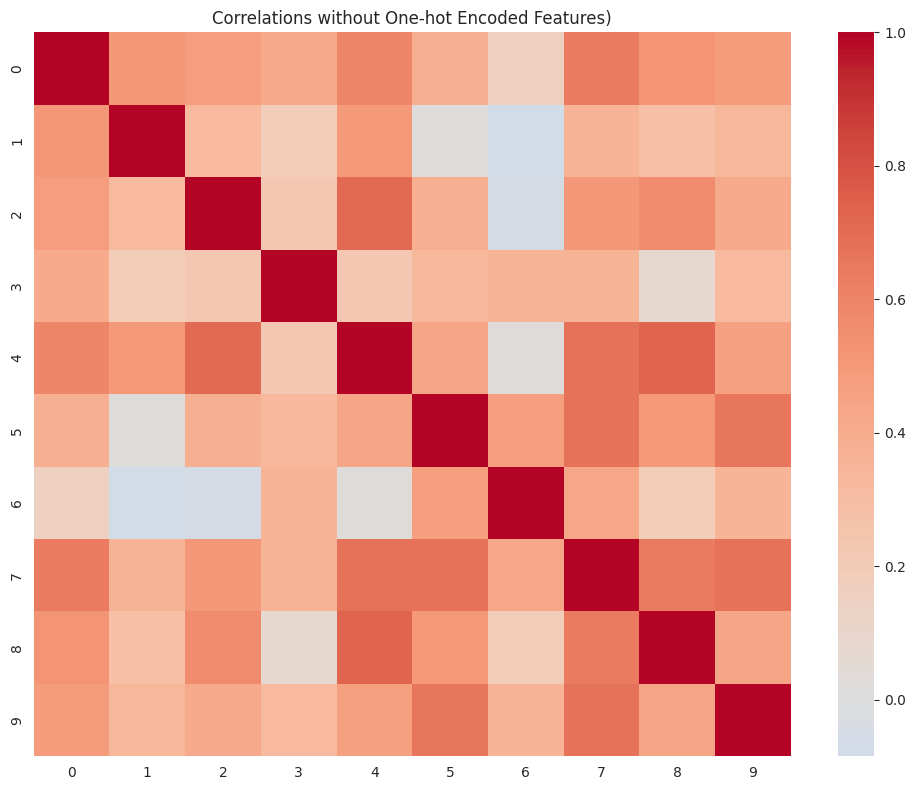
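The two verification cells above rely on printed messages. If the notebook is rerun often, the same checks can be written as a function whose result is asserted, so a regression stops execution instead of scrolling past. A sketch; `imputation_problems` is a hypothetical helper, and it inspects every numeric column rather than a hand-picked list.

```python
import numpy as np
import pandas as pd

def imputation_problems(df):
    """Count remaining NaN and negative values across all numeric columns."""
    numeric = df.select_dtypes(include=[np.number])
    return {
        "missing": int(numeric.isna().sum().sum()),
        "negative": int((numeric < 0).sum().sum()),  # NaN < 0 evaluates to False
    }

# Hypothetical toy frames: one clean, one with a NaN and a negative value.
clean = pd.DataFrame({"food_group": ["A", "B"], "Iron": [1.2, 0.4]})
dirty = pd.DataFrame({"food_group": ["A", "B"], "Iron": [np.nan, -0.1]})
print(imputation_problems(clean))
print(imputation_problems(dirty))
```

In the notebook this would read `assert imputation_problems(imputed_food_rows) == {"missing": 0, "negative": 0}`.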

Verify Imputation: Correlations#

def plot_correlation_matrix(df, title):
    # Keep only numeric columns
    numeric_df = df.select_dtypes(include=[np.number])

    # Correlation matrix (corr() uses pairwise-complete observations, so N/A values are skipped)
    corr_matrix = numeric_df.corr()

    # Mask the upper triangle (including the diagonal), which mirrors the lower one
    mask = np.triu(np.ones_like(corr_matrix, dtype=bool))

    plt.figure(figsize=(12, 10))
    sns.heatmap(corr_matrix,
                mask=mask,
                annot=True,
                cmap='coolwarm',
                center=0,
                fmt='.2f',
                square=True,
                linewidths=0.5,
                annot_kws={'size': 7},
                cbar_kws={"shrink": .8})
    plt.xticks(rotation=45, ha='right', size=8)
    plt.yticks(rotation=0, size=8)
    plt.title(title, pad=20, size=12)
    plt.tight_layout()
    plt.show()

plot_correlation_matrix(food_rows, 'Correlations of Nutrients BEFORE Imputation')
plot_correlation_matrix(imputed_food_rows, 'Correlations of Nutrients AFTER Imputation')

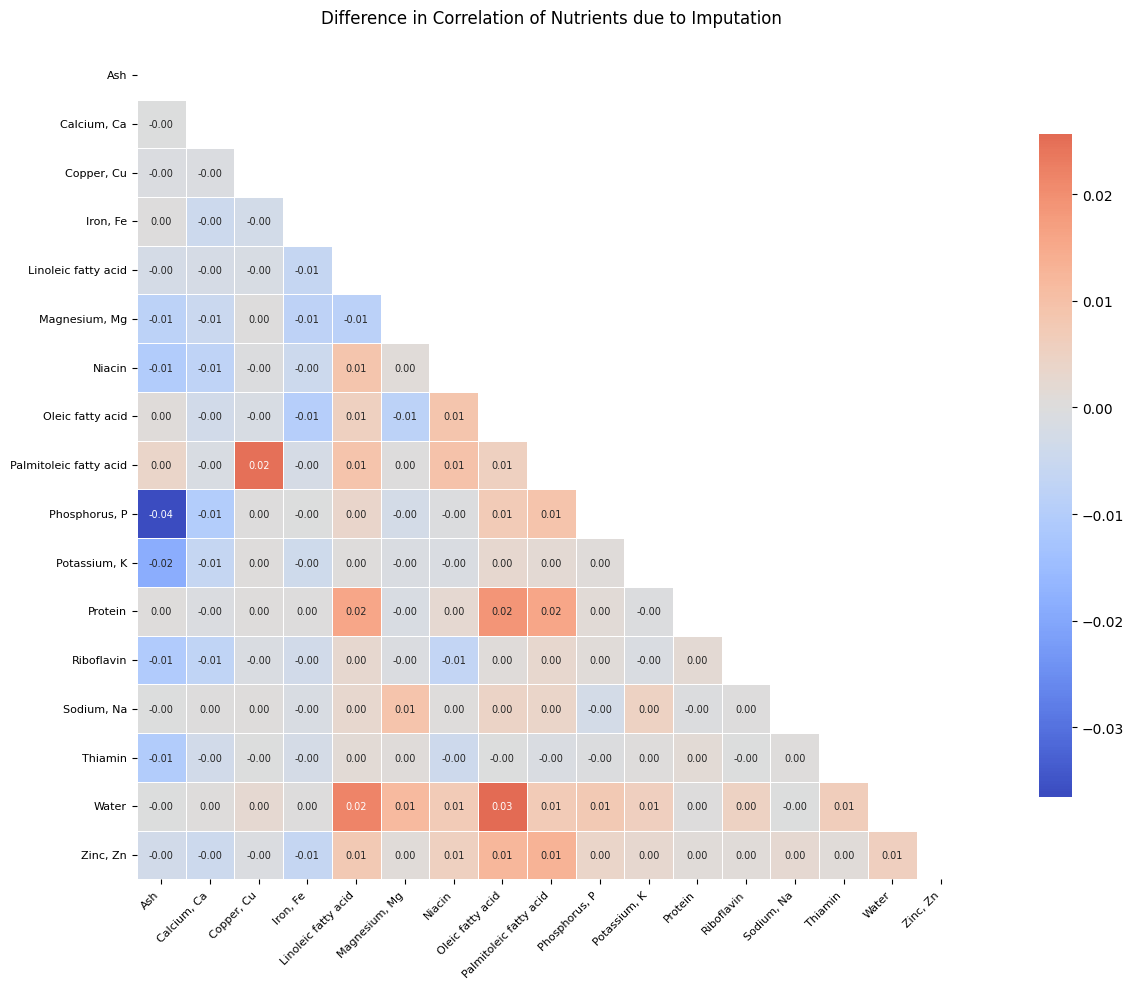

def plot_correlation_matrix_diff(df1, df2):

numeric_df1 = df1.select_dtypes(include=[np.number])

corr_matrix1 = numeric_df1.corr()

numeric_df2 = df2.select_dtypes(include=[np.number])

corr_matrix2 = numeric_df2.corr()

delta = corr_matrix2 - corr_matrix1

# Create mask for upper triangle

mask = np.triu(np.ones_like(delta, dtype=bool))

plt.figure(figsize=(15, 10))

# Create heatmap with mask

sns.heatmap(delta,

mask=mask,

annot=True,

cmap='coolwarm',

center=0,

fmt='.2f',

square=True,

linewidths=0.5,

annot_kws={'size': 7},

cbar_kws={"shrink": .8})

plt.xticks(rotation=45, ha='right', size=8)

plt.yticks(rotation=0, size=8)

plt.title('Difference in Correlation of Nutrients due to Imputation', pad=20, size=12)

plt.tight_layout()

plt.show()

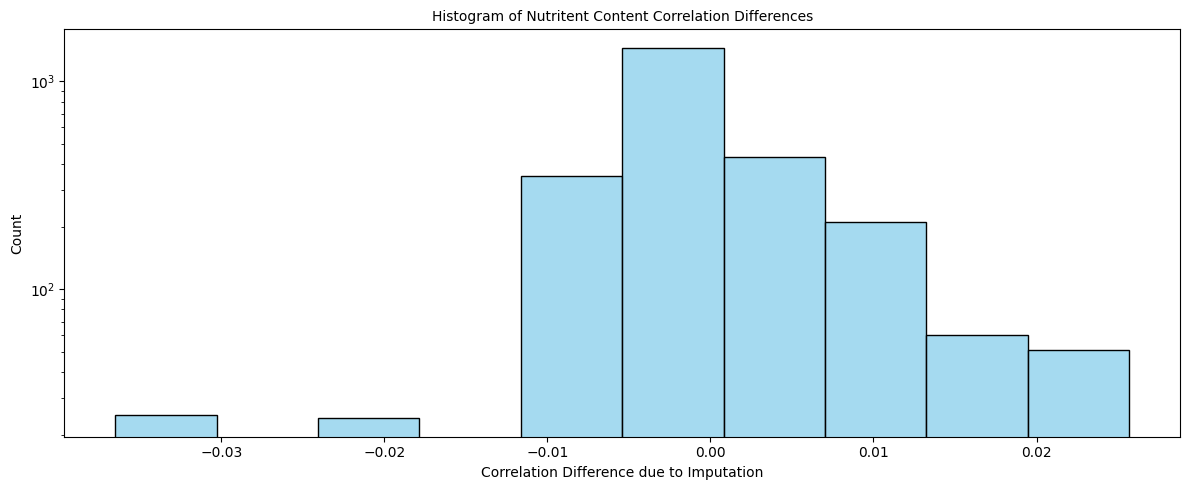

delta_values = delta[mask].values.flatten()

delta_values = delta_values[~np.isnan(delta_values)] # Remove NaN values

# plt.figure(figsize=(24/2, 20/2))

plt.figure(figsize=(12, 5))

sns.histplot(data=delta_values, bins=10, color='skyblue')

plt.title('Histogram of Nutritent Content Correlation Differences', size=10)

plt.xlabel('Correlation Difference due to Imputation')

plt.ylabel('Count')

plt.yscale('log')

plt.tight_layout()

plt.show()

# print(delta[mask])

# TODO add histogram plot of the values from delta[mask]

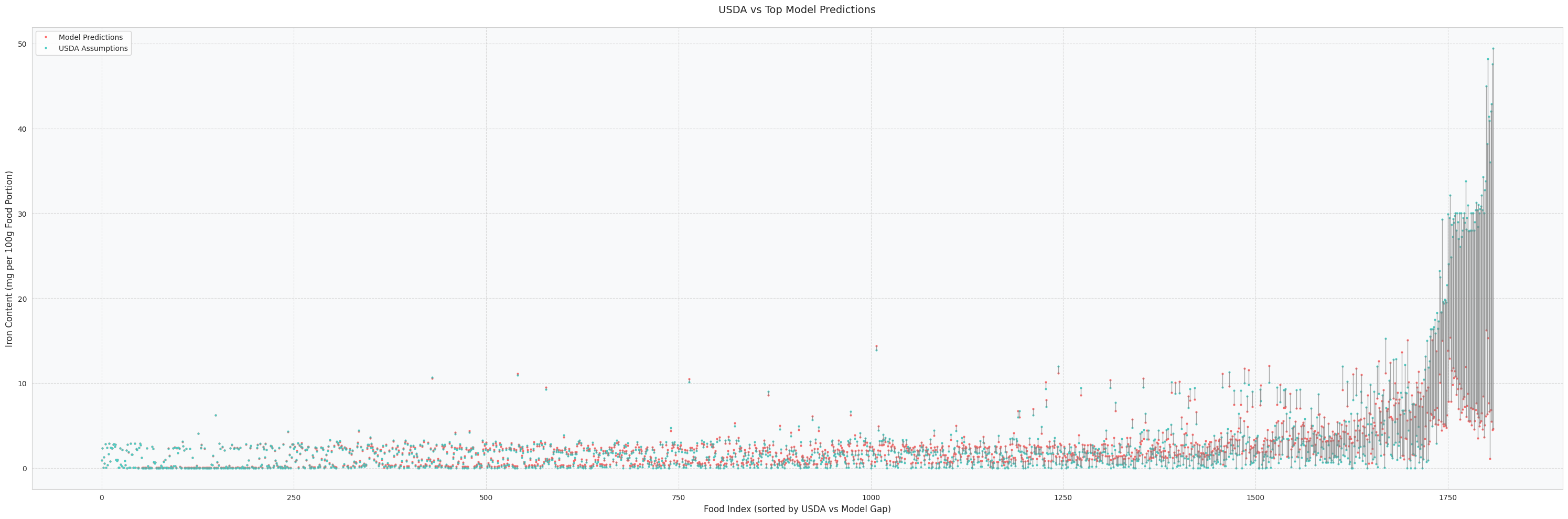

plot_correlation_matrix_diff(food_rows,imputed_food_rows )
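One likely reason for the correlation shifts is that filling gaps with a single constant removes variation from the filled column. Under a missing-completely-at-random assumption, filling a fraction p of one standard-normal variable with its median is expected to scale its correlation with another variable by roughly sqrt(1 - p). A minimal synthetic sketch; it uses a global median rather than the food-group median above, so it illustrates the direction of the effect rather than reproducing the notebook's numbers (group medians keep between-group differences and may even raise some correlations).

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n, rho, p_missing = 1000, 0.9, 0.3

# Two synthetic standard-normal "nutrients" with correlation rho.
x = rng.standard_normal(n)
y = rho * x + np.sqrt(1 - rho**2) * rng.standard_normal(n)
df = pd.DataFrame({"x": x, "y": y})

# Remove about 30% of y completely at random.
df.loc[rng.random(n) < p_missing, "y"] = np.nan

# Before: pairwise-complete correlation, as DataFrame.corr() computes it.
r_before = df["x"].corr(df["y"])
# After: the same pair once y's gaps are filled with its median.
r_after = df["x"].corr(df["y"].fillna(df["y"].median()))
print(round(r_before, 3), round(r_after, 3))
```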